Luzie internship

- This document lists the steps and links to relevant resources, but you will still have to read through the links and find your own way around each task. If you run into a problem or an error, search the documentation or Google it. If you are still stuck after 30 minutes, ask :)

- At the bottom of each section there is a list of terms that might be new to you. If you don't know them, look up their meaning and feel free to write down a definition. There are also some questions that you can try to answer yourself, or we can discuss them at the end of each section.

0. Introduction

- The goal of this internship is to gather a dataset of banana images, label them, train a machine learning model, and show the model on a website

- The Machine Learning goal of this project is to determine whether a banana is ripe or edible

- In the end we will have something like: https://huggingface.co/spaces/anebz/test

Basic new terms and questions:

- Machine Learning

- Dataset

- Model

- Train a model

- Classes in machine learning

- Why only 2 classes? (ripe and edible)

1. Create dataset

Create a dataset of images relevant to our project, in this case bananas. Since we want to detect ripe and edible bananas, we need images of both types. Deep neural networks usually need thousands of images to learn the patterns in them, but in this project we will use a pre-trained model, so a much smaller number of our own images is enough. To obtain decent results, we should have at least 50 images of each class.

This section ends when we have a folder of images on our computer.
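To check the "at least 50 images of each class" rule, a small script can count the images for us. The sketch below assumes a layout that this document does not prescribe: one subfolder per class (for example `ripe/` and `edible/`) inside the dataset folder. The extension list and the function names are also only illustrative.

```python
from pathlib import Path

# Assumed file extensions; adjust to whatever your camera or phone produces.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}
MIN_IMAGES_PER_CLASS = 50  # the minimum suggested in this section


def count_images(dataset_dir):
    """Return {class_name: number_of_images} for each subfolder of dataset_dir."""
    counts = {}
    for class_dir in sorted(Path(dataset_dir).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1
                for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_EXTENSIONS
            )
    return counts


def classes_needing_more(counts, minimum=MIN_IMAGES_PER_CLASS):
    """Return the class names that have fewer images than the minimum."""
    return [name for name, n in counts.items() if n < minimum]
```

Calling `count_images("path/to/dataset")` and passing the result to `classes_needing_more` tells us which class still needs more photos before moving on to labeling.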

New terms and questions:

- Deep neural networks

- Pre-trained model

- Why 50 images and not fewer?

2. Label dataset

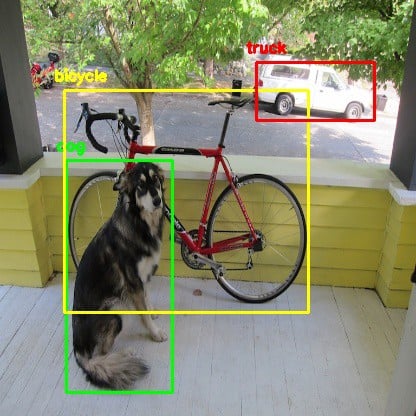

The next step is to label the images, that is, to mark whether each banana is ripe or edible. If we only assign a class to each image, we are doing classification. In Computer Vision, however, another popular task is object detection, where each image needs two kinds of marking: a box showing where the object is, and a label for that box. To practice object detection, we will mark each image with both the box and the label.
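The difference between the two tasks is easier to see as data. The sketch below is only an illustration: the class names, file names and coordinates are made up, and this is not the format Datature uses internally.

```python
from dataclasses import dataclass


@dataclass
class ClassificationLabel:
    """Classification: one label for the whole image."""
    image: str
    label: str


@dataclass
class BoundingBox:
    """Object detection: a rectangle (in pixels) plus the label of what is inside."""
    x_min: int
    y_min: int
    x_max: int
    y_max: int
    label: str

    def area(self):
        """Area of the box in square pixels."""
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


@dataclass
class DetectionAnnotation:
    """An image can hold several boxes, for example two bananas in one photo."""
    image: str
    boxes: list


# Hypothetical file names and coordinates, for illustration only.
classification_example = ClassificationLabel(image="banana_01.jpg", label="ripe")
detection_example = DetectionAnnotation(
    image="banana_02.jpg",
    boxes=[
        BoundingBox(x_min=40, y_min=60, x_max=240, y_max=180, label="ripe"),
        BoundingBox(x_min=260, y_min=50, x_max=420, y_max=200, label="edible"),
    ],
)
```

A classification label says nothing about where the banana is, while a detection annotation can describe several bananas of different classes in the same photo.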

We will use the tool Datature: https://datature.io/

It's a startup that created a web interface to annotate images and train computer vision models. The great part of using this is that we don't need any code at all. Resources:

- Blogpost about labeling and training a face mask detection model: https://datature.io/blog/train-and-visualize-face-mask-detection-model

- Datature Nexus documentation: https://docs.datature.io/

Use the resources above to find out how to:

- Create Datature Nexus account (free trial)

- Create a project

- Upload images

- Create classes

- Annotate images

  - Create rectangular boxes in images

  - Assign a class to each box

This section is finished when all the images have been annotated and reviewed in Nexus.

New terms and questions:

- Computer vision

- Object detection

- Bounding box

- Tag distribution

- Risks of class imbalance

3. Train model

Once we have our images, we can train our model! We will have to create a 'Workflow' in Nexus. Try to use the documentation and blogpost to do the following steps:

- Build training workflow

- Select train-test split ratio

- Select augmentation

- Model settings

- Train model

- Monitor model

  - Loss

  - Precision and recall

- Export model

This section is finished when a .zip file with the trained model parameters has been exported to the computer.
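Nexus performs the train-test split for us once we pick a ratio, so no code is needed here. To get a feeling for what the ratio means, here is a minimal sketch of a random split. The function name, the 0.2 default and the seed are illustrative choices, not values taken from Nexus.

```python
import random


def train_test_split(items, test_ratio=0.2, seed=42):
    """Shuffle items and split them into (train, test) lists."""
    if not 0 < test_ratio < 1:
        raise ValueError("test_ratio must be between 0 and 1")
    shuffled = list(items)                 # copy, so the input is not modified
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    n_test = round(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]
```

With 100 images and a ratio of 0.2, the model is trained on 80 images and evaluated on the 20 images it has never seen.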

New terms and questions:

- Train-test split ratio

- Augmentation

- Checkpoint

- Model training loss

  - Should the loss go up/down?

- What pre-trained model did the workflow use?

- Precision and recall

  - Should precision and recall go up/down?
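As a starting point for the precision and recall terms, the sketch below shows the standard definitions computed from counts. How Nexus decides that a predicted box counts as correct is not covered in this document, so the counts here are simply given as inputs.

```python
def precision(true_positives, false_positives):
    """Of all boxes the model predicted, what fraction were correct?"""
    predicted = true_positives + false_positives
    return true_positives / predicted if predicted else 0.0


def recall(true_positives, false_negatives):
    """Of all bananas that were really there, what fraction did the model find?"""
    actual = true_positives + false_negatives
    return true_positives / actual if actual else 0.0
```

For example, a model that predicts 10 boxes of which 8 are correct has a precision of 8 / 10. If there were 16 bananas in total, it found only 8 of them, so its recall is 8 / 16.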

4. Create website

We will use Streamlit to create the website: https://streamlit.io/. Streamlit lets us build a website using only Python.

You can find the website application prepared for you in app.py. That is the Python file that uses the Streamlit library to do the following steps:

- Load model from the saved_model/ folder

- User interface for the user to upload an image

- Open image, resize, convert to correct format for the model

- Obtain results from the model prediction, process the data

- Iterate prediction boxes and plot them on top of the image

- Show the result image and the prediction boxes
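The "process the data" and "iterate prediction boxes" steps can be sketched in plain Python. This is not the code from app.py. It assumes each prediction is a dictionary with `label`, `score` and `box` keys, and that boxes are normalized `(y_min, x_min, y_max, x_max)` values between 0 and 1. All of these names and the 0.5 default are assumptions for illustration.

```python
def keep_confident(detections, threshold=0.5):
    """Keep only the detections whose score reaches the confidence threshold."""
    return [d for d in detections if d["score"] >= threshold]


def to_pixels(box, image_width, image_height):
    """Convert a normalized (y_min, x_min, y_max, x_max) box to pixel corners.

    Returns (x_min, y_min, x_max, y_max) in pixels, a common order for drawing.
    """
    y_min, x_min, y_max, x_max = box
    return (
        round(x_min * image_width),
        round(y_min * image_height),
        round(x_max * image_width),
        round(y_max * image_height),
    )
```

Raising the threshold hides uncertain boxes but may also hide some correct ones, which is why this kind of parameter is worth checking against our own images.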

You are not required to write any code: everything is done for you and the code should work. However, please read through the Python file and its comments, and try to get a general understanding of what the code does. Pay special attention to the #TODO lines, which mark parameters that we might have to change depending on our project settings.

If you have Python installed on your computer, you can install Streamlit from the command line:

pip install streamlit

Install the libraries that we need to run the Streamlit app. These libraries are in the file requirements.txt:

pip install -r requirements.txt

And then run the app.py file:

cd path/to/project/

streamlit run app.py

This will open a new browser window where we can see the website and interact with it: upload images and check the results.

This section is done when the code is more or less understood, and we have managed to run the app locally and interact with the model in our browser.

New terms and questions:

- What does streamlit do?

- What is pip?

- What is the confidence threshold?

5. Host it in the cloud

For now the script runs on our computer, but we would like to put it on the Internet so that anyone can open a link and get predictions from the model. There are many ways to deploy an app to the cloud; we will use a simple one, Huggingface Spaces: https://huggingface.co/spaces/. This is a website from Huggingface where people can showcase their models and let others interact with them. Sort the spaces by 'Most liked' and explore some of them :)

- Create Huggingface account

- Create a Space

  - Write a name for the Space. Remember, this website will be public, so choose a name that matches what the app does! For example: banana-analysis or something like that

  - Select Streamlit as the Space SDK

  - Choose Public

- When the Space has been created, clone the repository to your local computer: git clone ...

- Open the folder in Visual Studio Code, open README.md and change the emoji for the project

- Copy the model files (saved_model/ folder, label_map.pbtxt file) into this folder

- Copy app.py and requirements.txt into this folder

Once all the files we need are in the folder, we can push them to the Huggingface Space. Open Git Bash and enter these commands:

git add .

git commit -m "Added files"

git push

The push will take some time to upload the files, especially the model files. After the git push is complete, the Space will take a few minutes to build the application and show our Streamlit app.

This section is finished when the Streamlit app is displayed in the Space and we can upload an image and obtain predictions.

New terms and questions:

- What is Huggingface? What do they do?

- What is git? Why is it useful?

- What is git add, git commit, git push?

6. Improve system

We have gathered a dataset, labeled the images, trained a model, exported model parameters, created a Streamlit app and hosted it in Huggingface Spaces. Now everyone can access this link and try their images on our app.

We will review the Space together, try a test dataset and check whether the results are satisfactory. The dataset may have to be revised: you may need to add more images, label them, train the model again, export the new model's parameters and update these files in the Space. We might have to do this a few times.
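When trying a test dataset, it helps to note which class the model gets wrong most often, because that class probably needs more images. The sketch below makes a simplifying assumption: each test image contains one banana, so we compare one expected label with one predicted label per image. The function name is illustrative.

```python
from collections import Counter


def errors_by_class(expected, predicted):
    """Count, per expected class, how many test images got a different prediction."""
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have the same length")
    return Counter(e for e, p in zip(expected, predicted) if e != p)
```

If the result shows many more errors for one class than for the other, adding and labeling more images of that class is a reasonable first thing to try before training again.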

In the end, the internship is finished when we have a robust model that can handle all types of images and returns appropriate boxes and labels. Now we can show our app to the world! 🤩