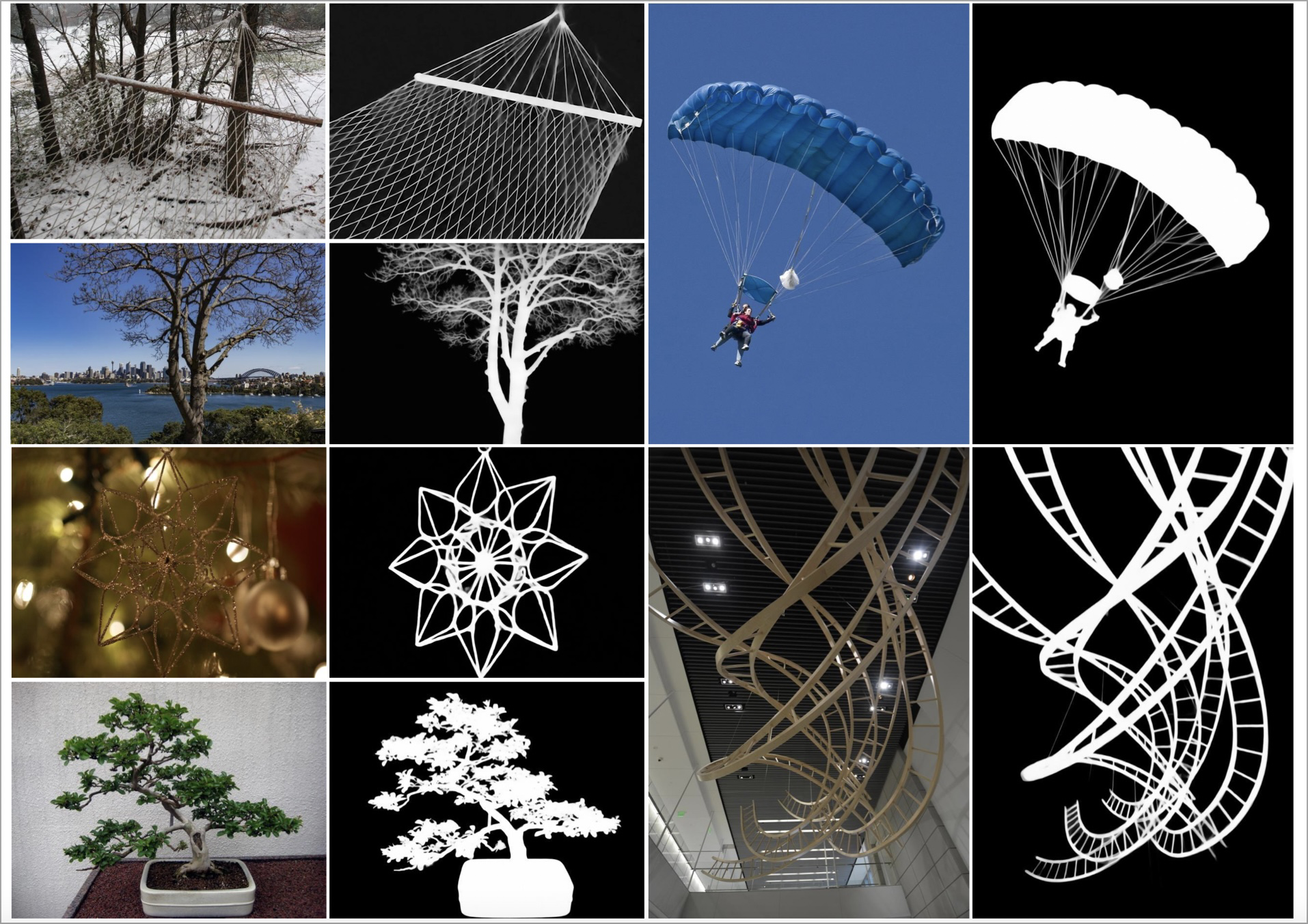

High-Precision Dichotomous Image Segmentation via Probing Diffusion Capacity

This repository contains the official implementation for the paper "High-Precision Dichotomous Image Segmentation via Probing Diffusion Capacity" (ICLR 2025).

How to use

For the complete training and inference process, please refer to our GitHub Repository. This section specifically guides you on loading weights from Hugging Face.

Install Packages:

pip install torch==2.2.0 torchvision==0.17.0 torchaudio==2.2.0 --index-url https://download.pytorch.org/whl/cu118

pip install -r requirements.txt

pip install -e diffusers-0.30.2/

Load DiffDIS weights from Hugging Face:

import torch

from diffusers import (

DiffusionPipeline,

DDPMScheduler,

UNet2DConditionModel,

AutoencoderKL,

)

from transformers import CLIPTextModel, CLIPTokenizer

hf_model_path = 'qianyu1217/diffdis'

vae = AutoencoderKL.from_pretrained(hf_model_path,subfolder='vae',trust_remote_code=True)

scheduler = DDPMScheduler.from_pretrained(hf_model_path,subfolder='scheduler')

text_encoder = CLIPTextModel.from_pretrained(hf_model_path,subfolder='text_encoder')

tokenizer = CLIPTokenizer.from_pretrained(hf_model_path,subfolder='tokenizer')

unet = UNet2DConditionModel_diffdis.from_pretrained(hf_model_path,subfolder="unet",

in_channels=8, sample_size=96,

low_cpu_mem_usage=False,

ignore_mismatched_sizes=False,

class_embed_type='projection',

projection_class_embeddings_input_dim=4,

mid_extra_cross=True,

mode = 'DBIA',

use_swci = True)

pipe = DiffDISPipeline(unet=unet,

vae=vae,

scheduler=scheduler,

text_encoder=text_encoder,

tokenizer=tokenizer)

Citation

@article{DiffDIS,

title={High-Precision Dichotomous Image Segmentation via Probing Diffusion Capacity},

author={Yu, Qian and Jiang, Peng-Tao and Zhang, Hao and Chen, Jinwei and Li, Bo and Zhang, Lihe and Lu, Huchuan},

journal={arXiv preprint arXiv:2410.10105},

year={2024}

}

- Downloads last month

- 9

Inference Providers

NEW

This model isn't deployed by any Inference Provider.

🙋

Ask for provider support