---
license: apache-2.0
tags:
- vision-transformer
- image-classification
- pytorch
- timm
- mlp-mixer
- gravitational-lensing
- strong-lensing
- astronomy
- astrophysics
datasets:
- parlange/gravit-j24
metrics:
- accuracy
- auc
- f1
paper:
- title: 'GraViT: A Gravitational Lens Discovery Toolkit with Vision Transformers'
  url: https://arxiv.org/abs/2509.00226
  authors: Parlange et al.
model-index:
- name: MLP-Mixer-b3
  results:
  - task:
      type: image-classification
      name: Strong Gravitational Lens Discovery
    dataset:
      type: common-test-sample
      name: Common Test Sample (More et al. 2024)
    metrics:
    - type: accuracy
      value: 0.8118
      name: Average Accuracy
    - type: auc
      value: 0.746
      name: Average AUC-ROC
    - type: f1
      value: 0.4426
      name: Average F1-Score
---

# 🌌 mlp-mixer-gravit-b3

🔭 This model is part of GraViT: Transfer Learning with Vision Transformers and MLP-Mixer for Strong Gravitational Lens Discovery

🔗 GitHub Repository: https://github.com/parlange/gravit

## 🛰️ Model Details

- 🤖 Model Type: MLP-Mixer
- 🧪 Experiment: B3 - J24-all-blocks
- 🌌 Dataset: J24
- 🪐 Fine-tuning Strategy: all-blocks

## 💻 Quick Start

```python
import torch
import timm

# Load the model directly from the Hugging Face Hub
model = timm.create_model(
    'hf-hub:parlange/mlp-mixer-gravit-b3',
    pretrained=True
)
model.eval()

# Example inference on a random 224x224 RGB input
dummy_input = torch.randn(1, 3, 224, 224)
with torch.no_grad():
    output = model(dummy_input)
    predictions = torch.softmax(output, dim=1)

# Class index 1 is the lens class
print(f"Lens probability: {predictions[0][1]:.4f}")
```
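For a batch of cutouts, the same softmax can be turned into hard lens / non-lens labels. The sketch below assumes, as the Quick Start does, that class index 1 is the lens class; the helper name `lens_decisions` and the 0.5 decision threshold are illustrative choices, not values taken from the GraViT paper.

```python
import torch


def lens_decisions(logits: torch.Tensor, threshold: float = 0.5):
    """Convert (N, 2) logits into lens probabilities and boolean lens decisions.

    Hypothetical helper: assumes column 1 is the lens class (as in the
    Quick Start); the default threshold of 0.5 is illustrative only.
    """
    probs = torch.softmax(logits, dim=1)[:, 1]  # P(lens) for each image
    return probs, probs >= threshold


# Hand-written logits for three images, standing in for model(batch)
logits = torch.tensor([[2.0, -1.0], [0.0, 0.0], [-3.0, 3.0]])
probs, is_lens = lens_decisions(logits)
print(probs)
print(is_lens)
```

With the model loaded above, `logits` would come from `model(batch)` inside `torch.no_grad()`, for a batch tensor of shape `(N, 3, 224, 224)`. In practice the threshold trades purity against completeness of the lens candidate list, so it is usually tuned on validation data rather than fixed at 0.5.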

## ⚡️ Training Configuration

Training Dataset: J24 (Jaelani et al. 2024)

Fine-tuning Strategy: all-blocks

| 🔧 Parameter | 📝 Value |
|---|---|
| Batch Size | 192 |
| Learning Rate | Adaptive (AdamW with ReduceLROnPlateau) |
| Epochs | 100 |
| Patience | 10 |
| Optimizer | AdamW |
| Scheduler | ReduceLROnPlateau |
| Image Size | 224 × 224 |
| Fine Tune Mode | all_blocks |
| Stochastic Depth Probability | 0.1 |
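The table can be read as the PyTorch training skeleton below. It is a minimal sketch under several assumptions: the initial learning rate (`1e-4`) and the ReduceLROnPlateau `factor`/`patience` are hypothetical, "Patience = 10" is interpreted as early-stopping patience on validation loss, "all-blocks" is interpreted as keeping every parameter trainable, and a tiny residual network (`ToyResidualNet`, hypothetical) on synthetic data stands in for MLP-Mixer and J24. Stochastic depth is written out by hand as per-sample dropping of a residual branch; in timm the same idea is exposed through the `drop_path_rate` argument of `timm.create_model`.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

BATCH_SIZE = 192  # from the table
MAX_EPOCHS = 100  # from the table
PATIENCE = 10     # assumed to be early-stopping patience
DROP_PATH = 0.1   # stochastic depth probability from the table
LR = 1e-4         # hypothetical: the card does not list an initial learning rate


def drop_path(x: torch.Tensor, p: float, training: bool) -> torch.Tensor:
    """Stochastic depth: drop the whole residual branch per sample with probability p."""
    if p == 0.0 or not training:
        return x
    keep = 1.0 - p
    mask = x.new_empty((x.shape[0],) + (1,) * (x.dim() - 1)).bernoulli_(keep)
    return x * mask / keep  # rescale so the expected output is unchanged


class ToyResidualNet(nn.Module):
    """Hypothetical stand-in for MLP-Mixer: one residual MLP block and a 2-class head."""

    def __init__(self, dim: int = 16, p: float = DROP_PATH):
        super().__init__()
        self.p = p
        self.block = nn.Sequential(nn.LayerNorm(dim), nn.Linear(dim, dim), nn.GELU())
        self.head = nn.Linear(dim, 2)

    def forward(self, x):
        x = x + drop_path(self.block(x), self.p, self.training)
        return self.head(x)


# Synthetic data in place of J24 cutouts: the label depends on the first feature
x = torch.randn(2000, 16)
y = (x[:, 0] > 0).long()
train_dl = DataLoader(TensorDataset(x[:1600], y[:1600]), batch_size=BATCH_SIZE, shuffle=True)
val_x, val_y = x[1600:], y[1600:]

model = ToyResidualNet()
# All parameters are passed to the optimizer: nothing is frozen
optimizer = torch.optim.AdamW(model.parameters(), lr=LR)
# Hypothetical scheduler settings: cut the LR by 10x after 3 epochs without improvement
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode="min", factor=0.1, patience=3)
loss_fn = nn.CrossEntropyLoss()

best_val, best_epoch, bad_epochs = float("inf"), -1, 0
for epoch in range(MAX_EPOCHS):
    model.train()
    for xb, yb in train_dl:
        optimizer.zero_grad()
        loss_fn(model(xb), yb).backward()
        optimizer.step()

    model.eval()
    with torch.no_grad():
        val_loss = loss_fn(model(val_x), val_y).item()
    scheduler.step(val_loss)  # lower the LR when validation loss plateaus

    if val_loss < best_val:
        best_val, best_epoch, bad_epochs = val_loss, epoch, 0
        best_state = {k: v.clone() for k, v in model.state_dict().items()}
    else:
        bad_epochs += 1
        if bad_epochs >= PATIENCE:  # early stopping
            break

model.load_state_dict(best_state)  # restore the best checkpoint
print(f"best epoch {best_epoch}, val loss {best_val:.4f}")
```

Restoring the best checkpoint at the end means the reported model corresponds to the lowest validation loss, not necessarily to the last epoch that was run.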

## 📈 Training Curves

## 🏁 Final Epoch Training Metrics

| Metric | Training | Validation |
|---|---|---|
| 📉 Loss | 0.0373 | 0.0536 |
| 🎯 Accuracy | 0.9863 | 0.9861 |
| 📊 AUC-ROC | 0.9989 | 0.9990 |
| ⚖️ F1 Score | 0.9863 | 0.9860 |
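For reference, the three metrics in this table can be computed from binary labels and predicted lens probabilities as below. This is a dependency-free sketch, not necessarily the code used to produce these numbers: it assumes label 1 = lens and a 0.5 threshold for accuracy and F1, and computes AUC-ROC with the rank-based (Mann-Whitney) formulation, counting ties as one half. The helper name `binary_metrics` is hypothetical.

```python
from typing import Sequence


def binary_metrics(y_true: Sequence[int], p_lens: Sequence[float], threshold: float = 0.5):
    """Accuracy, AUC-ROC and F1 for binary labels (1 = lens) and lens probabilities.

    AUC requires at least one lens and one non-lens example.
    """
    y_pred = [1 if p >= threshold else 0 for p in p_lens]
    tp = sum(1 for t, q in zip(y_true, y_pred) if t == 1 and q == 1)
    fp = sum(1 for t, q in zip(y_true, y_pred) if t == 0 and q == 1)
    fn = sum(1 for t, q in zip(y_true, y_pred) if t == 1 and q == 0)
    accuracy = sum(1 for t, q in zip(y_true, y_pred) if t == q) / len(y_true)

    # F1 = 2TP / (2TP + FP + FN); taken as 0 when there are no true or predicted lenses
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0

    # AUC = probability that a random lens scores higher than a random non-lens
    pos = [p for t, p in zip(y_true, p_lens) if t == 1]
    neg = [p for t, p in zip(y_true, p_lens) if t == 0]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    auc = wins / (len(pos) * len(neg))
    return accuracy, auc, f1


acc, auc, f1 = binary_metrics([0, 0, 1, 1], [0.1, 0.6, 0.4, 0.9])
print(acc, auc, f1)  # → 0.5 0.75 0.5
```

The pairwise AUC loop is quadratic in the number of examples, which is fine for a sketch; for large test sets a sorting-based implementation (for example `sklearn.metrics.roc_auc_score`) is the usual choice. Note that accuracy and F1 depend on the threshold, while AUC-ROC does not.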

☑️ Evaluation Results

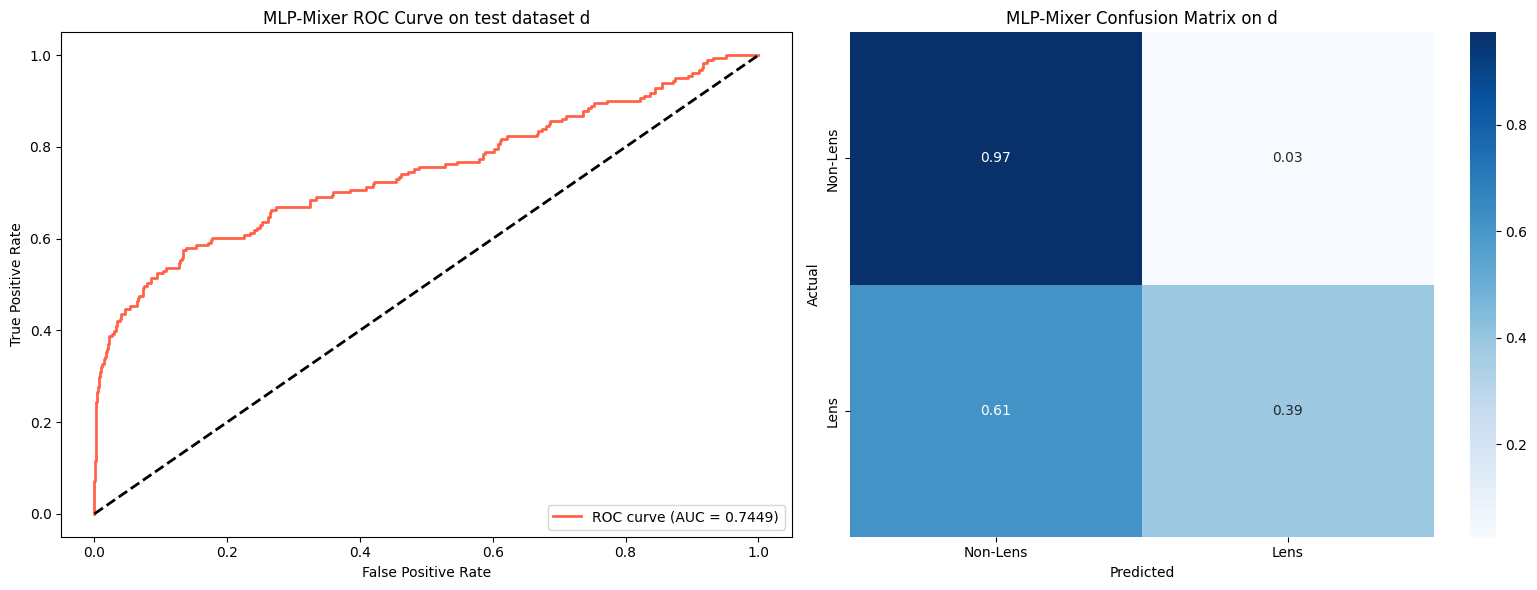

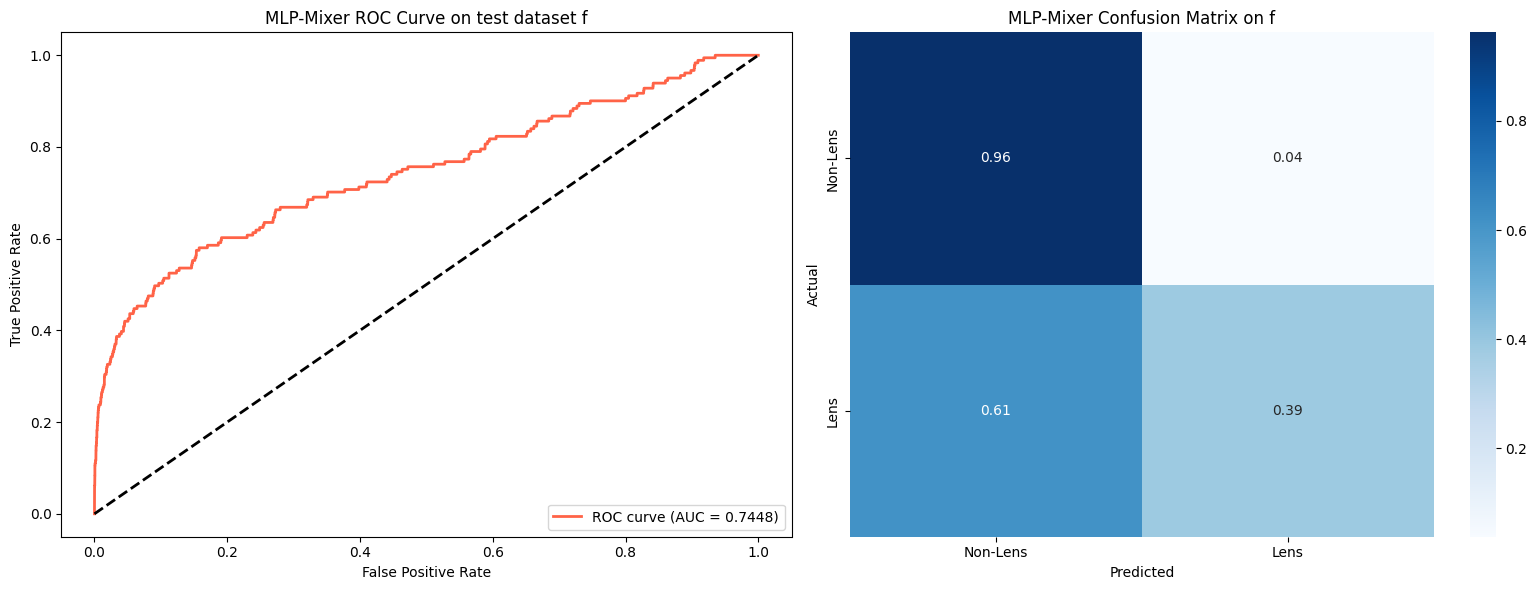

ROC Curves and Confusion Matrices

Performance across all test datasets (a through l) in the Common Test Sample (More et al. 2024):

## 📋 Performance Summary

Average performance across the 12 test datasets of the Common Test Sample (More et al. 2024):

| Metric | Value |
|---|---|
| 🎯 Average Accuracy | 0.8118 |
| 📈 Average AUC-ROC | 0.7460 |
| ⚖️ Average F1-Score | 0.4426 |
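The summary values are averages over the 12 Common Test Sample datasets (a through l). Assuming a plain, unweighted mean of the per-dataset scores (the card says "average" but does not state a weighting), the aggregation amounts to the sketch below. The helper name `average_metrics` and the dictionary layout are hypothetical; real per-dataset scores would come from the evaluation shown above.

```python
from statistics import mean

TEST_SETS = "abcdefghijkl"  # the 12 Common Test Sample datasets, a through l


def average_metrics(per_dataset: dict) -> dict:
    """Unweighted mean of each metric over all 12 test datasets.

    `per_dataset` maps a dataset letter to {"accuracy": ..., "auc": ..., "f1": ...}.
    """
    missing = set(TEST_SETS) - set(per_dataset)
    if missing:
        raise ValueError(f"missing test sets: {sorted(missing)}")
    metric_names = per_dataset[TEST_SETS[0]].keys()
    return {m: mean(per_dataset[d][m] for d in TEST_SETS) for m in metric_names}
```

Because every dataset gets equal weight, a small test set with a low F1 pulls the average down as much as a large one, which is one reason an averaged F1 can sit well below the averaged accuracy.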

## 📘 Citation

If you use this model in your research, please cite:

```bibtex
@misc{parlange2025gravit,
  title={GraViT: Transfer Learning with Vision Transformers and MLP-Mixer for Strong Gravitational Lens Discovery},
  author={René Parlange and Juan C. Cuevas-Tello and Octavio Valenzuela and Omar de J. Cabrera-Rosas and Tomás Verdugo and Anupreeta More and Anton T. Jaelani},
  year={2025},
  eprint={2509.00226},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2509.00226},
}
```

## Model Card Contact

For questions about this model, please contact the author via GitHub: https://github.com/parlange/