Initial commit

This view is limited to 50 files because it contains too many changes.

- .girattributes +2 -0

- .github/workflows/ci.yml +121 -0

- .github/workflows/clear-cache.yml +29 -0

- .github/workflows/python-publish.yml +37 -0

- .gitignore +153 -0

- CITATION.cff +33 -0

- HISTORY.md +223 -0

- LICENSE +23 -0

- MANIFEST.in +3 -0

- README.md +618 -0

- models.txt +2 -0

- pytest.ini +3 -0

- requirements.txt +8 -0

- src/open_clip/__init__.py +18 -0

- src/open_clip/coca_model.py +582 -0

- src/open_clip/constants.py +11 -0

- src/open_clip/convert.py +206 -0

- src/open_clip/factory.py +586 -0

- src/open_clip/hf_configs.py +67 -0

- src/open_clip/hf_model.py +193 -0

- src/open_clip/loss.py +447 -0

- src/open_clip/model.py +919 -0

- src/open_clip/model_configs/EVA01-g-14-plus.json +18 -0

- src/open_clip/model_configs/EVA01-g-14.json +18 -0

- src/open_clip/model_configs/EVA02-B-16.json +18 -0

- src/open_clip/model_configs/EVA02-E-14-plus.json +18 -0

- src/open_clip/model_configs/EVA02-E-14.json +18 -0

- src/open_clip/model_configs/EVA02-L-14-336.json +18 -0

- src/open_clip/model_configs/EVA02-L-14.json +18 -0

- src/open_clip/model_configs/MobileCLIP-B.json +21 -0

- src/open_clip/model_configs/MobileCLIP-S1.json +21 -0

- src/open_clip/model_configs/MobileCLIP-S2.json +21 -0

- src/open_clip/model_configs/RN101-quickgelu.json +22 -0

- src/open_clip/model_configs/RN101.json +21 -0

- src/open_clip/model_configs/RN50-quickgelu.json +22 -0

- src/open_clip/model_configs/RN50.json +21 -0

- src/open_clip/model_configs/RN50x16-quickgelu.json +22 -0

- src/open_clip/model_configs/RN50x16.json +21 -0

- src/open_clip/model_configs/RN50x4-quickgelu.json +22 -0

- src/open_clip/model_configs/RN50x4.json +21 -0

- src/open_clip/model_configs/RN50x64-quickgelu.json +22 -0

- src/open_clip/model_configs/RN50x64.json +21 -0

- src/open_clip/model_configs/ViT-B-16-SigLIP-256.json +29 -0

- src/open_clip/model_configs/ViT-B-16-SigLIP-384.json +29 -0

- src/open_clip/model_configs/ViT-B-16-SigLIP-512.json +29 -0

- src/open_clip/model_configs/ViT-B-16-SigLIP-i18n-256.json +29 -0

- src/open_clip/model_configs/ViT-B-16-SigLIP.json +29 -0

- src/open_clip/model_configs/ViT-B-16-SigLIP2-256.json +32 -0

- src/open_clip/model_configs/ViT-B-16-SigLIP2-384.json +32 -0

- src/open_clip/model_configs/ViT-B-16-SigLIP2-512.json +32 -0

.girattributes

ADDED

@@ -0,0 +1,2 @@

+*.py linguist-language=python
+*.ipynb linguist-documentation

.github/workflows/ci.yml

ADDED

@@ -0,0 +1,121 @@

+name: Continuous integration
+
+on:
+  push:
+    branches:
+    - main
+    paths-ignore:
+    - '**.md'
+    - 'CITATION.cff'
+    - 'LICENSE'
+    - '.gitignore'
+    - 'docs/**'
+  pull_request:
+    branches:
+    - main
+    paths-ignore:
+    - '**.md'
+    - 'CITATION.cff'
+    - 'LICENSE'
+    - '.gitignore'
+    - 'docs/**'
+  workflow_dispatch:
+    inputs:
+      manual_revision_reference:
+        required: false
+        type: string
+      manual_revision_test:
+        required: false
+        type: string
+
+env:
+  REVISION_REFERENCE: v2.8.2
+  #9d31b2ec4df6d8228f370ff20c8267ec6ba39383 earliest compatible v2.7.0 + pretrained_hf param
+
+jobs:
+  Tests:
+    strategy:
+      matrix:
+        os: [ ubuntu-latest ] #, macos-latest ]
+        python: [ 3.8 ]
+        job_num: [ 4 ]
+        job: [ 1, 2, 3, 4 ]
+    runs-on: ${{ matrix.os }}
+    steps:
+    - uses: actions/checkout@v3
+      with:
+        fetch-depth: 0
+        ref: ${{ inputs.manual_revision_test }}
+    - name: Set up Python ${{ matrix.python }}
+      id: pythonsetup
+      uses: actions/setup-python@v4
+      with:
+        python-version: ${{ matrix.python }}
+    - name: Venv cache
+      id: venv-cache
+      uses: actions/cache@v3
+      with:
+        path: .env
+        key: venv-${{ matrix.os }}-${{ steps.pythonsetup.outputs.python-version }}-${{ hashFiles('requirements*') }}
+    - name: Pytest durations cache
+      uses: actions/cache@v3
+      with:
+        path: .test_durations
+        key: test_durations-${{ matrix.os }}-${{ steps.pythonsetup.outputs.python-version }}-${{ matrix.job }}-${{ github.run_id }}
+        restore-keys: test_durations-0-
+    - name: Setup
+      if: steps.venv-cache.outputs.cache-hit != 'true'
+      run: |
+        python3 -m venv .env
+        source .env/bin/activate
+        pip install -e .[test]
+    - name: Prepare test data
+      run: |
+        source .env/bin/activate
+        python -m pytest \
+          --quiet --co \
+          --splitting-algorithm least_duration \
+          --splits ${{ matrix.job_num }} \
+          --group ${{ matrix.job }} \
+          -m regression_test \
+          tests \
+          | head -n -2 | grep -Po 'test_inference_with_data\[\K[^]]*(?=-False]|-True])' \
+          > models_gh_runner.txt
+        if [ -n "${{ inputs.manual_revision_reference }}" ]; then
+          REVISION_REFERENCE=${{ inputs.manual_revision_reference }}
+        fi
+        python tests/util_test.py \
+          --save_model_list models_gh_runner.txt \
+          --model_list models_gh_runner.txt \
+          --git_revision $REVISION_REFERENCE
+    - name: Unit tests
+      run: |
+        source .env/bin/activate
+        if [[ -f .test_durations ]]
+        then
+          cp .test_durations durations_1
+          mv .test_durations durations_2
+        fi
+        python -m pytest \
+          -x -s -v \
+          --splitting-algorithm least_duration \
+          --splits ${{ matrix.job_num }} \
+          --group ${{ matrix.job }} \
+          --store-durations \
+          --durations-path durations_1 \
+          --clean-durations \
+          -m "not regression_test" \
+          tests
+        OPEN_CLIP_TEST_REG_MODELS=models_gh_runner.txt python -m pytest \
+          -x -s -v \
+          --store-durations \
+          --durations-path durations_2 \
+          --clean-durations \
+          -m "regression_test" \
+          tests
+        jq -s -S 'add' durations_* > .test_durations
+    - name: Collect pytest durations
+      uses: actions/upload-artifact@v4
+      with:
+        name: pytest_durations_${{ matrix.os }}-${{ matrix.python }}-${{ matrix.job }}
+        path: .test_durations
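
Two shell one-liners in this workflow do most of the data handling: the `grep -Po` call in "Prepare test data" pulls model names out of the collected pytest ids, and `jq -s -S 'add'` in "Unit tests" merges the two duration files into one. A minimal Python sketch of both is given below to make their behavior easier to follow. The function names and the sample test ids are hypothetical and are not part of the repository; the regular expression is the workflow's own, with `\K` replaced by a capture group.

```python
import re

# Same pattern as the grep -Po call: the capture group stands in for \K, and the
# lookahead keeps the trailing "-False]" / "-True]" suffix out of the result.
_MODEL_ID = re.compile(r"test_inference_with_data\[([^]]*)(?=-False]|-True])")


def extract_model_names(collected_lines):
    """Return the model name from every matching pytest id, in input order."""
    names = []
    for line in collected_lines:
        names.extend(_MODEL_ID.findall(line))
    return names


def merge_durations(*duration_maps):
    """Shallow-merge duration dicts; later maps win, keys come out sorted.

    This mirrors `jq -s -S 'add'` applied to several JSON objects.
    """
    merged = {}
    for durations in duration_maps:
        merged.update(durations)
    return dict(sorted(merged.items()))
```

Lines that do not contain a `test_inference_with_data[...]` id simply produce no match, which is why the shell pipeline can feed the whole collection output through the filter.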

.github/workflows/clear-cache.yml

ADDED

@@ -0,0 +1,29 @@

+name: Clear cache
+
+on:
+  workflow_dispatch:
+
+permissions:
+  actions: write
+
+jobs:
+  clear-cache:
+    runs-on: ubuntu-latest
+    steps:
+      - name: Clear cache
+        uses: actions/github-script@v6
+        with:
+          script: |
+            const caches = await github.rest.actions.getActionsCacheList({
+              owner: context.repo.owner,
+              repo: context.repo.repo,
+            })
+            for (const cache of caches.data.actions_caches) {
+              console.log(cache)
+              await github.rest.actions.deleteActionsCacheById({
+                owner: context.repo.owner,
+                repo: context.repo.repo,
+                cache_id: cache.id,
+              })
+            }
+
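
The script above lists caches once, without pagination parameters, and then deletes each entry it received. Assuming the list endpoint returns results in pages, a repository with many caches may need more than one run to be fully cleared. The sketch below shows one way to collect every cache id across pages before deleting. It is only an illustration: `list_page` and `delete` are hypothetical callables standing in for the list and delete REST calls, and the page size of 100 is an assumption.

```python
from typing import Callable, Dict, List


def collect_cache_ids(
    list_page: Callable[[int, int], List[Dict]], per_page: int = 100
) -> List[int]:
    """Walk 1-based pages until a short page signals the end of the listing."""
    ids: List[int] = []
    page = 1
    while True:
        caches = list_page(page, per_page)
        ids.extend(cache["id"] for cache in caches)
        if len(caches) < per_page:
            return ids
        page += 1


def clear_caches(
    list_page: Callable[[int, int], List[Dict]],
    delete: Callable[[int], None],
    per_page: int = 100,
) -> int:
    """Delete every listed cache and return how many were deleted."""
    ids = collect_cache_ids(list_page, per_page)
    for cache_id in ids:
        delete(cache_id)
    return len(ids)
```

Collecting all ids first keeps the page numbering stable, since deleting entries while paging through the list could shift later results onto earlier pages.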

.github/workflows/python-publish.yml

ADDED

@@ -0,0 +1,37 @@

+name: Release
+
+on:
+  push:
+    branches:
+      - main
+jobs:
+  deploy:
+    runs-on: ubuntu-latest
+    steps:
+    - uses: actions/checkout@v2
+    - uses: actions-ecosystem/action-regex-match@v2
+      id: regex-match
+      with:
+        text: ${{ github.event.head_commit.message }}
+        regex: '^Release ([^ ]+)'
+    - name: Set up Python
+      uses: actions/setup-python@v2
+      with:
+        python-version: '3.8'
+    - name: Install dependencies
+      run: |
+        python -m pip install --upgrade pip
+        pip install setuptools wheel twine build
+    - name: Release
+      if: ${{ steps.regex-match.outputs.match != '' }}
+      uses: softprops/action-gh-release@v1
+      with:
+        tag_name: v${{ steps.regex-match.outputs.group1 }}
+    - name: Build and publish
+      if: ${{ steps.regex-match.outputs.match != '' }}
+      env:
+        TWINE_USERNAME: __token__
+        TWINE_PASSWORD: ${{ secrets.PYPI_PASSWORD }}
+      run: |
+        python -m build
+        twine upload dist/*
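
The release steps only run when the head commit message matches `^Release ([^ ]+)`, and the first capture group becomes the tag name with a `v` prefix. A small Python sketch of that rule follows; `release_tag` is a hypothetical helper written for illustration, and it assumes Python's `re` treats this pattern the same way the regex-match action does.

```python
import re
from typing import Optional

# The pattern from the workflow: the message must start with "Release "
# followed by a run of non-space characters, which becomes the version.
RELEASE_RE = re.compile(r"^Release ([^ ]+)")


def release_tag(commit_message: str) -> Optional[str]:
    """Return the tag the workflow would create, or None if no release is triggered."""
    match = RELEASE_RE.match(commit_message)
    if match is None:
        return None
    return "v" + match.group(1)
```

Under this sketch, `release_tag("Release 2.24.0")` gives `"v2.24.0"` and an ordinary commit message gives `None`. Because `[^ ]` excludes only the space character, a newline directly after the version would be captured as part of the group, so a single-line release commit message is the safer choice.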

.gitignore

ADDED

@@ -0,0 +1,153 @@

+**/logs/
+**/wandb/
+models/
+features/
+results/
+
+tests/data/
+*.pt
+
+# Byte-compiled / optimized / DLL files
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+downloads/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+wheels/
+pip-wheel-metadata/
+share/python-wheels/
+*.egg-info/
+.installed.cfg
+*.egg
+MANIFEST
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.nox/
+.coverage
+.coverage.*
+.cache
+nosetests.xml
+coverage.xml
+*.cover
+*.py,cover
+.hypothesis/
+.pytest_cache/
+
+# Translations
+*.mo
+*.pot
+
+# Django stuff:
+*.log
+local_settings.py
+db.sqlite3
+db.sqlite3-journal
+
+# Flask stuff:
+instance/
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# Sphinx documentation
+docs/_build/
+
+# PyBuilder
+target/
+
+# Jupyter Notebook
+.ipynb_checkpoints
+
+# IPython
+profile_default/
+ipython_config.py
+
+# pyenv
+.python-version
+
+# pipenv
+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
+# However, in case of collaboration, if having platform-specific dependencies or dependencies
+# having no cross-platform support, pipenv may install dependencies that don't work, or not
+# install all needed dependencies.
+#Pipfile.lock
+
+# PEP 582; used by e.g. github.com/David-OConnor/pyflow
+__pypackages__/
+
+# Celery stuff
+celerybeat-schedule
+celerybeat.pid
+
+# SageMath parsed files
+*.sage.py
+
+# Environments
+.env
+.venv
+env/
+venv/
+ENV/
+env.bak/
+venv.bak/
+
+# Spyder project settings
+.spyderproject
+.spyproject
+
+# Rope project settings
+.ropeproject
+
+# mkdocs documentation
+/site
+
+# mypy
+.mypy_cache/
+.dmypy.json
+dmypy.json
+
+# Pyre type checker
+.pyre/
+sync.sh
+gpu1sync.sh
+.idea
+*.pdf
+**/._*
+**/*DS_*
+**.jsonl
+src/sbatch
+src/misc
+.vscode
+src/debug
+core.*
+
+# Allow
+!src/evaluation/misc/results_dbs/*

CITATION.cff

ADDED

@@ -0,0 +1,33 @@

+cff-version: 1.1.0
+message: If you use this software, please cite it as below.
+authors:
+  - family-names: Ilharco
+    given-names: Gabriel
+  - family-names: Wortsman
+    given-names: Mitchell
+  - family-names: Wightman
+    given-names: Ross
+  - family-names: Gordon
+    given-names: Cade
+  - family-names: Carlini
+    given-names: Nicholas
+  - family-names: Taori
+    given-names: Rohan
+  - family-names: Dave
+    given-names: Achal
+  - family-names: Shankar
+    given-names: Vaishaal
+  - family-names: Namkoong
+    given-names: Hongseok
+  - family-names: Miller
+    given-names: John
+  - family-names: Hajishirzi
+    given-names: Hannaneh
+  - family-names: Farhadi
+    given-names: Ali
+  - family-names: Schmidt
+    given-names: Ludwig
+title: OpenCLIP
+version: v0.1
+doi: 10.5281/zenodo.5143773
+date-released: 2021-07-28

HISTORY.md

ADDED

@@ -0,0 +1,223 @@

+## 2.24.0
+
+* Fix missing space in error message
+* use model flag for normalizing embeddings
+* init logit_bias for non siglip pretrained models
+* Fix logit_bias load_checkpoint addition
+* Make CoCa model match CLIP models for logit scale/bias init
+* Fix missing return of "logit_bias" in CoCa.forward
+* Add NLLB-CLIP with SigLIP models
+* Add get_logits method and NLLB tokenizer
+* Remove the empty file src/open_clip/generation_utils.py
+* Update params.py: "BatchNorm" -> "LayerNorm" in the description string for "--lock-text-freeze-layer-norm"
+
+## 2.23.0
+
+* Add CLIPA-v2 models
+* Add SigLIP models
+* Add MetaCLIP models
+* Add NLLB-CLIP models
+* CLIPA train code
+* Minor changes/fixes
+* Remove protobuf version limit
+* Stop checking model name when loading CoCa models
+* Log native wandb step
+* Use bool instead of long masks
+
+## 2.21.0
+
+* Add SigLIP loss + training support
+* Add more DataComp models (B/16, B/32 and B/32@256)
+* Update default num workers
+* Update CoCa generation for `transformers>=4.31`
+* PyTorch 2.0 `state_dict()` compatibility fix for compiled models
+* Fix padding in `ResizeMaxSize`
+* Convert JIT model on state dict load for `pretrained='filename…'`
+* Other minor changes and fixes (typos, README, dependencies, CI)
+
+## 2.20.0
+
+* Add EVA models
+* Support serial worker training
+* Fix Python 3.7 compatibility
+
+## 2.19.0
+
+* Add DataComp models
+
+## 2.18.0
+
+* Enable int8 inference without `.weight` attribute
+
+## 2.17.2
+
+* Update push_to_hf_hub
+
+## 2.17.0
+
+* Add int8 support
+* Update notebook demo
+* Refactor zero-shot classification code
+
+## 2.16.2
+
+* Fixes for context_length and vocab_size attributes
+
+## 2.16.1
+
+* Fixes for context_length and vocab_size attributes
+* Fix --train-num-samples logic
+* Add HF BERT configs for PubMed CLIP model
+
+## 2.16.0
+
+* Add improved g-14 weights
+* Update protobuf version
+
+## 2.15.0
+
+* Add convnext_xxlarge weights
+* Fixed import in readme
+* Add samples per second per gpu logging
+* Fix slurm example
+
+## 2.14.0
+
+* Move dataset mixtures logic to shard level
+* Fix CoCa accum-grad training
+* Safer transformers import guard
+* get_labels refactoring
+
+## 2.13.0
+
+* Add support for dataset mixtures with different sampling weights
+* Make transformers optional again
+
+## 2.12.0
+
+* Updated convnext configs for consistency
+* Added input_patchnorm option
+* Clean and improve CoCa generation
+* Support model distillation
+* Add ConvNeXt-Large 320x320 fine-tune weights
+
+## 2.11.1
+
+* Make transformers optional
+* Add MSCOCO CoCa finetunes to pretrained models
+
+## 2.11.0
+
+* coca support and weights
+* ConvNeXt-Large weights
+
+## 2.10.1
+
+* `hf-hub:org/model_id` support for loading models w/ config and weights in Hugging Face Hub
+
+## 2.10.0
+
+* Added a ViT-bigG-14 model.
+* Added an up-to-date example slurm script for large training jobs.
+* Added a option to sync logs and checkpoints to S3 during training.
+* New options for LR schedulers, constant and constant with cooldown
+* Fix wandb autoresuming when resume is not set
+* ConvNeXt `base` & `base_w` pretrained models added
+* `timm-` model prefix removed from configs
+* `timm` augmentation + regularization (dropout / drop-path) supported
+
+## 2.9.3
+
+* Fix wandb collapsing multiple parallel runs into a single one
+
+## 2.9.2
+
+* Fix braceexpand memory explosion for complex webdataset urls
+
+## 2.9.1
+
+* Fix release
+
+## 2.9.0
+
+* Add training feature to auto-resume from the latest checkpoint on restart via `--resume latest`
+* Allow webp in webdataset
+* Fix logging for number of samples when using gradient accumulation
+* Add model configs for convnext xxlarge
+
+## 2.8.2
+
+* wrapped patchdropout in a torch.nn.Module
+
+## 2.8.1
+
+* relax protobuf dependency
+* override the default patch dropout value in 'vision_cfg'
+
+## 2.8.0
+
+* better support for HF models
+* add support for gradient accumulation
+* CI fixes
+* add support for patch dropout
+* add convnext configs
+
+
+## 2.7.0
+
+* add multilingual H/14 xlm roberta large
+
+## 2.6.1
+
+* fix setup.py _read_reqs
+
+## 2.6.0
+
+* Make openclip training usable from pypi.
+* Add xlm roberta large vit h 14 config.
+
+## 2.5.0
+
+* pretrained B/32 xlm roberta base: first multilingual clip trained on laion5B
+* pretrained B/32 roberta base: first clip trained using an HF text encoder
+
+## 2.4.1
+
+* Add missing hf_tokenizer_name in CLIPTextCfg.
+
+## 2.4.0
+
+* Fix #211, missing RN50x64 config. Fix type of dropout param for ResNet models
+* Bring back LayerNorm impl that casts to input for non bf16/fp16
+* zero_shot.py: set correct tokenizer based on args
+* training/params.py: remove hf params and get them from model config
+
+## 2.3.1
+
+* Implement grad checkpointing for hf model.
+* custom_text: True if hf_model_name is set
+* Disable hf tokenizer parallelism
+
+## 2.3.0
+
+* Generalizable Text Transformer with HuggingFace Models (@iejMac)
+
+## 2.2.0
+
+* Support for custom text tower
+* Add checksum verification for pretrained model weights
+
+## 2.1.0
+
+* lot including sota models, bfloat16 option, better loading, better metrics
+
+## 1.2.0
+
+* ViT-B/32 trained on Laion2B-en
+* add missing openai RN50x64 model
+
+## 1.1.1
+
+* ViT-B/16+
+* Add grad checkpointing support
+* more robust data loader

LICENSE

ADDED

@@ -0,0 +1,23 @@

+Copyright (c) 2012-2021 Gabriel Ilharco, Mitchell Wortsman,
+Nicholas Carlini, Rohan Taori, Achal Dave, Vaishaal Shankar,
+John Miller, Hongseok Namkoong, Hannaneh Hajishirzi, Ali Farhadi,
+Ludwig Schmidt
+
+Permission is hereby granted, free of charge, to any person obtaining
+a copy of this software and associated documentation files (the
+"Software"), to deal in the Software without restriction, including
+without limitation the rights to use, copy, modify, merge, publish,
+distribute, sublicense, and/or sell copies of the Software, and to
+permit persons to whom the Software is furnished to do so, subject to
+the following conditions:
+
+The above copyright notice and this permission notice shall be
+included in all copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,
+EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF
+MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND
+NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE
+LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION
+OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION
+WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

MANIFEST.in

ADDED

@@ -0,0 +1,3 @@

+include src/open_clip/bpe_simple_vocab_16e6.txt.gz
+include src/open_clip/model_configs/*.json
+

README.md

ADDED

|

@@ -0,0 +1,618 @@


| 1 |

+

# OpenCLIP

|

| 2 |

+

|

| 3 |

+

[[Paper]](https://arxiv.org/abs/2212.07143) [[Citations]](#citing) [[Clip Colab]](https://colab.research.google.com/github/mlfoundations/open_clip/blob/master/docs/Interacting_with_open_clip.ipynb) [[Coca Colab]](https://colab.research.google.com/github/mlfoundations/open_clip/blob/master/docs/Interacting_with_open_coca.ipynb)

|

| 4 |

+

[PyPI package](https://pypi.python.org/pypi/open_clip_torch)

|

| 5 |

+

|

| 6 |

+

Welcome to an open source implementation of OpenAI's [CLIP](https://arxiv.org/abs/2103.00020) (Contrastive Language-Image Pre-training).

|

| 7 |

+

|

| 8 |

+

Using this codebase, we have trained several models on a variety of data sources and compute budgets, ranging from [small-scale experiments](docs/LOW_ACC.md) to larger runs including models trained on datasets such as [LAION-400M](https://arxiv.org/abs/2111.02114), [LAION-2B](https://arxiv.org/abs/2210.08402) and [DataComp-1B](https://arxiv.org/abs/2304.14108).

|

| 9 |

+

Many of our models and their scaling properties are studied in detail in the paper [reproducible scaling laws for contrastive language-image learning](https://arxiv.org/abs/2212.07143).

|

| 10 |

+

Some of the best models we've trained and their zero-shot ImageNet-1k accuracy are shown below, along with the ViT-L model trained by OpenAI and other state-of-the-art open source alternatives (all can be loaded via OpenCLIP).

|

| 11 |

+

We provide more details about our full collection of pretrained models [here](docs/PRETRAINED.md), and zero-shot results for 38 datasets [here](docs/openclip_results.csv).

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

| Model | Training data | Resolution | # of samples seen | ImageNet zero-shot acc. |

|

| 16 |

+

| -------- | ------- | ------- | ------- | ------- |

|

| 17 |

+

| ConvNext-Base | LAION-2B | 256px | 13B | 71.5% |

|

| 18 |

+

| ConvNext-Large | LAION-2B | 320px | 29B | 76.9% |

|

| 19 |

+

| ConvNext-XXLarge | LAION-2B | 256px | 34B | 79.5% |

|

| 20 |

+

| ViT-B/32 | DataComp-1B | 256px | 34B | 72.8% |

|

| 21 |

+

| ViT-B/16 | DataComp-1B | 224px | 13B | 73.5% |

|

| 22 |

+

| ViT-L/14 | LAION-2B | 224px | 32B | 75.3% |

|

| 23 |

+

| ViT-H/14 | LAION-2B | 224px | 32B | 78.0% |

|

| 24 |

+

| ViT-L/14 | DataComp-1B | 224px | 13B | 79.2% |

|

| 25 |

+

| ViT-G/14 | LAION-2B | 224px | 34B | 80.1% |

|

| 26 |

+

| | | | | |

|

| 27 |

+

| ViT-L/14-quickgelu [(Original CLIP)](https://arxiv.org/abs/2103.00020) | WIT | 224px | 13B | 75.5% |

|

| 28 |

+

| ViT-SO400M/14 [(SigLIP)](https://arxiv.org/abs/2303.15343) | WebLI | 224px | 45B | 82.0% |

|

| 29 |

+

| ViT-L/14 [(DFN)](https://arxiv.org/abs/2309.17425) | DFN-2B | 224px | 39B | 82.2% |

|

| 30 |

+

| ViT-SO400M-14-SigLIP-384 [(SigLIP)](https://arxiv.org/abs/2303.15343) | WebLI | 384px | 45B | 83.1% |

|

| 31 |

+

| ViT-H/14-quickgelu [(DFN)](https://arxiv.org/abs/2309.17425) | DFN-5B | 224px | 39B | 83.4% |

|

| 32 |

+

| ViT-H-14-378-quickgelu [(DFN)](https://arxiv.org/abs/2309.17425) | DFN-5B | 378px | 44B | 84.4% |

|

| 33 |

+

|

| 34 |

+

Model cards with additional model-specific details can be found on the Hugging Face Hub under the OpenCLIP library tag: https://huggingface.co/models?library=open_clip.

|

| 35 |

+

|

| 36 |

+

If you found this repository useful, please consider [citing](#citing).

|

| 37 |

+

We welcome anyone to submit an issue or send an email if you have any other requests or suggestions.

|

| 38 |

+

|

| 39 |

+

Note that portions of `src/open_clip/` modelling and tokenizer code are adaptations of OpenAI's official [repository](https://github.com/openai/CLIP).

|

| 40 |

+

|

| 41 |

+

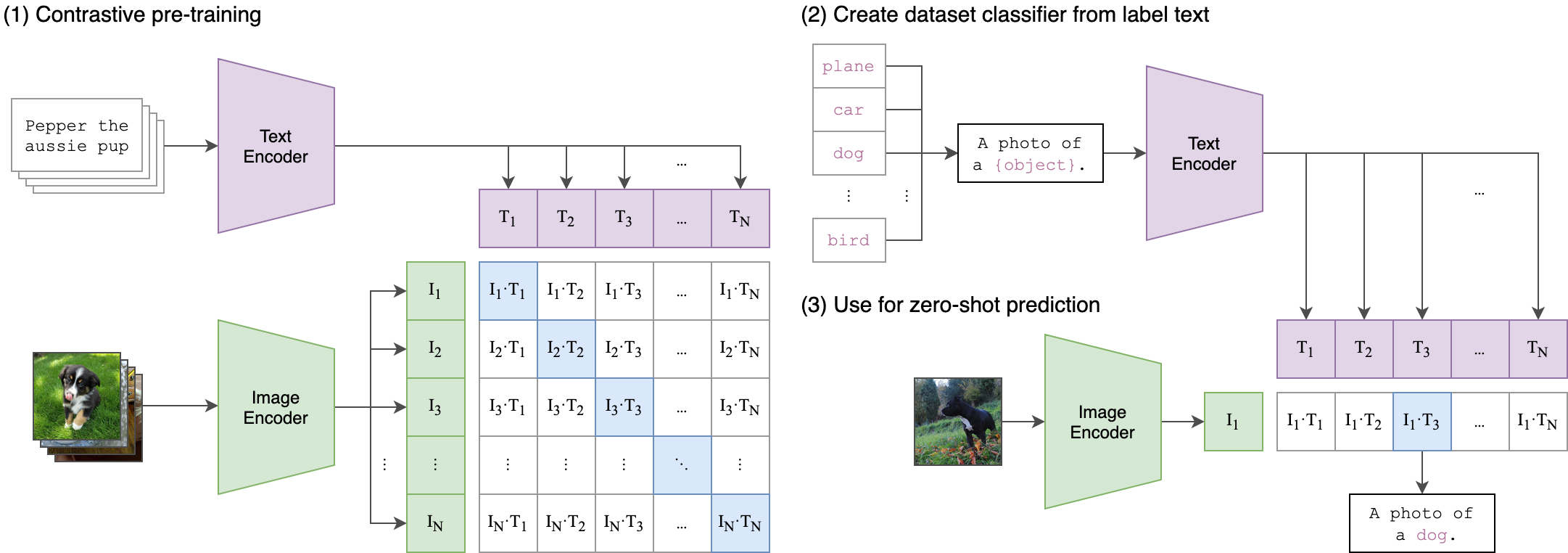

## Approach

|

| 42 |

+

|

| 43 |

+

|  |

|

| 44 |

+

|:--:|

|

| 45 |

+

| Image Credit: https://github.com/openai/CLIP |

|

| 46 |

+

|

| 47 |

+

## Usage

|

| 48 |

+

|

| 49 |

+

```

|

| 50 |

+

pip install open_clip_torch

|

| 51 |

+

```

|

| 52 |

+

|

| 53 |

+

```python

|

| 54 |

+

import torch

|

| 55 |

+

from PIL import Image

|

| 56 |

+

import open_clip

|

| 57 |

+

|

| 58 |

+

model, _, preprocess = open_clip.create_model_and_transforms('ViT-B-32', pretrained='laion2b_s34b_b79k')

|

| 59 |

+

model.eval() # model in train mode by default, impacts some models with BatchNorm or stochastic depth active

|

| 60 |

+

tokenizer = open_clip.get_tokenizer('ViT-B-32')

|

| 61 |

+

|

| 62 |

+

image = preprocess(Image.open("docs/CLIP.png")).unsqueeze(0)

|

| 63 |

+

text = tokenizer(["a diagram", "a dog", "a cat"])

|

| 64 |

+

|

| 65 |

+

with torch.no_grad(), torch.autocast("cuda"):

|

| 66 |

+

    image_features = model.encode_image(image)

|

| 67 |

+

    text_features = model.encode_text(text)

|

| 68 |

+

    image_features /= image_features.norm(dim=-1, keepdim=True)

|

| 69 |

+

    text_features /= text_features.norm(dim=-1, keepdim=True)

|

| 70 |

+

|

| 71 |

+

text_probs = (100.0 * image_features @ text_features.T).softmax(dim=-1)

|

| 72 |

+

|

| 73 |

+

print("Label probs:", text_probs) # prints: [[1., 0., 0.]]

|

| 74 |

+

```

|
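The last step of the usage snippet above (normalize the features, scale the cosine similarities by 100, apply a softmax) can be illustrated without loading a model. The following is a minimal standard-library sketch, not OpenCLIP code: the function names `l2_normalize`, `softmax`, and `zero_shot_probs` and the three-dimensional toy embeddings are hypothetical and chosen only for illustration.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length, like `x /= x.norm(dim=-1, keepdim=True)`."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_probs(image_vec, text_vecs, scale=100.0):
    """Softmax over scaled cosine similarities between one image and several texts."""
    img = l2_normalize(image_vec)
    logits = []
    for text_vec in text_vecs:
        txt = l2_normalize(text_vec)
        cosine = sum(a * b for a, b in zip(img, txt))
        logits.append(scale * cosine)
    return softmax(logits)


# Toy embeddings (hypothetical values, not produced by any model).
image_embedding = [0.9, 0.1, 0.0]
text_embeddings = [
    [1.0, 0.0, 0.0],  # stands in for "a diagram"
    [0.0, 1.0, 0.0],  # stands in for "a dog"
    [0.0, 0.0, 1.0],  # stands in for "a cat"
]

probs = zero_shot_probs(image_embedding, text_embeddings)
print("Label probs:", [round(p, 3) for p in probs])
```

Because the similarities are multiplied by 100 before the softmax, even a modest gap in cosine similarity pushes almost all of the probability mass onto the best-matching caption, which is why the README example prints a near one-hot result.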

| 75 |

+

|

| 76 |

+