diff --git a/.girattributes b/.girattributes

new file mode 100644

index 0000000000000000000000000000000000000000..73a87ef1d20621efd908d00cee926546c8d46157

--- /dev/null

+++ b/.girattributes

@@ -0,0 +1,2 @@

+*.py linguist-language=python

+*.ipynb linguist-documentation

\ No newline at end of file

diff --git a/.github/workflows/ci.yml b/.github/workflows/ci.yml

new file mode 100644

index 0000000000000000000000000000000000000000..02972c9ec3ad607f6180e4a39b40687f7dfaa7ad

--- /dev/null

+++ b/.github/workflows/ci.yml

@@ -0,0 +1,121 @@

+name: Continuous integration

+

+on:

+ push:

+ branches:

+ - main

+ paths-ignore:

+ - '**.md'

+ - 'CITATION.cff'

+ - 'LICENSE'

+ - '.gitignore'

+ - 'docs/**'

+ pull_request:

+ branches:

+ - main

+ paths-ignore:

+ - '**.md'

+ - 'CITATION.cff'

+ - 'LICENSE'

+ - '.gitignore'

+ - 'docs/**'

+ workflow_dispatch:

+ inputs:

+ manual_revision_reference:

+ required: false

+ type: string

+ manual_revision_test:

+ required: false

+ type: string

+

+env:

+ REVISION_REFERENCE: v2.8.2

+ #9d31b2ec4df6d8228f370ff20c8267ec6ba39383 earliest compatible v2.7.0 + pretrained_hf param

+

+jobs:

+ Tests:

+ strategy:

+ matrix:

+ os: [ ubuntu-latest ] #, macos-latest ]

+ python: [ 3.8 ]

+ job_num: [ 4 ]

+ job: [ 1, 2, 3, 4 ]

+ runs-on: ${{ matrix.os }}

+ steps:

+ - uses: actions/checkout@v3

+ with:

+ fetch-depth: 0

+ ref: ${{ inputs.manual_revision_test }}

+ - name: Set up Python ${{ matrix.python }}

+ id: pythonsetup

+ uses: actions/setup-python@v4

+ with:

+ python-version: ${{ matrix.python }}

+ - name: Venv cache

+ id: venv-cache

+ uses: actions/cache@v3

+ with:

+ path: .env

+ key: venv-${{ matrix.os }}-${{ steps.pythonsetup.outputs.python-version }}-${{ hashFiles('requirements*') }}

+ - name: Pytest durations cache

+ uses: actions/cache@v3

+ with:

+ path: .test_durations

+ key: test_durations-${{ matrix.os }}-${{ steps.pythonsetup.outputs.python-version }}-${{ matrix.job }}-${{ github.run_id }}

+ restore-keys: test_durations-0-

+ - name: Setup

+ if: steps.venv-cache.outputs.cache-hit != 'true'

+ run: |

+ python3 -m venv .env

+ source .env/bin/activate

+ pip install -e .[test]

+ - name: Prepare test data

+ run: |

+ source .env/bin/activate

+ python -m pytest \

+ --quiet --co \

+ --splitting-algorithm least_duration \

+ --splits ${{ matrix.job_num }} \

+ --group ${{ matrix.job }} \

+ -m regression_test \

+ tests \

+ | head -n -2 | grep -Po 'test_inference_with_data\[\K[^]]*(?=-False]|-True])' \

+ > models_gh_runner.txt

+ if [ -n "${{ inputs.manual_revision_reference }}" ]; then

+ REVISION_REFERENCE=${{ inputs.manual_revision_reference }}

+ fi

+ python tests/util_test.py \

+ --save_model_list models_gh_runner.txt \

+ --model_list models_gh_runner.txt \

+ --git_revision $REVISION_REFERENCE

+ - name: Unit tests

+ run: |

+ source .env/bin/activate

+ if [[ -f .test_durations ]]

+ then

+ cp .test_durations durations_1

+ mv .test_durations durations_2

+ fi

+ python -m pytest \

+ -x -s -v \

+ --splitting-algorithm least_duration \

+ --splits ${{ matrix.job_num }} \

+ --group ${{ matrix.job }} \

+ --store-durations \

+ --durations-path durations_1 \

+ --clean-durations \

+ -m "not regression_test" \

+ tests

+ OPEN_CLIP_TEST_REG_MODELS=models_gh_runner.txt python -m pytest \

+ -x -s -v \

+ --store-durations \

+ --durations-path durations_2 \

+ --clean-durations \

+ -m "regression_test" \

+ tests

+ jq -s -S 'add' durations_* > .test_durations

+ - name: Collect pytest durations

+ uses: actions/upload-artifact@v4

+ with:

+ name: pytest_durations_${{ matrix.os }}-${{ matrix.python }}-${{ matrix.job }}

+ path: .test_durations

diff --git a/.github/workflows/clear-cache.yml b/.github/workflows/clear-cache.yml

new file mode 100644

index 0000000000000000000000000000000000000000..22a1a24618ed339cb429dcce0d6969299fb49cac

--- /dev/null

+++ b/.github/workflows/clear-cache.yml

@@ -0,0 +1,29 @@

+name: Clear cache

+

+on:

+ workflow_dispatch:

+

+permissions:

+ actions: write

+

+jobs:

+ clear-cache:

+ runs-on: ubuntu-latest

+ steps:

+ - name: Clear cache

+ uses: actions/github-script@v6

+ with:

+ script: |

+ const caches = await github.rest.actions.getActionsCacheList({

+ owner: context.repo.owner,

+ repo: context.repo.repo,

+ })

+ for (const cache of caches.data.actions_caches) {

+ console.log(cache)

+ await github.rest.actions.deleteActionsCacheById({

+ owner: context.repo.owner,

+ repo: context.repo.repo,

+ cache_id: cache.id,

+ })

+ }

+

diff --git a/.github/workflows/python-publish.yml b/.github/workflows/python-publish.yml

new file mode 100644

index 0000000000000000000000000000000000000000..017ba074c537281d3158a373cfa305acbb736289

--- /dev/null

+++ b/.github/workflows/python-publish.yml

@@ -0,0 +1,37 @@

+name: Release

+

+on:

+ push:

+ branches:

+ - main

+jobs:

+ deploy:

+ runs-on: ubuntu-latest

+ steps:

+ - uses: actions/checkout@v2

+ - uses: actions-ecosystem/action-regex-match@v2

+ id: regex-match

+ with:

+ text: ${{ github.event.head_commit.message }}

+ regex: '^Release ([^ ]+)'

+ - name: Set up Python

+ uses: actions/setup-python@v2

+ with:

+ python-version: '3.8'

+ - name: Install dependencies

+ run: |

+ python -m pip install --upgrade pip

+ pip install setuptools wheel twine build

+ - name: Release

+ if: ${{ steps.regex-match.outputs.match != '' }}

+ uses: softprops/action-gh-release@v1

+ with:

+ tag_name: v${{ steps.regex-match.outputs.group1 }}

+ - name: Build and publish

+ if: ${{ steps.regex-match.outputs.match != '' }}

+ env:

+ TWINE_USERNAME: __token__

+ TWINE_PASSWORD: ${{ secrets.PYPI_PASSWORD }}

+ run: |

+ python -m build

+ twine upload dist/*

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..b880054ba3f8ad032b6fc110921e0772b2877cd9

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,153 @@

+**/logs/

+**/wandb/

+models/

+features/

+results/

+

+tests/data/

+*.pt

+

+# Byte-compiled / optimized / DLL files

+__pycache__/

+*.py[cod]

+*$py.class

+

+# C extensions

+*.so

+

+# Distribution / packaging

+.Python

+build/

+develop-eggs/

+dist/

+downloads/

+eggs/

+.eggs/

+lib/

+lib64/

+parts/

+sdist/

+var/

+wheels/

+pip-wheel-metadata/

+share/python-wheels/

+*.egg-info/

+.installed.cfg

+*.egg

+MANIFEST

+

+# PyInstaller

+# Usually these files are written by a python script from a template

+# before PyInstaller builds the exe, so as to inject date/other infos into it.

+*.manifest

+*.spec

+

+# Installer logs

+pip-log.txt

+pip-delete-this-directory.txt

+

+# Unit test / coverage reports

+htmlcov/

+.tox/

+.nox/

+.coverage

+.coverage.*

+.cache

+nosetests.xml

+coverage.xml

+*.cover

+*.py,cover

+.hypothesis/

+.pytest_cache/

+

+# Translations

+*.mo

+*.pot

+

+# Django stuff:

+*.log

+local_settings.py

+db.sqlite3

+db.sqlite3-journal

+

+# Flask stuff:

+instance/

+.webassets-cache

+

+# Scrapy stuff:

+.scrapy

+

+# Sphinx documentation

+docs/_build/

+

+# PyBuilder

+target/

+

+# Jupyter Notebook

+.ipynb_checkpoints

+

+# IPython

+profile_default/

+ipython_config.py

+

+# pyenv

+.python-version

+

+# pipenv

+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

+# However, in case of collaboration, if having platform-specific dependencies or dependencies

+# having no cross-platform support, pipenv may install dependencies that don't work, or not

+# install all needed dependencies.

+#Pipfile.lock

+

+# PEP 582; used by e.g. github.com/David-OConnor/pyflow

+__pypackages__/

+

+# Celery stuff

+celerybeat-schedule

+celerybeat.pid

+

+# SageMath parsed files

+*.sage.py

+

+# Environments

+.env

+.venv

+env/

+venv/

+ENV/

+env.bak/

+venv.bak/

+

+# Spyder project settings

+.spyderproject

+.spyproject

+

+# Rope project settings

+.ropeproject

+

+# mkdocs documentation

+/site

+

+# mypy

+.mypy_cache/

+.dmypy.json

+dmypy.json

+

+# Pyre type checker

+.pyre/

+sync.sh

+gpu1sync.sh

+.idea

+*.pdf

+**/._*

+**/*DS_*

+**.jsonl

+src/sbatch

+src/misc

+.vscode

+src/debug

+core.*

+

+# Allow

+!src/evaluation/misc/results_dbs/*

\ No newline at end of file

diff --git a/CITATION.cff b/CITATION.cff

new file mode 100644

index 0000000000000000000000000000000000000000..1072ddd3a6065bbf88346c2c1d6ce7681363fab8

--- /dev/null

+++ b/CITATION.cff

@@ -0,0 +1,33 @@

+cff-version: 1.1.0

+message: If you use this software, please cite it as below.

+authors:

+ - family-names: Ilharco

+ given-names: Gabriel

+ - family-names: Wortsman

+ given-names: Mitchell

+ - family-names: Wightman

+ given-names: Ross

+ - family-names: Gordon

+ given-names: Cade

+ - family-names: Carlini

+ given-names: Nicholas

+ - family-names: Taori

+ given-names: Rohan

+ - family-names: Dave

+ given-names: Achal

+ - family-names: Shankar

+ given-names: Vaishaal

+ - family-names: Namkoong

+ given-names: Hongseok

+ - family-names: Miller

+ given-names: John

+ - family-names: Hajishirzi

+ given-names: Hannaneh

+ - family-names: Farhadi

+ given-names: Ali

+ - family-names: Schmidt

+ given-names: Ludwig

+title: OpenCLIP

+version: v0.1

+doi: 10.5281/zenodo.5143773

+date-released: 2021-07-28

diff --git a/HISTORY.md b/HISTORY.md

new file mode 100644

index 0000000000000000000000000000000000000000..329452ddd172ba70aa713818b4e4001653840ba8

--- /dev/null

+++ b/HISTORY.md

@@ -0,0 +1,223 @@

+## 2.24.0

+

+* Fix missing space in error message

+* use model flag for normalizing embeddings

+* init logit_bias for non siglip pretrained models

+* Fix logit_bias load_checkpoint addition

+* Make CoCa model match CLIP models for logit scale/bias init

+* Fix missing return of "logit_bias" in CoCa.forward

+* Add NLLB-CLIP with SigLIP models

+* Add get_logits method and NLLB tokenizer

+* Remove the empty file src/open_clip/generation_utils.py

+* Update params.py: "BatchNorm" -> "LayerNorm" in the description string for "--lock-text-freeze-layer-norm"

+

+## 2.23.0

+

+* Add CLIPA-v2 models

+* Add SigLIP models

+* Add MetaCLIP models

+* Add NLLB-CLIP models

+* CLIPA train code

+* Minor changes/fixes

+ * Remove protobuf version limit

+ * Stop checking model name when loading CoCa models

+ * Log native wandb step

+ * Use bool instead of long masks

+

+## 2.21.0

+

+* Add SigLIP loss + training support

+* Add more DataComp models (B/16, B/32 and B/32@256)

+* Update default num workers

+* Update CoCa generation for `transformers>=4.31`

+* PyTorch 2.0 `state_dict()` compatibility fix for compiled models

+* Fix padding in `ResizeMaxSize`

+* Convert JIT model on state dict load for `pretrained='filename…'`

+* Other minor changes and fixes (typos, README, dependencies, CI)

+

+## 2.20.0

+

+* Add EVA models

+* Support serial worker training

+* Fix Python 3.7 compatibility

+

+## 2.19.0

+

+* Add DataComp models

+

+## 2.18.0

+

+* Enable int8 inference without `.weight` attribute

+

+## 2.17.2

+

+* Update push_to_hf_hub

+

+## 2.17.0

+

+* Add int8 support

+* Update notebook demo

+* Refactor zero-shot classification code

+

+## 2.16.2

+

+* Fixes for context_length and vocab_size attributes

+

+## 2.16.1

+

+* Fixes for context_length and vocab_size attributes

+* Fix --train-num-samples logic

+* Add HF BERT configs for PubMed CLIP model

+

+## 2.16.0

+

+* Add improved g-14 weights

+* Update protobuf version

+

+## 2.15.0

+

+* Add convnext_xxlarge weights

+* Fixed import in readme

+* Add samples per second per gpu logging

+* Fix slurm example

+

+## 2.14.0

+

+* Move dataset mixtures logic to shard level

+* Fix CoCa accum-grad training

+* Safer transformers import guard

+* get_labels refactoring

+

+## 2.13.0

+

+* Add support for dataset mixtures with different sampling weights

+* Make transformers optional again

+

+## 2.12.0

+

+* Updated convnext configs for consistency

+* Added input_patchnorm option

+* Clean and improve CoCa generation

+* Support model distillation

+* Add ConvNeXt-Large 320x320 fine-tune weights

+

+## 2.11.1

+

+* Make transformers optional

+* Add MSCOCO CoCa finetunes to pretrained models

+

+## 2.11.0

+

+* coca support and weights

+* ConvNeXt-Large weights

+

+## 2.10.1

+

+* `hf-hub:org/model_id` support for loading models w/ config and weights in Hugging Face Hub

+

+## 2.10.0

+

+* Added a ViT-bigG-14 model.

+* Added an up-to-date example slurm script for large training jobs.

+* Added a option to sync logs and checkpoints to S3 during training.

+* New options for LR schedulers, constant and constant with cooldown

+* Fix wandb autoresuming when resume is not set

+* ConvNeXt `base` & `base_w` pretrained models added

+* `timm-` model prefix removed from configs

+* `timm` augmentation + regularization (dropout / drop-path) supported

+

+## 2.9.3

+

+* Fix wandb collapsing multiple parallel runs into a single one

+

+## 2.9.2

+

+* Fix braceexpand memory explosion for complex webdataset urls

+

+## 2.9.1

+

+* Fix release

+

+## 2.9.0

+

+* Add training feature to auto-resume from the latest checkpoint on restart via `--resume latest`

+* Allow webp in webdataset

+* Fix logging for number of samples when using gradient accumulation

+* Add model configs for convnext xxlarge

+

+## 2.8.2

+

+* wrapped patchdropout in a torch.nn.Module

+

+## 2.8.1

+

+* relax protobuf dependency

+* override the default patch dropout value in 'vision_cfg'

+

+## 2.8.0

+

+* better support for HF models

+* add support for gradient accumulation

+* CI fixes

+* add support for patch dropout

+* add convnext configs

+

+

+## 2.7.0

+

+* add multilingual H/14 xlm roberta large

+

+## 2.6.1

+

+* fix setup.py _read_reqs

+

+## 2.6.0

+

+* Make openclip training usable from pypi.

+* Add xlm roberta large vit h 14 config.

+

+## 2.5.0

+

+* pretrained B/32 xlm roberta base: first multilingual clip trained on laion5B

+* pretrained B/32 roberta base: first clip trained using an HF text encoder

+

+## 2.4.1

+

+* Add missing hf_tokenizer_name in CLIPTextCfg.

+

+## 2.4.0

+

+* Fix #211, missing RN50x64 config. Fix type of dropout param for ResNet models

+* Bring back LayerNorm impl that casts to input for non bf16/fp16

+* zero_shot.py: set correct tokenizer based on args

+* training/params.py: remove hf params and get them from model config

+

+## 2.3.1

+

+* Implement grad checkpointing for hf model.

+* custom_text: True if hf_model_name is set

+* Disable hf tokenizer parallelism

+

+## 2.3.0

+

+* Generalizable Text Transformer with HuggingFace Models (@iejMac)

+

+## 2.2.0

+

+* Support for custom text tower

+* Add checksum verification for pretrained model weights

+

+## 2.1.0

+

+* lot including sota models, bfloat16 option, better loading, better metrics

+

+## 1.2.0

+

+* ViT-B/32 trained on Laion2B-en

+* add missing openai RN50x64 model

+

+## 1.1.1

+

+* ViT-B/16+

+* Add grad checkpointing support

+* more robust data loader

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 0000000000000000000000000000000000000000..5bfbf6c09daad743dbf9a98d303c0402e4099a27

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,23 @@

+Copyright (c) 2012-2021 Gabriel Ilharco, Mitchell Wortsman,

+Nicholas Carlini, Rohan Taori, Achal Dave, Vaishaal Shankar,

+John Miller, Hongseok Namkoong, Hannaneh Hajishirzi, Ali Farhadi,

+Ludwig Schmidt

+

+Permission is hereby granted, free of charge, to any person obtaining

+a copy of this software and associated documentation files (the

+"Software"), to deal in the Software without restriction, including

+without limitation the rights to use, copy, modify, merge, publish,

+distribute, sublicense, and/or sell copies of the Software, and to

+permit persons to whom the Software is furnished to do so, subject to

+the following conditions:

+

+The above copyright notice and this permission notice shall be

+included in all copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

+EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF

+MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND

+NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE

+LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION

+OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION

+WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

diff --git a/MANIFEST.in b/MANIFEST.in

new file mode 100644

index 0000000000000000000000000000000000000000..c74de18e62cf8fe3b8fa777195f7d38c90b13380

--- /dev/null

+++ b/MANIFEST.in

@@ -0,0 +1,3 @@

+include src/open_clip/bpe_simple_vocab_16e6.txt.gz

+include src/open_clip/model_configs/*.json

+

diff --git a/README.md b/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..a46954bba4e8f9ee0e2c944082688110b0a66f11

--- /dev/null

+++ b/README.md

@@ -0,0 +1,618 @@

+# OpenCLIP

+

+[[Paper]](https://arxiv.org/abs/2212.07143) [[Citations]](#citing) [[Clip Colab]](https://colab.research.google.com/github/mlfoundations/open_clip/blob/master/docs/Interacting_with_open_clip.ipynb) [[Coca Colab]](https://colab.research.google.com/github/mlfoundations/open_clip/blob/master/docs/Interacting_with_open_coca.ipynb)

+[](https://pypi.python.org/pypi/open_clip_torch)

+

+Welcome to an open source implementation of OpenAI's [CLIP](https://arxiv.org/abs/2103.00020) (Contrastive Language-Image Pre-training).

+

+Using this codebase, we have trained several models on a variety of data sources and compute budgets, ranging from [small-scale experiments](docs/LOW_ACC.md) to larger runs including models trained on datasets such as [LAION-400M](https://arxiv.org/abs/2111.02114), [LAION-2B](https://arxiv.org/abs/2210.08402) and [DataComp-1B](https://arxiv.org/abs/2304.14108).

+Many of our models and their scaling properties are studied in detail in the paper [reproducible scaling laws for contrastive language-image learning](https://arxiv.org/abs/2212.07143).

+Some of the best models we've trained and their zero-shot ImageNet-1k accuracy are shown below, along with the ViT-L model trained by OpenAI and other state-of-the-art open source alternatives (all can be loaded via OpenCLIP).

+We provide more details about our full collection of pretrained models [here](docs/PRETRAINED.md), and zero-shot results for 38 datasets [here](docs/openclip_results.csv).

+

+

+

+| Model | Training data | Resolution | # of samples seen | ImageNet zero-shot acc. |

+| -------- | ------- | ------- | ------- | ------- |

+| ConvNext-Base | LAION-2B | 256px | 13B | 71.5% |

+| ConvNext-Large | LAION-2B | 320px | 29B | 76.9% |

+| ConvNext-XXLarge | LAION-2B | 256px | 34B | 79.5% |

+| ViT-B/32 | DataComp-1B | 256px | 34B | 72.8% |

+| ViT-B/16 | DataComp-1B | 224px | 13B | 73.5% |

+| ViT-L/14 | LAION-2B | 224px | 32B | 75.3% |

+| ViT-H/14 | LAION-2B | 224px | 32B | 78.0% |

+| ViT-L/14 | DataComp-1B | 224px | 13B | 79.2% |

+| ViT-G/14 | LAION-2B | 224px | 34B | 80.1% |

+| | | | | |

+| ViT-L/14-quickgelu [(Original CLIP)](https://arxiv.org/abs/2103.00020) | WIT | 224px | 13B | 75.5% |

+| ViT-SO400M/14 [(SigLIP)](https://arxiv.org/abs/2303.15343) | WebLI | 224px | 45B | 82.0% |

+| ViT-L/14 [(DFN)](https://arxiv.org/abs/2309.17425) | DFN-2B | 224px | 39B | 82.2% |

+| ViT-SO400M-14-SigLIP-384 [(SigLIP)](https://arxiv.org/abs/2303.15343) | WebLI | 384px | 45B | 83.1% |

+| ViT-H/14-quickgelu [(DFN)](https://arxiv.org/abs/2309.17425) | DFN-5B | 224px | 39B | 83.4% |

+| ViT-H-14-378-quickgelu [(DFN)](https://arxiv.org/abs/2309.17425) | DFN-5B | 378px | 44B | 84.4% |

+

+Model cards with additional model specific details can be found on the Hugging Face Hub under the OpenCLIP library tag: https://huggingface.co/models?library=open_clip.

+

+If you found this repository useful, please consider [citing](#citing).

+We welcome anyone to submit an issue or send an email if you have any other requests or suggestions.

+

+Note that portions of `src/open_clip/` modelling and tokenizer code are adaptations of OpenAI's official [repository](https://github.com/openai/CLIP).

+

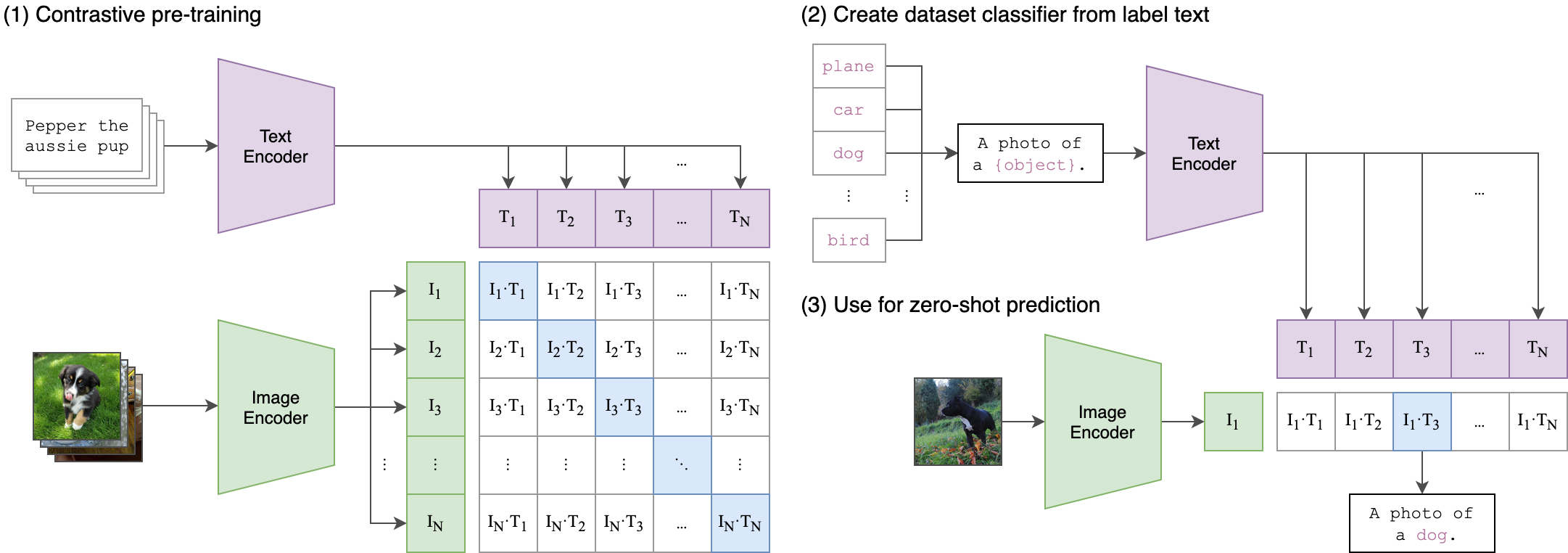

+## Approach

+

+|  |

+|:--:|

+| Image Credit: https://github.com/openai/CLIP |

+

+## Usage

+

+```

+pip install open_clip_torch

+```

+

+```python

+import torch

+from PIL import Image

+import open_clip

+

+model, _, preprocess = open_clip.create_model_and_transforms('ViT-B-32', pretrained='laion2b_s34b_b79k')

+model.eval() # model in train mode by default, impacts some models with BatchNorm or stochastic depth active

+tokenizer = open_clip.get_tokenizer('ViT-B-32')

+

+image = preprocess(Image.open("docs/CLIP.png")).unsqueeze(0)

+text = tokenizer(["a diagram", "a dog", "a cat"])

+

+with torch.no_grad(), torch.autocast("cuda"):

+ image_features = model.encode_image(image)

+ text_features = model.encode_text(text)

+ image_features /= image_features.norm(dim=-1, keepdim=True)

+ text_features /= text_features.norm(dim=-1, keepdim=True)

+

+ text_probs = (100.0 * image_features @ text_features.T).softmax(dim=-1)

+

+print("Label probs:", text_probs) # prints: [[1., 0., 0.]]

+```

+

+If model uses `timm` image encoders (convnext, siglip, eva, etc) ensure the latest timm is installed. Upgrade `timm` if you see 'Unknown model' errors for the image encoder.

+

+If model uses transformers tokenizers, ensure `transformers` is installed.

+

+See also this [[Clip Colab]](https://colab.research.google.com/github/mlfoundations/open_clip/blob/master/docs/Interacting_with_open_clip.ipynb).

+

+To compute billions of embeddings efficiently, you can use [clip-retrieval](https://github.com/rom1504/clip-retrieval) which has openclip support.

+

+### Pretrained models

+

+We offer a simple model interface to instantiate both pre-trained and untrained models.

+To see which pretrained models are available, use the following code snippet.

+More details about our pretrained models are available [here](docs/PRETRAINED.md).

+

+```python

+>>> import open_clip

+>>> open_clip.list_pretrained()

+```

+

+You can find more about the models we support (e.g. number of parameters, FLOPs) in [this table](docs/model_profile.csv).

+

+NOTE: Many existing checkpoints use the QuickGELU activation from the original OpenAI models. This activation is actually less efficient than native torch.nn.GELU in recent versions of PyTorch. The model defaults are now nn.GELU, so one should use model definitions with `-quickgelu` postfix for the OpenCLIP pretrained weights. All OpenAI pretrained weights will always default to QuickGELU. One can also use the non `-quickgelu` model definitions with pretrained weights using QuickGELU but there will be an accuracy drop, for fine-tune that will likely vanish for longer runs.

+Future trained models will use nn.GELU.

+

+### Loading models

+

+Models can be loaded with `open_clip.create_model_and_transforms`, as shown in the example below. The model name and corresponding `pretrained` keys are compatible with the outputs of `open_clip.list_pretrained()`.

+

+The `pretrained` argument also accepts local paths, for example `/path/to/my/b32.pt`.

+You can also load checkpoints from huggingface this way. To do so, download the `open_clip_pytorch_model.bin` file (for example, [https://huggingface.co/laion/CLIP-ViT-L-14-DataComp.XL-s13B-b90K/tree/main](https://huggingface.co/laion/CLIP-ViT-L-14-DataComp.XL-s13B-b90K/blob/main/open_clip_pytorch_model.bin)), and use `pretrained=/path/to/open_clip_pytorch_model.bin`.

+

+```python

+# pretrained also accepts local paths

+model, _, preprocess = open_clip.create_model_and_transforms('ViT-B-32', pretrained='laion2b_s34b_b79k')

+```

+

+## Fine-tuning on classification tasks

+

+This repository is focused on training CLIP models. To fine-tune a *trained* zero-shot model on a downstream classification task such as ImageNet, please see [our other repository: WiSE-FT](https://github.com/mlfoundations/wise-ft). The [WiSE-FT repository](https://github.com/mlfoundations/wise-ft) contains code for our paper on [Robust Fine-tuning of Zero-shot Models](https://arxiv.org/abs/2109.01903), in which we introduce a technique for fine-tuning zero-shot models while preserving robustness under distribution shift.

+

+## Data

+

+To download datasets as webdataset, we recommend [img2dataset](https://github.com/rom1504/img2dataset).

+

+### Conceptual Captions

+

+See [cc3m img2dataset example](https://github.com/rom1504/img2dataset/blob/main/dataset_examples/cc3m.md).

+

+### YFCC and other datasets

+

+In addition to specifying the training data via CSV files as mentioned above, our codebase also supports [webdataset](https://github.com/webdataset/webdataset), which is recommended for larger scale datasets. The expected format is a series of `.tar` files. Each of these `.tar` files should contain two files for each training example, one for the image and one for the corresponding text. Both files should have the same name but different extensions. For instance, `shard_001.tar` could contain files such as `abc.jpg` and `abc.txt`. You can learn more about `webdataset` at [https://github.com/webdataset/webdataset](https://github.com/webdataset/webdataset). We use `.tar` files with 1,000 data points each, which we create using [tarp](https://github.com/webdataset/tarp).

+

+You can download the YFCC dataset from [Multimedia Commons](http://mmcommons.org/).

+Similar to OpenAI, we used a subset of YFCC to reach the aforementioned accuracy numbers.

+The indices of images in this subset are in [OpenAI's CLIP repository](https://github.com/openai/CLIP/blob/main/data/yfcc100m.md).

+

+

+## Training CLIP

+

+### Install

+

+We advise you first create a virtual environment with:

+

+```

+python3 -m venv .env

+source .env/bin/activate

+pip install -U pip

+```

+

+You can then install openclip for training with `pip install 'open_clip_torch[training]'`.

+

+#### Development

+

+If you want to make changes to contribute code, you can clone openclip then run `make install` in openclip folder (after creating a virtualenv)

+

+Install pip PyTorch as per https://pytorch.org/get-started/locally/

+

+You may run `make install-training` to install training deps

+

+#### Testing

+

+Test can be run with `make install-test` then `make test`

+

+`python -m pytest -x -s -v tests -k "training"` to run a specific test

+

+Running regression tests against a specific git revision or tag:

+1. Generate testing data

+ ```sh

+ python tests/util_test.py --model RN50 RN101 --save_model_list models.txt --git_revision 9d31b2ec4df6d8228f370ff20c8267ec6ba39383

+ ```

+ **_WARNING_: This will invoke git and modify your working tree, but will reset it to the current state after data has been generated! \

+ Don't modify your working tree while test data is being generated this way.**

+

+2. Run regression tests

+ ```sh

+ OPEN_CLIP_TEST_REG_MODELS=models.txt python -m pytest -x -s -v -m regression_test

+ ```

+

+### Sample single-process running code:

+

+```bash

+python -m open_clip_train.main \

+ --save-frequency 1 \

+ --zeroshot-frequency 1 \

+ --report-to tensorboard \

+ --train-data="/path/to/train_data.csv" \

+ --val-data="/path/to/validation_data.csv" \

+ --csv-img-key filepath \

+ --csv-caption-key title \

+ --imagenet-val=/path/to/imagenet/root/val/ \

+ --warmup 10000 \

+ --batch-size=128 \

+ --lr=1e-3 \

+ --wd=0.1 \

+ --epochs=30 \

+ --workers=8 \

+ --model RN50

+```

+

+Note: `imagenet-val` is the path to the *validation* set of ImageNet for zero-shot evaluation, not the training set!

+You can remove this argument if you do not want to perform zero-shot evaluation on ImageNet throughout training. Note that the `val` folder should contain subfolders. If it does not, please use [this script](https://raw.githubusercontent.com/soumith/imagenetloader.torch/master/valprep.sh).

+

+### Multi-GPU and Beyond

+

+This code has been battle tested up to 1024 A100s and offers a variety of solutions

+for distributed training. We include native support for SLURM clusters.

+

+As the number of devices used to train increases, so does the space complexity of

+the the logit matrix. Using a naïve all-gather scheme, space complexity will be

+`O(n^2)`. Instead, complexity may become effectively linear if the flags

+`--gather-with-grad` and `--local-loss` are used. This alteration results in one-to-one

+numerical results as the naïve method.

+

+#### Epochs

+

+For larger datasets (eg Laion2B), we recommend setting `--train-num-samples` to a lower value than the full epoch, for example `--train-num-samples 135646078` to 1/16 of an epoch in conjunction with `--dataset-resampled` to do sampling with replacement. This allows having frequent checkpoints to evaluate more often.

+

+#### Patch Dropout

+

+Recent research has shown that one can dropout half to three-quarters of the visual tokens, leading to up to 2-3x training speeds without loss of accuracy.

+

+You can set this on your visual transformer config with the key `patch_dropout`.

+

+In the paper, they also finetuned without the patch dropout at the end. You can do this with the command-line argument `--force-patch-dropout 0.`

+

+#### Multiple data sources

+

+OpenCLIP supports using multiple data sources, by separating different data paths with `::`.

+For instance, to train on CC12M and on LAION, one might use `--train-data "/data/cc12m/cc12m-train-{0000..2175}.tar::/data/LAION-400M/{00000..41455}.tar"`.

+Using `--dataset-resampled` is recommended for these cases.

+

+By default, on expectation the amount of times the model will see a sample from each source is proportional to the size of the source.

+For instance, when training on one data source with size 400M and one with size 10M, samples from the first source are 40x more likely to be seen in expectation.

+

+We also support different weighting of the data sources, by using the `--train-data-upsampling-factors` flag.

+For instance, using `--train-data-upsampling-factors=1::1` in the above scenario is equivalent to not using the flag, and `--train-data-upsampling-factors=1::2` is equivalent to upsampling the second data source twice.

+If you want to sample from data sources with the same frequency, the upsampling factors should be inversely proportional to the sizes of the data sources.

+For instance, if dataset `A` has 1000 samples and dataset `B` has 100 samples, you can use `--train-data-upsampling-factors=0.001::0.01` (or analogously, `--train-data-upsampling-factors=1::10`).

+

+#### Single-Node

+

+We make use of `torchrun` to launch distributed jobs. The following launches a

+a job on a node of 4 GPUs:

+

+```bash

+cd open_clip/src

+torchrun --nproc_per_node 4 -m open_clip_train.main \

+ --train-data '/data/cc12m/cc12m-train-{0000..2175}.tar' \

+ --train-num-samples 10968539 \

+ --dataset-type webdataset \

+ --batch-size 320 \

+ --precision amp \

+ --workers 4 \

+ --imagenet-val /data/imagenet/validation/

+```

+

+#### Multi-Node

+

+The same script above works, so long as users include information about the number

+of nodes and host node.

+

+```bash

+cd open_clip/src

+torchrun --nproc_per_node=4 \

+ --rdzv_endpoint=$HOSTE_NODE_ADDR \

+ -m open_clip_train.main \

+ --train-data '/data/cc12m/cc12m-train-{0000..2175}.tar' \

+ --train-num-samples 10968539 \

+ --dataset-type webdataset \

+ --batch-size 320 \

+ --precision amp \

+ --workers 4 \

+ --imagenet-val /data/imagenet/validation/

+```

+

+#### SLURM

+

+This is likely the easiest solution to utilize. The following script was used to

+train our largest models:

+

+```bash

+#!/bin/bash -x

+#SBATCH --nodes=32

+#SBATCH --gres=gpu:4

+#SBATCH --ntasks-per-node=4

+#SBATCH --cpus-per-task=6

+#SBATCH --wait-all-nodes=1

+#SBATCH --job-name=open_clip

+#SBATCH --account=ACCOUNT_NAME

+#SBATCH --partition PARTITION_NAME

+

+eval "$(/path/to/conda/bin/conda shell.bash hook)" # init conda

+conda activate open_clip

+export CUDA_VISIBLE_DEVICES=0,1,2,3

+export MASTER_PORT=12802

+

+master_addr=$(scontrol show hostnames "$SLURM_JOB_NODELIST" | head -n 1)

+export MASTER_ADDR=$master_addr

+

+cd /shared/open_clip

+export PYTHONPATH="$PYTHONPATH:$PWD/src"

+srun --cpu_bind=v --accel-bind=gn python -u src/open_clip_train/main.py \

+ --save-frequency 1 \

+ --report-to tensorboard \

+ --train-data="/data/LAION-400M/{00000..41455}.tar" \

+ --warmup 2000 \

+ --batch-size=256 \

+ --epochs=32 \

+ --workers=8 \

+ --model ViT-B-32 \

+ --name "ViT-B-32-Vanilla" \

+ --seed 0 \

+ --local-loss \

+ --gather-with-grad

+```

+

+### Resuming from a checkpoint:

+

+```bash

+python -m open_clip_train.main \

+ --train-data="/path/to/train_data.csv" \

+ --val-data="/path/to/validation_data.csv" \

+ --resume /path/to/checkpoints/epoch_K.pt

+```

+

+### Training CoCa:

+Training [CoCa](https://arxiv.org/abs/2205.01917) models is enabled through specifying a CoCa config using the ```--model``` parameter of the training script. Currently available configs are "coca_base", "coca_ViT-B-32", and "coca_roberta-ViT-B-32" (which uses RoBERTa as the text encoder). CoCa configs are different from CLIP configs because they have an additional "multimodal_cfg" component which specifies parameters for the multimodal text decoder. Here's an example from the coca_ViT-B-32 config:

+```json

+"multimodal_cfg": {

+ "context_length": 76,

+ "vocab_size": 49408,

+ "width": 512,

+ "heads": 8,

+ "layers": 12,

+ "latent_dim": 512,

+ "attn_pooler_heads": 8

+}

+```

+Credit to [lucidrains](https://github.com/lucidrains) for [initial code](https://github.com/lucidrains/CoCa-pytorch), [gpucce](https://github.com/gpucce) for adapting the code to open_clip, and [iejMac](https://github.com/iejMac) for training the models.

+

+### Generating text with CoCa

+

+```python

+import open_clip

+import torch

+from PIL import Image

+

+model, _, transform = open_clip.create_model_and_transforms(

+ model_name="coca_ViT-L-14",

+ pretrained="mscoco_finetuned_laion2B-s13B-b90k"

+)

+

+im = Image.open("cat.jpg").convert("RGB")

+im = transform(im).unsqueeze(0)

+

+with torch.no_grad(), torch.cuda.amp.autocast():

+ generated = model.generate(im)

+

+print(open_clip.decode(generated[0]).split("")[0].replace("", ""))

+```

+

+See also this [[Coca Colab]](https://colab.research.google.com/github/mlfoundations/open_clip/blob/master/docs/Interacting_with_open_coca.ipynb)

+

+### Fine Tuning CoCa

+

+To fine-tune coca on mscoco, first create the dataset, one way is using a csvdataset and perhaps the simplest way to do it is using [CLIP_benchmark](https://github.com/LAION-AI/CLIP_benchmark) which in turn uses [pycocotools](https://github.com/cocodataset/cocoapi) (that can be used also by itself).

+

+```python

+from clip_benchmark.datasets.builder import build_dataset

+import pandas as pd

+import os

+

+root_path = "path/to/data/dir" # set this to smth meaningful

+ds = build_dataset("mscoco_captions", root=root_path, split="train", task="captioning") # this downloads the dataset if it is not there already

+coco = ds.coco

+imgs = coco.loadImgs(coco.getImgIds())

+future_df = {"filepath":[], "title":[]}

+for img in imgs:

+ caps = coco.imgToAnns[img["id"]]

+ for cap in caps:

+ future_df["filepath"].append(img["file_name"])

+ future_df["title"].append(cap["caption"])

+pd.DataFrame.from_dict(future_df).to_csv(

+ os.path.join(root_path, "train2014.csv"), index=False, sep="\t"

+)

+```

+This should create a csv dataset that one can use to fine-tune coca with open_clip

+```bash

+python -m open_clip_train.main \

+ --dataset-type "csv" \

+ --train-data "path/to/data/dir/train2014.csv" \

+ --warmup 1000 \

+ --batch-size 128 \

+ --lr 1e-5 \

+ --wd 0.1 \

+ --epochs 1 \

+ --workers 3 \

+ --model "coca_ViT-L-14" \

+ --report-to "wandb" \

+ --coca-contrastive-loss-weight 0 \

+ --coca-caption-loss-weight 1 \

+ --log-every-n-steps 100

+```

+

+This is a general setting, open_clip has very parameters that can be set, ```python -m open_clip_train.main --help``` should show them. The only relevant change compared to pre-training are the two arguments

+

+```bash

+--coca-contrastive-loss-weight 0

+--coca-caption-loss-weight 1

+```

+which make the model only train the generative side.

+

+### Training with pre-trained language models as text encoder:

+

+If you wish to use different language models as the text encoder for CLIP you can do so by using one of the Hugging Face model configs in ```src/open_clip/model_configs``` and passing in it's tokenizer as the ```--model``` and ```--hf-tokenizer-name``` parameters respectively. Currently we only support RoBERTa ("test-roberta" config), however adding new models should be trivial. You can also determine how many layers, from the end, to leave unfrozen with the ```--lock-text-unlocked-layers``` parameter. Here's an example command to train CLIP with the RoBERTa LM that has it's last 10 layers unfrozen:

+```bash

+python -m open_clip_train.main \

+ --train-data="pipe:aws s3 cp s3://s-mas/cc3m/{00000..00329}.tar -" \

+ --train-num-samples 3000000 \

+ --val-data="pipe:aws s3 cp s3://s-mas/cc3m/{00330..00331}.tar -" \

+ --val-num-samples 10000 \

+ --dataset-type webdataset \

+ --batch-size 256 \

+ --warmup 2000 \

+ --epochs 10 \

+ --lr 5e-4 \

+ --precision amp \

+ --workers 6 \

+ --model "roberta-ViT-B-32" \

+ --lock-text \

+ --lock-text-unlocked-layers 10 \

+ --name "10_unfrozen" \

+ --report-to "tensorboard" \

+```

+

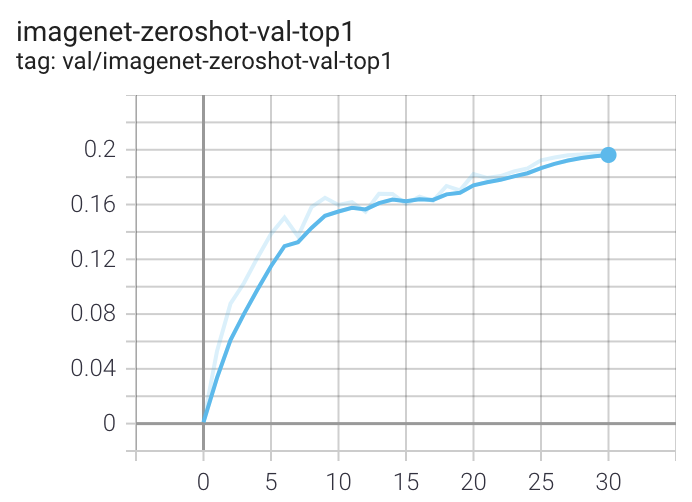

+### Loss Curves

+

+When run on a machine with 8 GPUs the command should produce the following training curve for Conceptual Captions:

+

+

+

+More detailed curves for Conceptual Captions are given at [/docs/clip_conceptual_captions.md](/docs/clip_conceptual_captions.md).

+

+When training a RN50 on YFCC the same hyperparameters as above are used, with the exception of `lr=5e-4` and `epochs=32`.

+

+Note that to use another model, like `ViT-B/32` or `RN50x4` or `RN50x16` or `ViT-B/16`, specify with `--model RN50x4`.

+

+### Logging

+

+For tensorboard logging, run:

+```bash

+tensorboard --logdir=logs/tensorboard/ --port=7777

+```

+

+For wandb logging, we recommend looking at the `step` variable instead of `Step`, since the later was not properly set in earlier versions of this codebase.

+For older runs with models trained before https://github.com/mlfoundations/open_clip/pull/613, the `Step` variable should be ignored.

+For newer runs, after that PR, the two variables are the same.

+

+## Evaluation / Zero-Shot

+

+We recommend https://github.com/LAION-AI/CLIP_benchmark#how-to-use for systematic evaluation on 40 datasets.

+

+### Evaluating local checkpoint:

+

+```bash

+python -m open_clip_train.main \

+ --val-data="/path/to/validation_data.csv" \

+ --model RN101 \

+ --pretrained /path/to/checkpoints/epoch_K.pt

+```

+

+### Evaluating hosted pretrained checkpoint on ImageNet zero-shot prediction:

+

+```bash

+python -m open_clip_train.main \

+ --imagenet-val /path/to/imagenet/validation \

+ --model ViT-B-32-quickgelu \

+ --pretrained laion400m_e32

+```

+

+### Model distillation

+

+You can distill from a pre-trained by using `--distill-model` and `--distill-pretrained` to specify the model you'd like to distill from.

+For instance, to distill from OpenAI ViT-L/14 use `--distill-model ViT-L-14 --distill-pretrained openai`.

+

+### Gradient accumulation

+

+To simulate larger batches use `--accum-freq k`. If per gpu batch size, `--batch-size`, is `m`, then the effective batch size will be `k * m * num_gpus`.

+

+When increasing `--accum-freq` from its default of 1, samples/s will remain approximately constant (batch size will double, as will time-per-batch). It is recommended to use other features to reduce batch size such as `--grad-checkpointing --local-loss --gather-with-grad` before increasing `--accum-freq`. `--accum-freq` can be used in addition to these features.

+

+Instead of 1 forward pass per example, there are now 2 forward passes per-example. However, the first is done with `torch.no_grad`.

+

+There is some additional GPU memory required --- the features and data from all `m` batches are stored in memory.

+

+There are also `m` loss computations instead of the usual 1.

+

+For more information see Cui et al. (https://arxiv.org/abs/2112.09331) or Pham et al. (https://arxiv.org/abs/2111.10050).

+

+### Int8 Support

+

+We have beta support for int8 training and inference.

+You can enable int8 training with `--use-bnb-linear SwitchBackLinearGlobal` or `--use-bnb-linear SwitchBackLinearGlobalMemEfficient`.

+Please see the bitsandbytes library for definitions for these layers.

+For CLIP VIT-Huge this should currently correspond to a 10% training speedup with no accuracy loss.

+More speedups comin when the attention layer is refactored so that linear layers man be replaced there, too.

+

+See the tutorial https://github.com/mlfoundations/open_clip/blob/main/tutorials/int8_tutorial.ipynb or [paper](https://arxiv.org/abs/2304.13013).

+

+### Support for remote loading/training

+

+It is always possible to resume directly from a remote file, e.g., a file in an s3 bucket. Just set `--resume s3:// `.

+This will work with any filesystem supported by `fsspec`.

+

+It is also possible to train `open_clip` models while continuously backing up to s3. This can help to avoid slow local file systems.

+

+Say that your node has a local ssd `/scratch`, an s3 bucket `s3://`.

+

+In that case, set `--logs /scratch` and `--remote-sync s3://`. Then, a background process will sync `/scratch/` to `s3:///`. After syncing, the background process will sleep for `--remote-sync-frequency` seconds, which defaults to 5 minutes.

+

+There is also experimental support for syncing to other remote file systems, not just s3. To do so, specify `--remote-sync-protocol fsspec`. However, this is currently very slow and not recommended.

+

+Also, to optionally avoid saving too many checkpoints locally when using these features, you can use `--delete-previous-checkpoint` which deletes the previous checkpoint after saving a new one.

+

+Note: if you are using this feature with `--resume latest`, there are a few warnings. First, use with `--save-most-recent` is not supported. Second, only `s3` is supported. Finally, since the sync happens in the background, it is possible that the most recent checkpoint may not be finished syncing to the remote.

+

+### Pushing Models to Hugging Face Hub

+

+The module `open_clip.push_to_hf_hub` includes helpers for pushing models /w weights and config to the HF Hub.

+

+The tool can be run from command line, ex:

+`python -m open_clip.push_to_hf_hub --model convnext_large_d_320 --pretrained /train/checkpoints/epoch_12.pt --repo-id laion/CLIP-convnext_large_d_320.laion2B-s29B-b131K-ft`

+

+

+

+## Acknowledgments

+

+We gratefully acknowledge the Gauss Centre for Supercomputing e.V. (www.gauss-centre.eu) for funding this part of work by providing computing time through the John von Neumann Institute for Computing (NIC) on the GCS Supercomputer JUWELS Booster at Jülich Supercomputing Centre (JSC).

+

+## The Team

+

+Current development of this repository is led by [Ross Wightman](https://rwightman.com/), [Romain Beaumont](https://github.com/rom1504), [Cade Gordon](http://cadegordon.io/), and [Vaishaal Shankar](http://vaishaal.com/).

+

+The original version of this repository is from a group of researchers at UW, Google, Stanford, Amazon, Columbia, and Berkeley.

+

+[Gabriel Ilharco*](http://gabrielilharco.com/), [Mitchell Wortsman*](https://mitchellnw.github.io/), [Nicholas Carlini](https://nicholas.carlini.com/), [Rohan Taori](https://www.rohantaori.com/), [Achal Dave](http://www.achaldave.com/), [Vaishaal Shankar](http://vaishaal.com/), [John Miller](https://people.eecs.berkeley.edu/~miller_john/), [Hongseok Namkoong](https://hsnamkoong.github.io/), [Hannaneh Hajishirzi](https://homes.cs.washington.edu/~hannaneh/), [Ali Farhadi](https://homes.cs.washington.edu/~ali/), [Ludwig Schmidt](https://people.csail.mit.edu/ludwigs/)

+

+Special thanks to [Jong Wook Kim](https://jongwook.kim/) and [Alec Radford](https://github.com/Newmu) for help with reproducing CLIP!

+

+## Citing

+

+If you found this repository useful, please consider citing:

+```bibtex

+@software{ilharco_gabriel_2021_5143773,

+ author = {Ilharco, Gabriel and

+ Wortsman, Mitchell and

+ Wightman, Ross and

+ Gordon, Cade and

+ Carlini, Nicholas and

+ Taori, Rohan and

+ Dave, Achal and

+ Shankar, Vaishaal and

+ Namkoong, Hongseok and

+ Miller, John and

+ Hajishirzi, Hannaneh and

+ Farhadi, Ali and

+ Schmidt, Ludwig},

+ title = {OpenCLIP},

+ month = jul,

+ year = 2021,

+ note = {If you use this software, please cite it as below.},

+ publisher = {Zenodo},

+ version = {0.1},

+ doi = {10.5281/zenodo.5143773},

+ url = {https://doi.org/10.5281/zenodo.5143773}

+}

+```

+

+```bibtex

+@inproceedings{cherti2023reproducible,

+ title={Reproducible scaling laws for contrastive language-image learning},

+ author={Cherti, Mehdi and Beaumont, Romain and Wightman, Ross and Wortsman, Mitchell and Ilharco, Gabriel and Gordon, Cade and Schuhmann, Christoph and Schmidt, Ludwig and Jitsev, Jenia},

+ booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

+ pages={2818--2829},

+ year={2023}

+}

+```

+

+```bibtex

+@inproceedings{Radford2021LearningTV,

+ title={Learning Transferable Visual Models From Natural Language Supervision},

+ author={Alec Radford and Jong Wook Kim and Chris Hallacy and A. Ramesh and Gabriel Goh and Sandhini Agarwal and Girish Sastry and Amanda Askell and Pamela Mishkin and Jack Clark and Gretchen Krueger and Ilya Sutskever},

+ booktitle={ICML},

+ year={2021}

+}

+```

+

+```bibtex

+@inproceedings{schuhmann2022laionb,

+ title={{LAION}-5B: An open large-scale dataset for training next generation image-text models},

+ author={Christoph Schuhmann and

+ Romain Beaumont and

+ Richard Vencu and

+ Cade W Gordon and

+ Ross Wightman and

+ Mehdi Cherti and

+ Theo Coombes and

+ Aarush Katta and

+ Clayton Mullis and

+ Mitchell Wortsman and

+ Patrick Schramowski and

+ Srivatsa R Kundurthy and

+ Katherine Crowson and

+ Ludwig Schmidt and

+ Robert Kaczmarczyk and

+ Jenia Jitsev},

+ booktitle={Thirty-sixth Conference on Neural Information Processing Systems Datasets and Benchmarks Track},

+ year={2022},

+ url={https://openreview.net/forum?id=M3Y74vmsMcY}

+}

+```

+

+[](https://zenodo.org/badge/latestdoi/390536799)

diff --git a/models.txt b/models.txt

new file mode 100644

index 0000000000000000000000000000000000000000..ce97c15febdbd26e1c79a71a0c34e059853a7611

--- /dev/null

+++ b/models.txt

@@ -0,0 +1,2 @@

+RN101

+RN50

diff --git a/pytest.ini b/pytest.ini

new file mode 100644

index 0000000000000000000000000000000000000000..9546b10ce86328ef21697b8d134a6d5865632f35

--- /dev/null

+++ b/pytest.ini

@@ -0,0 +1,3 @@

+[pytest]

+markers =

+ regression_test

diff --git a/requirements.txt b/requirements.txt

new file mode 100644

index 0000000000000000000000000000000000000000..4b1ff4a3d66d6ce16afb3712fe26d698e0323b43

--- /dev/null

+++ b/requirements.txt

@@ -0,0 +1,8 @@

+torch>=1.9.0

+torchvision

+regex

+ftfy

+tqdm

+huggingface_hub

+safetensors

+timm

diff --git a/src/open_clip/__init__.py b/src/open_clip/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..d0419b4d7887b5af810f6251c9e4b3c18971b59a

--- /dev/null

+++ b/src/open_clip/__init__.py

@@ -0,0 +1,18 @@

+from .version import __version__

+

+from .coca_model import CoCa

+from .constants import OPENAI_DATASET_MEAN, OPENAI_DATASET_STD

+from .factory import create_model, create_model_and_transforms, create_model_from_pretrained, get_tokenizer, create_loss

+from .factory import list_models, add_model_config, get_model_config, load_checkpoint

+from .loss import ClipLoss, DistillClipLoss, CoCaLoss

+from .model import CLIP, CustomTextCLIP, CLIPTextCfg, CLIPVisionCfg, \

+ convert_weights_to_lp, convert_weights_to_fp16, trace_model, get_cast_dtype, get_input_dtype, \

+ get_model_tokenize_cfg, get_model_preprocess_cfg, set_model_preprocess_cfg

+from .openai import load_openai_model, list_openai_models

+from .pretrained import list_pretrained, list_pretrained_models_by_tag, list_pretrained_tags_by_model, \

+ get_pretrained_url, download_pretrained_from_url, is_pretrained_cfg, get_pretrained_cfg, download_pretrained

+from .push_to_hf_hub import push_pretrained_to_hf_hub, push_to_hf_hub

+from .tokenizer import SimpleTokenizer, tokenize, decode

+from .transform import image_transform, AugmentationCfg

+from .zero_shot_classifier import build_zero_shot_classifier, build_zero_shot_classifier_legacy

+from .zero_shot_metadata import OPENAI_IMAGENET_TEMPLATES, SIMPLE_IMAGENET_TEMPLATES, IMAGENET_CLASSNAMES

diff --git a/src/open_clip/coca_model.py b/src/open_clip/coca_model.py

new file mode 100644

index 0000000000000000000000000000000000000000..ebf65563043237dfccf85e23b41d6da9ad113397

--- /dev/null

+++ b/src/open_clip/coca_model.py

@@ -0,0 +1,582 @@

+from typing import Dict, List, Optional, Union

+

+import torch

+from torch import nn

+from torch.nn import functional as F

+import numpy as np

+from dataclasses import dataclass

+

+from .transformer import (

+ LayerNormFp32,

+ LayerNorm,

+ QuickGELU,

+ MultimodalTransformer,

+)

+from .model import CLIPTextCfg, CLIPVisionCfg, _build_vision_tower, _build_text_tower

+

+try:

+ from transformers import (

+ BeamSearchScorer,

+ LogitsProcessorList,

+ TopPLogitsWarper,

+ TopKLogitsWarper,

+ RepetitionPenaltyLogitsProcessor,

+ MinLengthLogitsProcessor,

+ MaxLengthCriteria,

+ StopStringCriteria,

+ EosTokenCriteria,

+ StoppingCriteriaList

+ )

+

+ GENERATION_TYPES = {

+ "top_k": TopKLogitsWarper,

+ "top_p": TopPLogitsWarper,

+ "beam_search": "beam_search"

+ }

+ _has_transformers = True

+except ImportError as e:

+ GENERATION_TYPES = {

+ "top_k": None,

+ "top_p": None,

+ "beam_search": "beam_search"

+ }

+ _has_transformers = False

+

+

+@dataclass

+class MultimodalCfg(CLIPTextCfg):

+ mlp_ratio: int = 4

+ dim_head: int = 64

+ heads: int = 8

+ n_queries: int = 256

+ attn_pooler_heads: int = 8

+

+

+def _build_text_decoder_tower(

+ embed_dim,

+ multimodal_cfg,

+ quick_gelu: bool = False,

+ cast_dtype: Optional[torch.dtype] = None,

+):

+ multimodal_cfg = MultimodalCfg(**multimodal_cfg) if isinstance(multimodal_cfg, dict) else multimodal_cfg

+ act_layer = QuickGELU if quick_gelu else nn.GELU

+ norm_layer = (

+ LayerNormFp32 if cast_dtype in (torch.float16, torch.bfloat16) else LayerNorm

+ )

+

+ decoder = MultimodalTransformer(

+ context_length=multimodal_cfg.context_length,

+ width=multimodal_cfg.width,

+ heads=multimodal_cfg.heads,

+ layers=multimodal_cfg.layers,

+ ls_init_value=multimodal_cfg.ls_init_value,

+ output_dim=embed_dim,

+ act_layer=act_layer,

+ norm_layer=norm_layer,

+ )

+

+ return decoder

+

+

+def _token_to_tensor(token_id, device: str = "cpu") -> torch.Tensor:

+ if not isinstance(token_id, torch.Tensor):

+ if isinstance(token_id, int):

+ token_id = [token_id]

+ token_id = torch.tensor(token_id, device=device)

+ return token_id

+

+

+class CoCa(nn.Module):

+ def __init__(

+ self,

+ embed_dim,

+ multimodal_cfg: MultimodalCfg,

+ text_cfg: CLIPTextCfg,

+ vision_cfg: CLIPVisionCfg,

+ quick_gelu: bool = False,

+ init_logit_scale: float = np.log(1 / 0.07),

+ init_logit_bias: Optional[float] = None,

+ nonscalar_logit_scale: bool = False,

+ cast_dtype: Optional[torch.dtype] = None,

+ pad_id: int = 0,

+ ):

+ super().__init__()

+ multimodal_cfg = MultimodalCfg(**multimodal_cfg) if isinstance(multimodal_cfg, dict) else multimodal_cfg

+ text_cfg = CLIPTextCfg(**text_cfg) if isinstance(text_cfg, dict) else text_cfg

+ vision_cfg = CLIPVisionCfg(**vision_cfg) if isinstance(vision_cfg, dict) else vision_cfg

+

+ self.text = _build_text_tower(

+ embed_dim=embed_dim,

+ text_cfg=text_cfg,

+ quick_gelu=quick_gelu,

+ cast_dtype=cast_dtype,

+ )

+

+ vocab_size = (

+ text_cfg.vocab_size # for hf models

+ if hasattr(text_cfg, "hf_model_name") and text_cfg.hf_model_name is not None

+ else text_cfg.vocab_size

+ )

+

+ self.visual = _build_vision_tower(

+ embed_dim=embed_dim,

+ vision_cfg=vision_cfg,

+ quick_gelu=quick_gelu,

+ cast_dtype=cast_dtype,

+ )

+

+ self.text_decoder = _build_text_decoder_tower(

+ vocab_size,

+ multimodal_cfg=multimodal_cfg,

+ quick_gelu=quick_gelu,

+ cast_dtype=cast_dtype,

+ )

+

+ lshape = [1] if nonscalar_logit_scale else []

+ self.logit_scale = nn.Parameter(torch.ones(lshape) * init_logit_scale)

+ if init_logit_bias is not None:

+ self.logit_bias = nn.Parameter(torch.ones(lshape) * init_logit_bias)

+ else:

+ self.logit_bias = None

+ self.pad_id = pad_id

+

+ self.context_length = multimodal_cfg.context_length

+

+ @torch.jit.ignore

+ def set_grad_checkpointing(self, enable: bool = True):

+ self.visual.set_grad_checkpointing(enable)

+ self.text.set_grad_checkpointing(enable)

+ self.text_decoder.set_grad_checkpointing(enable)

+

+ def _encode_image(self, images, normalize: bool = True):

+ image_latent, tokens_embs = self.visual(images)

+ image_latent = F.normalize(image_latent, dim=-1) if normalize else image_latent

+ return image_latent, tokens_embs

+

+ def _encode_text(self, text, normalize: bool = True):

+ text_latent, token_emb = self.text(text)

+ text_latent = F.normalize(text_latent, dim=-1) if normalize else text_latent

+ return text_latent, token_emb

+

+ def encode_image(self, images, normalize: bool = True):

+ image_latent, _ = self._encode_image(images, normalize=normalize)

+ return image_latent

+

+ def encode_text(self, text, normalize: bool = True):

+ text_latent, _ = self._encode_text(text, normalize=normalize)

+ return text_latent

+

+ def forward_intermediates(

+ self,

+ image: Optional[torch.Tensor] = None,

+ text: Optional[torch.Tensor] = None,

+ image_indices: Optional[Union[int, List[int]]] = None,

+ text_indices: Optional[Union[int, List[int]]] = None,

+ stop_early: bool = False,

+ normalize: bool = True,

+ normalize_intermediates: bool = False,

+ intermediates_only: bool = False,

+ image_output_fmt: str = 'NCHW',

+ image_output_extra_tokens: bool = False,

+ text_output_fmt: str = 'NLC',

+ text_output_extra_tokens: bool = False,

+ output_logits: bool = False,

+ output_logit_scale_bias: bool = False,

+ ) -> Dict[str, Union[torch.Tensor, List[torch.Tensor]]]:

+ """ Forward features that returns intermediates.

+

+ Args:

+ image: Input image tensor

+ text: Input text tensor

+ image_indices: For image tower, Take last n blocks if int, all if None, select matching indices if sequence

+ text_indices: Take last n blocks if int, all if None, select matching indices if sequence

+ stop_early: Stop iterating over blocks when last desired intermediate hit

+ normalize: L2 Normalize final image and text features (if present)

+ normalize_intermediates: Apply final encoder norm layer to all intermediates (if possible)

+ intermediates_only: Only return intermediate features, do not return final features

+ image_output_fmt: Shape of intermediate image feature outputs

+ image_output_extra_tokens: Return both prefix and spatial intermediate tokens

+ text_output_fmt: Shape of intermediate text feature outputs

+ text_output_extra_tokens: Return both prefix and spatial intermediate tokens

+ output_logits: Include logits in output

+ output_logit_scale_bias: Include the logit scale bias in the output

+ Returns:

+

+ """

+ output = {}

+ if intermediates_only:

+ # intermediates only disables final feature normalization, and include logits

+ normalize = False

+ output_logits = False

+ if output_logits:

+ assert False, 'FIXME, needs implementing'

+

+ if image is not None:

+ image_output = self.visual.forward_intermediates(

+ image,

+ indices=image_indices,

+ stop_early=stop_early,

+ normalize_intermediates=normalize_intermediates,

+ intermediates_only=intermediates_only,

+ output_fmt=image_output_fmt,

+ output_extra_tokens=image_output_extra_tokens,

+ )

+ if normalize and "image_features" in image_output:

+ image_output["image_features"] = F.normalize(image_output["image_features"], dim=-1)

+ output.update(image_output)

+

+ if text is not None:

+ text_output = self.text.forward_intermediates(

+ text,

+ indices=text_indices,

+ stop_early=stop_early,

+ normalize_intermediates=normalize_intermediates,

+ intermediates_only=intermediates_only,

+ output_fmt=text_output_fmt,

+ output_extra_tokens=text_output_extra_tokens,

+ )

+ if normalize and "text_features" in text_output:

+ text_output["text_features"] = F.normalize(text_output["text_features"], dim=-1)

+ output.update(text_output)

+

+ # FIXME text decoder

+ logit_scale_exp = self.logit_scale.exp() if output_logits or output_logit_scale_bias else None

+ if output_logit_scale_bias:

+ output["logit_scale"] = logit_scale_exp

+ if self.logit_bias is not None:

+ output['logit_bias'] = self.logit_bias

+

+ return output

+

+ def forward(

+ self,

+ image,

+ text: Optional[torch.Tensor] = None,

+ image_latent: Optional[torch.Tensor] = None,

+ image_embs: Optional[torch.Tensor] = None,

+ output_labels: bool = True,

+ ):

+ if image_latent is None or image_embs is None:

+ image_latent, image_embs = self._encode_image(image)

+

+ if text is None:

+ return {"image_features": image_latent, "image_embs": image_embs}

+

+ text_latent, token_embs = self._encode_text(text)

+

+ # FIXME this isn't an ideal solution, would like to improve -RW

+ labels: Optional[torch.Tensor] = text[:, 1:] if output_labels else None

+ if output_labels:

+ # align text_embs and thus logits with labels for teacher-forcing caption loss

+ token_embs = token_embs[:, :-1]

+

+ logits = self.text_decoder(image_embs, token_embs)

+ out_dict = {

+ "image_features": image_latent,

+ "text_features": text_latent,

+ "logits": logits,

+ "logit_scale": self.logit_scale.exp()

+ }

+ if labels is not None:

+ out_dict["labels"] = labels

+ if self.logit_bias is not None:

+ out_dict["logit_bias"] = self.logit_bias

+ return out_dict

+

+ def generate(

+ self,

+ image,

+ text=None,

+ seq_len=30,

+ max_seq_len=77,

+ temperature=1.,

+ generation_type="beam_search",

+ top_p=0.1, # keep tokens in the 1 - top_p quantile

+ top_k=1, # keeps the top_k most probable tokens

+ pad_token_id=None,

+ eos_token_id=None,

+ sot_token_id=None,

+ num_beams=6,

+ num_beam_groups=3,

+ min_seq_len=5,

+ stopping_criteria=None,

+ repetition_penalty=1.0,

+ fixed_output_length=False # if True output.shape == (batch_size, seq_len)

+ ):

+ # taking many ideas and components from HuggingFace GenerationMixin

+ # https://huggingface.co/docs/transformers/main/en/main_classes/text_generation

+ assert _has_transformers, "Please install transformers for generate functionality. `pip install transformers`."

+ assert seq_len > min_seq_len, "seq_len must be larger than min_seq_len"

+ device = image.device

+

+ with torch.no_grad():

+ sot_token_id = _token_to_tensor(49406 if sot_token_id is None else sot_token_id, device=device)

+ eos_token_id = _token_to_tensor(49407 if eos_token_id is None else eos_token_id, device=device)

+ pad_token_id = self.pad_id if pad_token_id is None else pad_token_id

+ logit_processor = LogitsProcessorList(

+ [

+ MinLengthLogitsProcessor(min_seq_len, eos_token_id),

+ RepetitionPenaltyLogitsProcessor(repetition_penalty),

+ ]

+ )

+

+ if stopping_criteria is None:

+ stopping_criteria = [MaxLengthCriteria(max_length=seq_len)]

+ stopping_criteria = StoppingCriteriaList(stopping_criteria)

+

+ if generation_type == "beam_search":

+ output = self._generate_beamsearch(

+ image_inputs=image,

+ pad_token_id=pad_token_id,

+ eos_token_id=eos_token_id,

+ sot_token_id=sot_token_id,

+ num_beams=num_beams,

+ num_beam_groups=num_beam_groups,

+ min_seq_len=min_seq_len,

+ stopping_criteria=stopping_criteria,

+ logit_processor=logit_processor,

+ )

+ if fixed_output_length and output.shape[1] < seq_len:

+ pad_len = seq_len - output.shape[1]

+ return torch.cat((

+ output,

+ torch.ones(output.shape[0], pad_len, device=device, dtype=output.dtype) * pad_token_id

+ ),

+ dim=1

+ )

+ return output

+

+ elif generation_type == "top_p":

+ logit_warper = GENERATION_TYPES[generation_type](top_p)

+ elif generation_type == "top_k":

+ logit_warper = GENERATION_TYPES[generation_type](top_k)

+ else:

+ raise ValueError(

+ f"generation_type has to be one of "

+ f"{'| ' + ' | '.join(list(GENERATION_TYPES.keys())) + ' |'}."

+ )

+

+ image_latent, image_embs = self._encode_image(image)

+

+ if text is None:

+ text = torch.ones((image.shape[0], 1), device=device, dtype=torch.long) * sot_token_id

+

+ was_training = self.training

+ num_dims = len(text.shape)

+

+ if num_dims == 1:

+ text = text[None, :]

+

+ self.eval()

+ out = text

+

+ while True:

+ x = out[:, -max_seq_len:]

+ cur_len = x.shape[1]

+ logits = self(

+ image,

+ x,

+ image_latent=image_latent,

+ image_embs=image_embs,

+ output_labels=False,

+ )["logits"][:, -1]

+ mask = (out[:, -1] == eos_token_id) | (out[:, -1] == pad_token_id)

+ sample = torch.ones((out.shape[0], 1), device=device, dtype=torch.long) * pad_token_id

+

+ if mask.all():

+ if not fixed_output_length:

+ break

+ else:

+ logits = logits[~mask, :]

+ filtered_logits = logit_processor(x[~mask, :], logits)

+ filtered_logits = logit_warper(x[~mask, :], filtered_logits)

+ probs = F.softmax(filtered_logits / temperature, dim=-1)

+

+ if (cur_len + 1 == seq_len):

+ sample[~mask, :] = torch.ones((sum(~mask), 1), device=device, dtype=torch.long) * eos_token_id

+ else:

+ sample[~mask, :] = torch.multinomial(probs, 1)

+

+ out = torch.cat((out, sample), dim=-1)

+

+ cur_len += 1

+

+ if all(stopping_criteria(out, None)):

+ break

+

+ if num_dims == 1:

+ out = out.squeeze(0)

+

+ self.train(was_training)

+ return out

+

+ def _generate_beamsearch(

+ self,

+ image_inputs,

+ pad_token_id=None,

+ eos_token_id=None,

+ sot_token_id=None,

+ num_beams=6,

+ num_beam_groups=3,

+ min_seq_len=5,

+ stopping_criteria=None,

+ logit_processor=None,

+ logit_warper=None,

+ ):

+ device = image_inputs.device

+ batch_size = image_inputs.shape[0]

+ image_inputs = torch.repeat_interleave(image_inputs, num_beams, dim=0)

+ image_latent, image_embs = self._encode_image(image_inputs)

+

+ input_ids = torch.ones((batch_size * num_beams, 1), device=device, dtype=torch.long)

+ input_ids = input_ids * sot_token_id

+ beam_scorer = BeamSearchScorer(

+ batch_size=batch_size,

+ num_beams=num_beams,

+ device=device,

+ num_beam_groups=num_beam_groups,

+ )

+ # instantiate logits processors

+ logits_processor = (

+ LogitsProcessorList([MinLengthLogitsProcessor(min_seq_len, eos_token_id=eos_token_id)])

+ if logit_processor is None

+ else logit_processor

+ )

+

+ num_beams = beam_scorer.num_beams

+ num_beam_groups = beam_scorer.num_beam_groups

+ num_sub_beams = num_beams // num_beam_groups

+ batch_size = len(beam_scorer._beam_hyps) // num_beam_groups

+ batch_beam_size, cur_len = input_ids.shape

+ beam_indices = None

+

+ if num_beams * batch_size != batch_beam_size:

+ raise ValueError(

+ f"Batch dimension of `input_ids` should be {num_beams * batch_size}, but is {batch_beam_size}."

+ )

+

+ beam_scores = torch.full((batch_size, num_beams), -1e9, dtype=torch.float, device=device)

+ # initialise score of first beam of each group with 0 and the rest with 1e-9. This ensures that the beams in

+ # the same group don't produce same tokens everytime.

+ beam_scores[:, ::num_sub_beams] = 0

+ beam_scores = beam_scores.view((batch_size * num_beams,))

+

+ while True:

+

+ # predicted tokens in cur_len step

+ current_tokens = torch.zeros(batch_size * num_beams, dtype=input_ids.dtype, device=device)

+

+ # indices which will form the beams in the next time step

+ reordering_indices = torch.zeros(batch_size * num_beams, dtype=torch.long, device=device)

+

+ # do one decoder step on all beams of all sentences in batch

+ model_inputs = prepare_inputs_for_generation(input_ids=input_ids, image_inputs=image_inputs)

+ outputs = self(

+ model_inputs['images'],

+ model_inputs['text'],

+ image_latent=image_latent,

+ image_embs=image_embs,

+ output_labels=False,

+ )

+

+ for beam_group_idx in range(num_beam_groups):

+ group_start_idx = beam_group_idx * num_sub_beams

+ group_end_idx = min(group_start_idx + num_sub_beams, num_beams)

+ group_size = group_end_idx - group_start_idx

+

+ # indices of beams of current group among all sentences in batch

+ batch_group_indices = []

+

+ for batch_idx in range(batch_size):

+ batch_group_indices.extend(

+ [batch_idx * num_beams + idx for idx in range(group_start_idx, group_end_idx)]

+ )

+ group_input_ids = input_ids[batch_group_indices]

+

+ # select outputs of beams of currentg group only

+ next_token_logits = outputs['logits'][batch_group_indices, -1, :]

+ vocab_size = next_token_logits.shape[-1]

+

+ next_token_scores_processed = logits_processor(

+ group_input_ids, next_token_logits, current_tokens=current_tokens, beam_group_idx=beam_group_idx

+ )

+ next_token_scores = next_token_scores_processed + beam_scores[batch_group_indices].unsqueeze(-1)

+ next_token_scores = next_token_scores.expand_as(next_token_scores_processed)

+

+ # reshape for beam search

+ next_token_scores = next_token_scores.view(batch_size, group_size * vocab_size)

+

+ next_token_scores, next_tokens = torch.topk(

+ next_token_scores, 2 * group_size, dim=1, largest=True, sorted=True

+ )

+

+ next_indices = torch.div(next_tokens, vocab_size, rounding_mode="floor")

+ next_tokens = next_tokens % vocab_size

+

+ # stateless

+ process_beam_indices = sum(beam_indices, ()) if beam_indices is not None else None

+ beam_outputs = beam_scorer.process(

+ group_input_ids,

+ next_token_scores,

+ next_tokens,

+ next_indices,

+ pad_token_id=pad_token_id,

+ eos_token_id=eos_token_id,

+ beam_indices=process_beam_indices,

+ group_index=beam_group_idx,

+ )

+ beam_scores[batch_group_indices] = beam_outputs["next_beam_scores"]

+ beam_next_tokens = beam_outputs["next_beam_tokens"]

+ beam_idx = beam_outputs["next_beam_indices"]

+

+ input_ids[batch_group_indices] = group_input_ids[beam_idx]

+ group_input_ids = torch.cat([group_input_ids[beam_idx, :], beam_next_tokens.unsqueeze(-1)], dim=-1)

+ current_tokens[batch_group_indices] = group_input_ids[:, -1]

+

+ # (beam_idx // group_size) -> batch_idx

+ # (beam_idx % group_size) -> offset of idx inside the group

+ reordering_indices[batch_group_indices] = (

+ num_beams * torch.div(beam_idx, group_size, rounding_mode="floor") + group_start_idx + (beam_idx % group_size)

+ )

+

+ input_ids = torch.cat([input_ids, current_tokens.unsqueeze(-1)], dim=-1)

+

+ # increase cur_len

+ cur_len = cur_len + 1

+ if beam_scorer.is_done or all(stopping_criteria(input_ids, None)):

+ break

+

+ final_beam_indices = sum(beam_indices, ()) if beam_indices is not None else None

+ sequence_outputs = beam_scorer.finalize(

+ input_ids,

+ beam_scores,

+ next_tokens,

+ next_indices,

+ pad_token_id=pad_token_id,

+ eos_token_id=eos_token_id,

+ max_length=stopping_criteria.max_length,

+ beam_indices=final_beam_indices,

+ )

+ return sequence_outputs['sequences']

+

+

+def prepare_inputs_for_generation(input_ids, image_inputs, past=None, **kwargs):

+ if past:

+ input_ids = input_ids[:, -1].unsqueeze(-1)

+

+ attention_mask = kwargs.get("attention_mask", None)

+ position_ids = kwargs.get("position_ids", None)

+

+ if attention_mask is not None and position_ids is None:

+ # create position_ids on the fly for batch generation

+ position_ids = attention_mask.long().cumsum(-1) - 1

+ position_ids.masked_fill_(attention_mask == 0, 1)

+ else:

+ position_ids = None

+ return {

+ "text": input_ids,

+ "images": image_inputs,

+ "past_key_values": past,

+ "position_ids": position_ids,

+ "attention_mask": attention_mask,

+ }

diff --git a/src/open_clip/constants.py b/src/open_clip/constants.py

new file mode 100644

index 0000000000000000000000000000000000000000..5bdfc2451286e448b98c45392de6b2cc03292ca0

--- /dev/null

+++ b/src/open_clip/constants.py

@@ -0,0 +1,11 @@

+OPENAI_DATASET_MEAN = (0.48145466, 0.4578275, 0.40821073)

+OPENAI_DATASET_STD = (0.26862954, 0.26130258, 0.27577711)

+IMAGENET_MEAN = (0.485, 0.456, 0.406)

+IMAGENET_STD = (0.229, 0.224, 0.225)

+INCEPTION_MEAN = (0.5, 0.5, 0.5)

+INCEPTION_STD = (0.5, 0.5, 0.5)

+

+# Default name for a weights file hosted on the Huggingface Hub.

+HF_WEIGHTS_NAME = "open_clip_pytorch_model.bin" # default pytorch pkl

+HF_SAFE_WEIGHTS_NAME = "open_clip_model.safetensors" # safetensors version

+HF_CONFIG_NAME = 'open_clip_config.json'