Running on CPU Upgrade

Commit a6bdbe4 · Initial public commit

This view is limited to 50 files because it contains too many changes.

- .dockerignore +6 -0

- .gitattributes +38 -0

- .gitignore +6 -0

- Dockerfile +58 -0

- README.md +159 -0

- __init__.py +14 -0

- app.py +86 -0

- auth.py +77 -0

- cache.py +67 -0

- cache_archive.zip +3 -0

- evaluation.py +69 -0

- frontend/package-lock.json +0 -0

- frontend/package.json +31 -0

- frontend/public/assets/ai_headshot.svg +1 -0

- frontend/public/assets/alex.avif +0 -0

- frontend/public/assets/alex.mp4 +3 -0

- frontend/public/assets/alex_300.avif +0 -0

- frontend/public/assets/alex_fhir.json +246 -0

- frontend/public/assets/gemini.avif +0 -0

- frontend/public/assets/jason_fhir.json +167 -0

- frontend/public/assets/jordan.avif +0 -0

- frontend/public/assets/jordan.mp4 +3 -0

- frontend/public/assets/jordan_300.avif +0 -0

- frontend/public/assets/medgemma.avif +0 -0

- frontend/public/assets/patients_and_conditions.json +46 -0

- frontend/public/assets/sacha.avif +0 -0

- frontend/public/assets/sacha.mp4 +3 -0

- frontend/public/assets/sacha_150.avif +0 -0

- frontend/public/assets/sacha_fhir.json +266 -0

- frontend/public/assets/welcome_bottom_graphics.svg +1 -0

- frontend/public/assets/welcome_graphics.svg +1 -0

- frontend/public/assets/welcome_top_graphics.svg +1 -0

- frontend/public/index.html +29 -0

- frontend/src/App.js +88 -0

- frontend/src/components/DetailsPopup/DetailsPopup.css +46 -0

- frontend/src/components/DetailsPopup/DetailsPopup.js +76 -0

- frontend/src/components/Interview/Interview.css +282 -0

- frontend/src/components/Interview/Interview.js +491 -0

- frontend/src/components/PatientBuilder/PatientBuilder.css +205 -0

- frontend/src/components/PatientBuilder/PatientBuilder.js +235 -0

- frontend/src/components/PreloadImages.js +42 -0

- frontend/src/components/RolePlayDialogs/RolePlayDialogs.css +101 -0

- frontend/src/components/RolePlayDialogs/RolePlayDialogs.js +103 -0

- frontend/src/components/WelcomePage/WelcomePage.css +144 -0

- frontend/src/components/WelcomePage/WelcomePage.js +62 -0

- frontend/src/index.js +23 -0

- frontend/src/shared/Style.css +205 -0

- gemini.py +59 -0

- gemini_tts.py +212 -0

- interview_simulator.py +385 -0

.dockerignore

ADDED

```diff
@@ -0,0 +1,6 @@
+frontend/build
+frontend/node_modules
+env.list
+__pycache__
+**/__pycache__
+.*
```

.gitattributes

ADDED

```diff
@@ -0,0 +1,38 @@
+*.7z filter=lfs diff=lfs merge=lfs -text
+*.arrow filter=lfs diff=lfs merge=lfs -text
+*.bin filter=lfs diff=lfs merge=lfs -text
+*.bz2 filter=lfs diff=lfs merge=lfs -text
+*.ckpt filter=lfs diff=lfs merge=lfs -text
+*.ftz filter=lfs diff=lfs merge=lfs -text
+*.gz filter=lfs diff=lfs merge=lfs -text
+*.h5 filter=lfs diff=lfs merge=lfs -text
+*.joblib filter=lfs diff=lfs merge=lfs -text
+*.lfs.* filter=lfs diff=lfs merge=lfs -text
+*.mlmodel filter=lfs diff=lfs merge=lfs -text
+*.model filter=lfs diff=lfs merge=lfs -text
+*.msgpack filter=lfs diff=lfs merge=lfs -text
+*.npy filter=lfs diff=lfs merge=lfs -text
+*.npz filter=lfs diff=lfs merge=lfs -text
+*.onnx filter=lfs diff=lfs merge=lfs -text
+*.ot filter=lfs diff=lfs merge=lfs -text
+*.parquet filter=lfs diff=lfs merge=lfs -text
+*.pb filter=lfs diff=lfs merge=lfs -text
+*.pickle filter=lfs diff=lfs merge=lfs -text
+*.pkl filter=lfs diff=lfs merge=lfs -text
+*.pt filter=lfs diff=lfs merge=lfs -text
+*.pth filter=lfs diff=lfs merge=lfs -text
+*.rar filter=lfs diff=lfs merge=lfs -text
+*.safetensors filter=lfs diff=lfs merge=lfs -text
+saved_model/**/* filter=lfs diff=lfs merge=lfs -text
+*.tar.* filter=lfs diff=lfs merge=lfs -text
+*.tar filter=lfs diff=lfs merge=lfs -text
+*.tflite filter=lfs diff=lfs merge=lfs -text
+*.tgz filter=lfs diff=lfs merge=lfs -text
+*.wasm filter=lfs diff=lfs merge=lfs -text
+*.xz filter=lfs diff=lfs merge=lfs -text
+*.zip filter=lfs diff=lfs merge=lfs -text
+*.zst filter=lfs diff=lfs merge=lfs -text
+*tfevents* filter=lfs diff=lfs merge=lfs -text
+frontend/public/assets/jordan.mp4 filter=lfs diff=lfs merge=lfs -text
+frontend/public/assets/sacha.mp4 filter=lfs diff=lfs merge=lfs -text
+frontend/public/assets/alex.mp4 filter=lfs diff=lfs merge=lfs -text
```
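In the file list above, `cache_archive.zip` and the three `.mp4` avatars each show `+3 -0`, which is consistent with them being committed as Git LFS pointer files (a pointer is three lines: `version`, `oid`, `size`) because of these `.gitattributes` rules. The Python sketch below approximates which paths the rules route through LFS. It is an approximation under stated assumptions, not git's matcher: `is_lfs_tracked` is a hypothetical helper, it checks only a subset of the patterns, and it relies on `fnmatch`, whose `*` also matches `/` and which gives `**` no special meaning. For an authoritative answer, `git check-attr filter -- <path>` asks git directly.

```python
import fnmatch
import posixpath
from typing import Iterable

# A subset of the patterns committed in .gitattributes (all map to filter=lfs).
LFS_PATTERNS = (
    "*.zip",
    "*.safetensors",
    "*tfevents*",
    "frontend/public/assets/jordan.mp4",
    "frontend/public/assets/sacha.mp4",
    "frontend/public/assets/alex.mp4",
)


def is_lfs_tracked(path: str, patterns: Iterable[str] = LFS_PATTERNS) -> bool:
    """Approximate gitattributes matching for the pattern shapes used here.

    Patterns without a slash are compared with the basename, so they apply at
    any directory depth. Patterns containing a slash are compared with the
    whole repository-relative path. Unlike git, fnmatch lets '*' match '/'
    and treats '**' like '*'.
    """
    basename = posixpath.basename(path)
    for pattern in patterns:
        target = path if "/" in pattern else basename
        if fnmatch.fnmatchcase(target, pattern):
            return True
    return False
```

Keeping slash-free patterns on the basename mirrors the gitignore-style rule that such patterns match at any level, which is why `*.zip` catches `cache_archive.zip` wherever it sits.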

.gitignore

ADDED

```diff
@@ -0,0 +1,6 @@
+env.list
+__pycache__
+**/__pycache__
+frontend/node_modules
+frontend/build
+.git
```

Dockerfile

ADDED

```diff
@@ -0,0 +1,58 @@
+# Copyright 2025 Google LLC
+#
+# Licensed under the Apache License, Version 2.0 (the "License");
+# you may not use this file except in compliance with the License.
+# You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing, software
+# distributed under the License is distributed on an "AS IS" BASIS,
+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+# See the License for the specific language governing permissions and
+# limitations under the License.
+
+# Build React app
+FROM node:24-slim AS frontend-build
+WORKDIR /app/frontend
+# Upgrade npm to the desired version
+RUN npm install -g npm@11.4.x
+COPY frontend/ ./
+RUN npm install
+RUN npm run build
+
+# Python backend
+FROM python:3.10-slim
+WORKDIR /app
+
+# Install ffmpeg for audio conversion
+RUN apt-get update && apt-get install -y ffmpeg && rm -rf /var/lib/apt/lists/*
+
+# Copy requirements.txt first for better caching
+COPY requirements.txt ./
+RUN pip install --no-cache-dir -r requirements.txt
+
+# Copy Flask app
+COPY *.py ./
+COPY symptoms.json ./
+COPY report_template.txt ./
+
+# Copy built React app
+COPY --from=frontend-build /app/frontend/build ./frontend/build
+ENV FRONTEND_BUILD=/app/frontend/build
+
+# Create cache directory and set permissions, then assign the env variable
+RUN mkdir -p /cache && chmod 777 /cache
+ENV CACHE_DIR=/cache
+
+# If cache.zip exists, extract it into /cache
+COPY cache* /tmp/
+RUN if [ -f /tmp/cache_archive.zip ]; then \
+    apt-get update && apt-get install -y unzip && \
+    unzip /tmp/cache_archive.zip -d /cache && \
+    rm /tmp/cache_archive.zip && \
+    chmod -R 777 /cache; \
+    fi
+
+EXPOSE 7860
+CMD ["gunicorn", "-b", "0.0.0.0:7860", "app:app", "--threads", "4", "--timeout", "300"]
```
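The Dockerfile seeds the cache by copying `cache*` into `/tmp/` and unzipping `/tmp/cache_archive.zip` into `/cache` only if that archive exists. When running the backend outside the container, the same guard can be mirrored in Python. A minimal sketch, assuming you want identical "extract only if present" behaviour; `extract_cache_archive` is a hypothetical helper, and unlike the Dockerfile it neither deletes the archive afterwards nor applies `chmod -R 777`.

```python
import os
import zipfile


def extract_cache_archive(archive_path: str, cache_dir: str) -> bool:
    """Extract archive_path into cache_dir if the archive exists.

    Mirrors the Dockerfile's `if [ -f ... ]` guard: a missing archive is not
    an error. Returns True when an archive was extracted.
    """
    if not os.path.isfile(archive_path):
        return False
    os.makedirs(cache_dir, exist_ok=True)
    with zipfile.ZipFile(archive_path) as archive:
        # zipfile strips absolute paths and ".." components from member names,
        # but only trusted archives should be extracted.
        archive.extractall(cache_dir)
    return True
```

For example, calling `extract_cache_archive("cache_archive.zip", os.environ.get("CACHE_DIR", "/cache"))` before starting the app would populate the directory that `cache.py` opens with `Cache(os.environ.get("CACHE_DIR", "/cache"))`.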

README.md

ADDED

|

@@ -0,0 +1,159 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: Appoint Ready - MedGemma Demo

|

| 3 |

+

emoji: 📋

|

| 4 |

+

colorFrom: blue

|

| 5 |

+

colorTo: gray

|

| 6 |

+

sdk: docker

|

| 7 |

+

models:

|

| 8 |

+

- google/medgemma-27b-text-it

|

| 9 |

+

pinned: false

|

| 10 |

+

license: apache-2.0

|

| 11 |

+

short_description: 'Simulated Pre-visit Intake Demo built using MedGemma Demo'

|

| 12 |

+

---

|

| 13 |

+

|

| 14 |

+

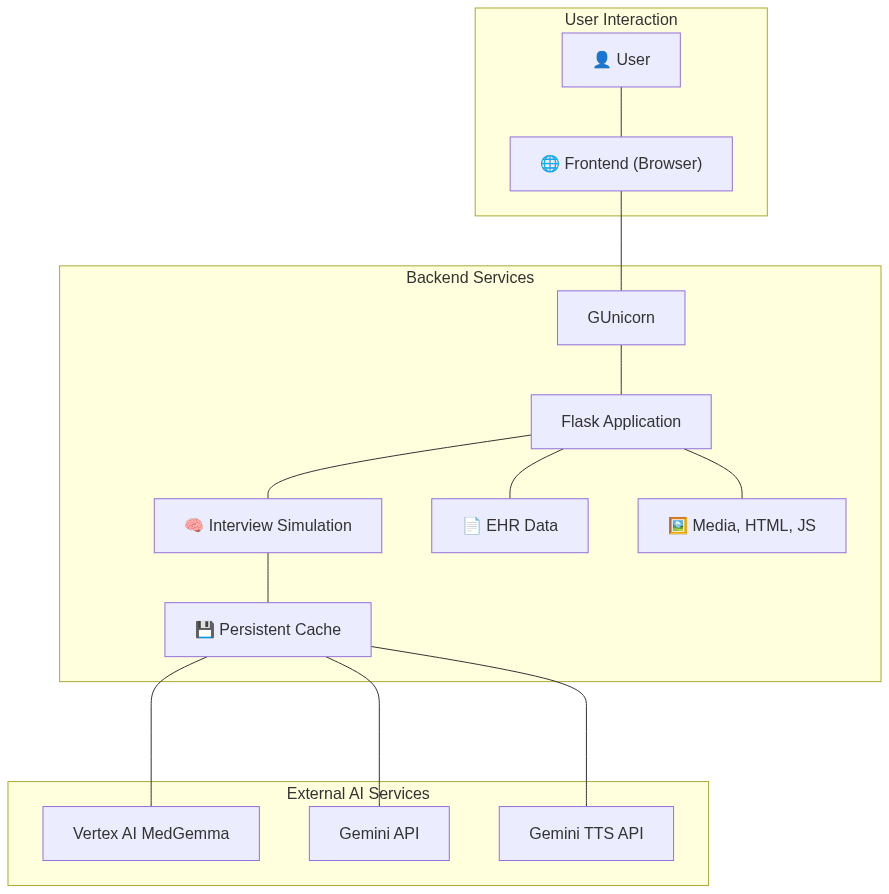

## Table of Contents

|

| 15 |

+

- [Demo Description](#demo-description)

|

| 16 |

+

- [Technical Architecture](#technical-architecture)

|

| 17 |

+

- [Running the Demo Locally](#running-the-demo-locally)

|

| 18 |

+

- [Models used](#models-used)

|

| 19 |

+

- [Caching](#caching)

|

| 20 |

+

- [Disclaimer](#disclaimer)

|

| 21 |

+

- [Other Models and Demos](#other-models-and-demos)

|

| 22 |

+

|

| 23 |

+

# AppointReady: Simulated Pre-visit Intake Demo built using MedGemma

|

| 24 |

+

|

| 25 |

+

Healthcare providers often seek efficient ways to gather comprehensive patient information before appointments. This demo illustrates how MedGemma could be used in an application to streamline pre-visit information collection and utilization.

|

| 26 |

+

|

| 27 |

+

The demonstration first asks questions to gather pre-visit information.

|

| 28 |

+

After it has identified and collected relevant information, the demo application generates a pre-visit report using both collected and health record information (stored as FHIR resources for this demonstration). This type of intelligent pre-visit report can help providers be more efficient and effective while also providing an improved experience for patients compared to traditional intake forms.

|

| 29 |

+

|

| 30 |

+

At the conclusion of the demo, you can view an evaluation of the pre-visit report which provides additional insights into the quality of the demonstrated capabilities. For this evaluation, MedGemma is provided the patient's reference diagnosis, allowing MedGemma to create a self-evaluation report highlighting strengths as well as areas for improvement.

|

| 31 |

+

|

| 32 |

+

## Technical Architecture

|

| 33 |

+

This application is composed of several key components:

|

| 34 |

+

|

| 35 |

+

* **Frontend**: A web interface built with React that provides the user interface for the chat and report visualization.

|

| 36 |

+

* **Backend**: A Python server built with Gunicorn/Flask that handles the application logic. It communicates with the LLMs, manages the conversation flow, and generates the final pre-visit report.

|

| 37 |

+

* **API called**:

|

| 38 |

+

* **MedGemma**: Acts as the clinical assistant, asking relevant questions and summarizing information.

|

| 39 |

+

* **Gemini**: Role-plays as the patient, providing responses based on a predefined scenario.

|

| 40 |

+

* **Gemini TTS**: Generative text-to-speech model.

|

| 41 |

+

* **Deployment**: The entire application is containerized using Docker for easy deployment and scalability.

|

| 42 |

+

|

| 43 |

+

A high-level overview of the architecture:

|

| 44 |

+

|

| 45 |

+

[](https://mermaid.live/edit#pako:eNqFk81u00AQx19ltVIlkJzIdpM69i1NShpEpQqnHMAcFnvTrGqvrfW6DUS5cUTiS-IKByRegQPileARmN1N7DgE4Yt3_vObnfHMeIXjPKE4wNeCFAs0G0ccwXN0hMZ0zjhFE5FXRWnUsnphsAhflVSgKZdUkFiynEfYIOpRvmcR_v35_Vd9jvDzxvlA5BDFEw28eVfb6N6pyO-Avl_joEb8r8ynJL5RfEjFLYtpuZt5csVZnAsOl2-P7eQpKW_Aqd9oWBQpi4kpf4fSn3XL6F3IsirVfl3tty-NCzW-VuyIxAuq6Q8_0SUVJSvh66TRW-TZ-eMxkUSzH18rEym7xYQSMsTDsqSy1OCnH7--v0UXNGHEQuezi0cWehj-p2FnS6iZkxQNpweb9oQKSZfDKSQwRwVCignNsnY5oDDOhpcKNWcExgFkNgsbBIwWtlNls2ajnHOqN2mza3rBOp1OvSBGrtdFueoZ893hmyg14U2IHrYSDwx2H9lMZV_eHYTxHbhLk2bQvN4FLW5bvKd3mo7-wwO9wxb8myzBgRQVtXBGRUaUiVcqJsJyQTPYrACOCZ2TKpVquGsIKwh_mufZNhJ-5OsFDuYkLcGqioRIOmYE1iSrVQGtpWKUV1zioNf39SU4WOElDhzH7_quNzhxB57XcwZuz8IvQe47XdexvZ5r9zzH953-2sKvdF67Ozju2_aJ43gD1_aOfW_9ByuAZrY)

|

| 46 |

+

|

| 47 |

+

<!--```mermaid

|

| 48 |

+

graph TD

|

| 49 |

+

%% Define Groups

|

| 50 |

+

subgraph "User Interaction"

|

| 51 |

+

User["👤 User"]

|

| 52 |

+

Frontend["🌐 Frontend (Browser)"]

|

| 53 |

+

end

|

| 54 |

+

|

| 55 |

+

subgraph "Backend Services"

|

| 56 |

+

GUnicorn["GUnicorn"]

|

| 57 |

+

Flask["Flask Application"]

|

| 58 |

+

InterviewSimulation["🧠 Interview Simulation"]

|

| 59 |

+

Cache["💾 Persistent Cache"]

|

| 60 |

+

EHRData["📄 EHR Data"]

|

| 61 |

+

StaticAssets["🖼️ Media, HTML, JS"]

|

| 62 |

+

end

|

| 63 |

+

|

| 64 |

+

subgraph "External AI Services"

|

| 65 |

+

VertexAI["Vertex AI MedGemma"]

|

| 66 |

+

GeminiAPI["Gemini API"]

|

| 67 |

+

GeminiTTS["Gemini TTS API"]

|

| 68 |

+

end

|

| 69 |

+

|

| 70 |

+

%% Define Connections

|

| 71 |

+

User --- Frontend

|

| 72 |

+

Frontend --- GUnicorn

|

| 73 |

+

GUnicorn --- Flask

|

| 74 |

+

Flask --- InterviewSimulation

|

| 75 |

+

Flask --- EHRData

|

| 76 |

+

Flask --- StaticAssets

|

| 77 |

+

InterviewSimulation --- Cache

|

| 78 |

+

Cache --- VertexAI

|

| 79 |

+

Cache --- GeminiAPI

|

| 80 |

+

Cache --- GeminiTTS

|

| 81 |

+

```-->

|

| 82 |

+

|

| 83 |

+

## Running the Demo Locally

|

| 84 |

+

|

| 85 |

+

To run this demo on your own machine, you'll need to have Docker installed.

|

| 86 |

+

|

| 87 |

+

### Prerequisites

|

| 88 |

+

* Docker

|

| 89 |

+

* Git

|

| 90 |

+

* A Google Cloud project with the Vertex AI API enabled.

|

| 91 |

+

|

| 92 |

+

### Setup & Configuration

|

| 93 |

+

1. **Clone the repository:**

|

| 94 |

+

```bash

|

| 95 |

+

git clone https://huggingface.co/spaces/google/appoint-ready

|

| 96 |

+

cd appoint-ready

|

| 97 |

+

```

|

| 98 |

+

|

| 99 |

+

2. **Configure environment variables:**

|

| 100 |

+

This project uses an `env.list` file for configuration, which is passed to Docker. Create this file in the root directory.

|

| 101 |

+

```ini

|

| 102 |

+

# env.list

|

| 103 |

+

GEMINI_API_KEY="your-gemini-token"

|

| 104 |

+

GENERATE_SPEECH=false or true

|

| 105 |

+

GCP_MEDGEMMA_ENDPOINT=medgemma vertex ai endpoint

|

| 106 |

+

GCP_MEDGEMMA_SERVICE_ACCOUNT_KEY="service-account-key json"

|

| 107 |

+

```

|

| 108 |

+

|

| 109 |

+

GEMINI_API_KEY: Key can be generated via [aistudio](https://aistudio.google.com/apikey).

|

| 110 |

+

GENERATE_SPEECH: Should the demo generate speech not found in cache. Default is false.

|

| 111 |

+

GCP_MEDGEMMA_ENDPOINT: Deploy MedGemma via [Model Garden](https://console.cloud.google.com/vertex-ai/publishers/google/model-garden/medgemma).

|

| 112 |

+

|

| 113 |

+

### Execution

|

| 114 |

+

1. **Build and run the Docker containers:**

|

| 115 |

+

```bash

|

| 116 |

+

run_local.sh

|

| 117 |

+

```

|

| 118 |

+

|

| 119 |

+

2. **Access the application:**

|

| 120 |

+

Once the containers are running, you can access the demo in your web browser at `http://localhost:[PORT]`. (e.g., `http://localhost:7860`).

|

| 121 |

+

|

| 122 |

+

# Models used

|

| 123 |

+

This demo uses four models:

|

| 124 |

+

|

| 125 |

+

* MedGemma 27b-text-it: https://huggingface.co/google/medgemma-27b-text-it \

|

| 126 |

+

For this demo MedGemma-27b was deployed via Model Garden (https://console.cloud.google.com/vertex-ai/publishers/google/model-garden/medgemma).

|

| 127 |

+

* Gemini: https://cloud.google.com/vertex-ai/generative-ai/docs/models/gemini/2-5-flash \

|

| 128 |

+

We use Gemini to role play the patient while MedGemma plays the clinical assistant.

|

| 129 |

+

* Gemini TTS: https://ai.google.dev/gemini-api/docs/models#gemini-2.5-flash-preview-tts \

|

| 130 |

+

As an alternative consider using Clould Text-to-Speech: https://cloud.google.com/text-to-speech

|

| 131 |

+

* Veo 3: Was used to generate patient avatar animation https://gemini.google/overview/video-generation

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

|

| 135 |

+

## Caching

|

| 136 |

+

This demo is functional, and results are persistently cached to reduce environmental impact.

|

| 137 |

+

|

| 138 |

+

## Disclaimer

|

| 139 |

+

This demonstration is for illustrative purposes only and does not represent a finished or approved

|

| 140 |

+

product. It is not representative of compliance to any regulations or standards for

|

| 141 |

+

quality, safety or efficacy. Any real-world application would require additional development,

|

| 142 |

+

training, and adaptation. The experience highlighted in this demo shows MedGemma's baseline

|

| 143 |

+

capability for the displayed task and is intended to help developers and users explore possible

|

| 144 |

+

applications and inspire further development.

|

| 145 |

+

|

| 146 |

+

This is not an officially supported Google product. This project is not

|

| 147 |

+

eligible for the [Google Open Source Software Vulnerability Rewards

|

| 148 |

+

Program](https://bughunters.google.com/open-source-security).

|

| 149 |

+

|

| 150 |

+

# Other Models and Demos

|

| 151 |

+

See other demos here: https://huggingface.co/collections/google/hai-def-concept-apps-6837acfccce400abe6ec26c1

|

| 152 |

+

|

| 153 |

+

MedGemma is finetunable - see colab here: https://github.com/Google-Health/medgemma/blob/main/notebooks/fine_tune_with_hugging_face.ipynb

|

| 154 |

+

|

| 155 |

+

# Contacts

|

| 156 |

+

|

| 157 |

+

* This demo is part of Google's [Health AI Developer Foundations (HAI-DEF)](https://developers.google.com/health-ai-developer-foundations?referral=appoint-ready)

|

| 158 |

+

* Technical info - contact [@lirony](https://huggingface.co/lirony)

|

| 159 |

+

* Press only: press@google.com

|
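The README documents four settings in `env.list`. Below is a sketch of how a backend might read and validate them, assuming they arrive as process environment variables (for example via `docker run --env-file env.list`). `AppConfig`, `load_config` and `REQUIRED` are hypothetical names; the repository modules that actually consume these variables are not part of this view. Two further assumptions: the three credentials are treated as required, and one pair of surrounding quotes is stripped, because Docker's `--env-file` passes values through literally, so `GEMINI_API_KEY="..."` may arrive with its quotes attached.

```python
import os
from dataclasses import dataclass
from typing import Mapping

# Assumption: these three settings are mandatory for a live (uncached) run.
REQUIRED = ("GEMINI_API_KEY", "GCP_MEDGEMMA_ENDPOINT", "GCP_MEDGEMMA_SERVICE_ACCOUNT_KEY")


def _clean(value: str) -> str:
    """Trim whitespace and one pair of matching surrounding quotes, if present."""
    value = value.strip()
    if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
        return value[1:-1]
    return value


@dataclass(frozen=True)
class AppConfig:  # hypothetical; not a class from this repository
    gemini_api_key: str
    generate_speech: bool
    medgemma_endpoint: str
    medgemma_service_account_key: str  # JSON text, the kind of input auth.create_credentials expects


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Build an AppConfig from the variables documented for env.list."""
    values = {name: _clean(env.get(name, "")) for name in REQUIRED}
    missing = [name for name, value in values.items() if not value]
    if missing:
        raise ValueError(f"Missing required settings: {', '.join(missing)}")
    return AppConfig(
        gemini_api_key=values["GEMINI_API_KEY"],
        # The README says GENERATE_SPEECH defaults to false.
        generate_speech=_clean(env.get("GENERATE_SPEECH", "false")).lower() in ("1", "true", "yes"),
        medgemma_endpoint=values["GCP_MEDGEMMA_ENDPOINT"],
        medgemma_service_account_key=values["GCP_MEDGEMMA_SERVICE_ACCOUNT_KEY"],
    )
```

Passing a plain dict instead of `os.environ` keeps the loader easy to test, and failing at startup with the list of missing names is usually friendlier than a credential error deep inside the first request.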

__init__.py

ADDED

```diff
@@ -0,0 +1,14 @@
+# Copyright 2025 Google LLC
+#
+# Licensed under the Apache License, Version 2.0 (the "License");
+# you may not use this file except in compliance with the License.
+# You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing, software
+# distributed under the License is distributed on an "AS IS" BASIS,
+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+# See the License for the specific language governing permissions and
+# limitations under the License.
+
```

app.py

ADDED

```diff
@@ -0,0 +1,86 @@
+# Copyright 2025 Google LLC
+#
+# Licensed under the Apache License, Version 2.0 (the "License");
+# you may not use this file except in compliance with the License.
+# You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing, software
+# distributed under the License is distributed on an "AS IS" BASIS,
+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+# See the License for the specific language governing permissions and
+# limitations under the License.
+
+from evaluation import evaluate_report, evaluation_prompt
+from flask import Flask, send_from_directory, request, jsonify, Response, stream_with_context, send_file
+from flask_cors import CORS
+import os, time, json, re
+from gemini import gemini_get_text_response
+from interview_simulator import stream_interview
+from cache import create_cache_zip
+from medgemma import medgemma_get_text_response
+
+app = Flask(__name__, static_folder=os.environ.get("FRONTEND_BUILD", "frontend/build"), static_url_path="/")
+CORS(app, resources={r"/api/*": {"origins": "http://localhost:3000"}})
+
+@app.route("/")
+def serve():
+    """Serves the main index.html file."""
+    return send_from_directory(app.static_folder, "index.html")
+
+
+@app.route("/api/stream_conversation", methods=["GET"])
+def stream_conversation():
+    """Streams the conversation with the interview simulator."""
+    patient = request.args.get("patient", "Patient")
+    condition = request.args.get("condition", "unknown condition")
+
+    def generate():
+        try:
+            for message in stream_interview(patient, condition):
+                yield f"data: {message}\n\n"
+        except Exception as e:
+            yield f"data: Error: {str(e)}\n\n"
+            raise e
+
+    return Response(stream_with_context(generate()), mimetype="text/event-stream")
+
+@app.route("/api/evaluate_report", methods=["POST"])
+def evaluate_report_call():
+    """Evaluates the provided medical report."""
+    data = request.get_json()
+    report = data.get("report", "")
+    if not report:
+        return jsonify({"error": "Report is required"}), 400
+    condition = data.get("condition", "")
+    if not condition:
+        return jsonify({"error": "Condition is required"}), 400
+
+    evaluation_text = evaluate_report(report, condition)
+
+    return jsonify({"evaluation": evaluation_text})
+
+
+@app.route("/api/download_cache")
+def download_cache_zip():
+    """Creates a zip file of the cache and returns it for download."""
+    zip_filepath, error = create_cache_zip()
+    if error:
+        return jsonify({"error": error}), 500
+    if not os.path.isfile(zip_filepath):
+        return jsonify({"error": f"File not found: {zip_filepath}"}), 404
+    return send_file(zip_filepath, as_attachment=True)
+
+
+@app.route("/<path:path>")
+def static_proxy(path):
+    """Serves static files and defaults to index.html for unknown paths."""
+    file_path = os.path.join(app.static_folder, path)
+    if os.path.isfile(file_path):
+        return send_from_directory(app.static_folder, path)
+    else:
+        return send_from_directory(app.static_folder, "index.html")
+
+if __name__ == "__main__":
+    app.run(host="0.0.0.0", port=7860, threaded=True)
```
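`/api/stream_conversation` returns a `text/event-stream` response in which each item from `stream_interview` is framed as `data: {message}` followed by a blank line, and an exception is reported as a final `data: Error: ...` frame. The sketch below is a minimal client-side parser that follows the Server-Sent Events framing rules. `iter_sse_data` is a hypothetical name, and the payload format that `stream_interview` yields lives in `interview_simulator.py`, which is not shown here.

```python
from typing import Iterable, Iterator, List


def iter_sse_data(lines: Iterable[str]) -> Iterator[str]:
    """Yield the data payload of each complete Server-Sent Events message.

    Consecutive "data:" lines are joined with newlines, a blank line
    dispatches the event, lines starting with ":" are comments, and other
    fields (event, id, retry) are ignored in this sketch. An event left
    unterminated at the end of the stream is discarded, as the SSE rules require.
    """
    buffer: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line:
            if buffer:
                yield "\n".join(buffer)
                buffer = []
            continue
        if line.startswith(":"):
            continue  # comment or keep-alive line
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single space after the colon is not part of the value
        if field == "data":
            buffer.append(value)
```

Fed with `requests.get(url, stream=True).iter_lines(decode_unicode=True)`, it would yield one payload per event. One design consequence worth noting: because the server writes `message` verbatim after `data: `, a message containing a newline would have its continuation lines parsed as unknown fields and dropped; JSON-encoding each message, or emitting one `data:` line per line of text, keeps the framing intact.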

auth.py

ADDED

```diff
@@ -0,0 +1,77 @@
+# Copyright 2025 Google LLC
+#
+# Licensed under the Apache License, Version 2.0 (the "License");
+# you may not use this file except in compliance with the License.
+# You may obtain a copy of the License at
+#
+# http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing, software
+# distributed under the License is distributed on an "AS IS" BASIS,
+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+# See the License for the specific language governing permissions and
+# limitations under the License.
+
+import json
+import datetime
+from google.oauth2 import service_account
+import google.auth.transport.requests
+
+def create_credentials(secret_key_json) -> service_account.Credentials:
+    """Creates Google Cloud credentials from the provided service account key.
+
+    Returns:
+        service_account.Credentials: The created credentials object.
+
+    Raises:
+        ValueError: If the environment variable is not set or is empty, or if the
+            JSON format is invalid.
+    """
+
+    if not secret_key_json:
+        raise ValueError("Userdata variable 'GCP_MEDGEMMA_SERVICE_ACCOUNT_KEY' is not set or is empty.")
+    try:
+        service_account_info = json.loads(secret_key_json)
+    except (SyntaxError, ValueError) as e:
+        raise ValueError("Invalid service account key JSON format.") from e
+    return service_account.Credentials.from_service_account_info(
+        service_account_info,
+        scopes=['https://www.googleapis.com/auth/cloud-platform']
+    )
+
+def refresh_credentials(credentials: service_account.Credentials) -> service_account.Credentials:
+    """Refreshes the provided Google Cloud credentials if they are about to expire
+    (within 5 minutes) or if they don't have an expiry time set.
+
+    Args:
+        credentials: The credentials object to refresh.
+
+    Returns:
+        service_account.Credentials: The refreshed credentials object.
+    """
+    if credentials.expiry:
+        expiry_time = credentials.expiry.replace(tzinfo=datetime.timezone.utc)
+        # Calculate the time remaining until expiration
+        time_remaining = expiry_time - datetime.datetime.now(datetime.timezone.utc)
+        # Check if the token is about to expire (e.g., within 5 minutes)
+        if time_remaining < datetime.timedelta(minutes=5):
+            request = google.auth.transport.requests.Request()
+            credentials.refresh(request)
+    else:
+        # If no expiry is set, always attempt to refresh (e.g., for certain credential types)
+        request = google.auth.transport.requests.Request()
+        credentials.refresh(request)
+    return credentials
+
+def get_access_token_refresh_if_needed(credentials: service_account.Credentials) -> str:
+    """Gets the access token from the credentials, refreshing them if needed.
+
+    Args:
+        credentials: The credentials object.
+
+    Returns:
+        str: The access token.
+    """
+    credentials = refresh_credentials(credentials)
+    return credentials.token
+
```
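The refresh rule in `auth.py` (refresh when no expiry is set, or when the token expires within five minutes) can be isolated as a pure function, which makes it testable without any network call. A sketch under that reading; `needs_refresh` and `REFRESH_MARGIN` are hypothetical names. Like `auth.py`, it treats a naive `expiry` as UTC, which matches google-auth keeping `Credentials.expiry` as a naive UTC datetime.

```python
import datetime
from typing import Optional

REFRESH_MARGIN = datetime.timedelta(minutes=5)  # same window as auth.py


def needs_refresh(expiry: Optional[datetime.datetime],
                  now: Optional[datetime.datetime] = None,
                  margin: datetime.timedelta = REFRESH_MARGIN) -> bool:
    """Return True when a token should be refreshed.

    No known expiry means "refresh"; otherwise refresh when fewer than
    `margin` remain. A naive `expiry` is interpreted as UTC.
    """
    if expiry is None:
        return True
    if expiry.tzinfo is None:
        expiry = expiry.replace(tzinfo=datetime.timezone.utc)
    if now is None:
        now = datetime.datetime.now(datetime.timezone.utc)
    return expiry - now < margin
```

`refresh_credentials` could then call `credentials.refresh(google.auth.transport.requests.Request())` only when `needs_refresh(credentials.expiry)` is true. If one credentials object is shared across gunicorn's `--threads 4`, wrapping the check-and-refresh in a lock would avoid several threads refreshing at once.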

cache.py

ADDED

@@ -0,0 +1,67 @@

# Copyright 2025 Google LLC
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

from diskcache import Cache
import os
import shutil
import tempfile
import zipfile
import logging

cache = Cache(os.environ.get("CACHE_DIR", "/cache"))
# Print cache statistics after loading
try:
    item_count = len(cache)
    size_bytes = cache.volume()
    print(f"Cache loaded: {item_count} items, approx {size_bytes} bytes")
except Exception as e:
    print(f"Could not retrieve cache statistics: {e}")

def create_cache_zip():
    temp_dir = tempfile.gettempdir()
    base_name = os.path.join(temp_dir, "cache_archive") # A more descriptive name
    archive_path = base_name + ".zip"
    cache_directory = os.environ.get("CACHE_DIR", "/cache")

    if not os.path.isdir(cache_directory):
        logging.error(f"Cache directory not found at {cache_directory}")
        return None, f"Cache directory not found on server: {cache_directory}"

    logging.info("Forcing a cache checkpoint for safe backup...")
    try:
        # Open and immediately close a connection.
        # This forces SQLite to perform a checkpoint, merging the .wal file
        # into the main .db file, ensuring the on-disk files are consistent.
        with Cache(cache_directory) as temp_cache:
            temp_cache.close()

        # Clean up temporary files before archiving.
        tmp_path = os.path.join(cache_directory, 'tmp')
        if os.path.isdir(tmp_path):
            logging.info(f"Removing temporary cache directory: {tmp_path}")
            shutil.rmtree(tmp_path)

        logging.info(f"Checkpoint complete. Creating zip archive of {cache_directory} to {archive_path}")
        with zipfile.ZipFile(archive_path, 'w', zipfile.ZIP_DEFLATED, compresslevel=9) as zipf:
            for root, _, files in os.walk(cache_directory):
                for file in files:
                    file_path = os.path.join(root, file)
                    arcname = os.path.relpath(file_path, cache_directory)
                    zipf.write(file_path, arcname)
        logging.info("Zip archive created successfully.")
        return archive_path, None

    except Exception as e:
        logging.error(f"Error creating zip archive of cache directory: {e}", exc_info=True)
        return None, f"Error creating zip archive: {e}"
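`create_cache_zip` stores every file under `CACHE_DIR` with a path relative to that directory, so the archive can be unpacked straight back into an empty cache directory. The repository also ships a `cache_archive.zip`. Restoring it into `CACHE_DIR` before `Cache(...)` is opened is an assumption here, because the Dockerfile and startup code are not part of this section. The sketch below mirrors the zipping loop and adds the inverse step using only the standard library. `zip_directory` and `restore_directory` are hypothetical names.

```python
import os
import tempfile
import zipfile


def zip_directory(src_dir: str, archive_path: str) -> None:
    """Zip every file under src_dir, storing paths relative to src_dir."""
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED, compresslevel=9) as zipf:
        for root, _, files in os.walk(src_dir):
            for name in files:
                file_path = os.path.join(root, name)
                zipf.write(file_path, os.path.relpath(file_path, src_dir))


def restore_directory(archive_path: str, dest_dir: str) -> list[str]:
    """Extract an archive produced by zip_directory into dest_dir.

    Returns the sorted member names so a caller can log what was restored.
    """
    os.makedirs(dest_dir, exist_ok=True)
    with zipfile.ZipFile(archive_path) as zipf:
        names = sorted(zipf.namelist())
        zipf.extractall(dest_dir)
    return names
```

The archive must be written outside the directory being walked, as `create_cache_zip` does by using the system temp directory. Otherwise `os.walk` would pick up the partially written zip. `extractall` is only appropriate for archives you produced yourself. The Python documentation warns against extracting untrusted archives without inspecting their member names first.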

cache_archive.zip

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:7c4735cc77e6df31539abaa76ef2389252ab6057d875bba8a30b09fdaa5f84e0
size 6150757
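`cache_archive.zip` is committed as a Git LFS pointer rather than as the zip itself. The pointer consists of three `key value` lines: the spec `version` URL, the object id as `sha256:<hex digest>`, and the object `size` in bytes. A minimal sketch of reading such a pointer and checking a downloaded blob against it follows. `parse_lfs_pointer` and `matches_pointer` are hypothetical helper names, and only the `sha256` oid form that appears here is handled.

```python
import hashlib


def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer file into its hash algorithm, digest and size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    if not fields.get("version", "").startswith("https://git-lfs.github.com/spec/"):
        raise ValueError("not a Git LFS pointer")
    algo, _, digest = fields["oid"].partition(":")
    return {"algo": algo, "oid": digest, "size": int(fields["size"])}


def matches_pointer(pointer: dict, data: bytes) -> bool:
    """True if data has the byte size and SHA-256 digest recorded in the pointer."""
    if pointer["algo"] != "sha256":
        raise ValueError(f"unsupported hash: {pointer['algo']}")
    return len(data) == pointer["size"] and hashlib.sha256(data).hexdigest() == pointer["oid"]
```

Applied to the pointer above, `parse_lfs_pointer` yields `{"algo": "sha256", "oid": "7c4735cc...", "size": 6150757}`. A checkout that contains only this small text file, rather than the 6,150,757-byte zip, indicates that `git lfs pull` has not fetched the object yet.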

evaluation.py

ADDED

@@ -0,0 +1,69 @@

# Copyright 2025 Google LLC
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

import re
from medgemma import medgemma_get_text_response


def evaluation_prompt(defacto_condition):
    # Returns a detailed prompt for the LLM to evaluate a pre-visit report for a specific condition
    return f"""
Your role is to evaluate the helpfulness of a pre-visit report, which is based on a pre-visit patient interview and existing health records.

The patient was de facto diagnosed with the condition "{defacto_condition}", which was not known at the time of the interview.

List the specific elements in the pre-visit report text that are helpful or necessary for the PCP to diagnose the de facto diagnosed condition: "{defacto_condition}".

This includes pertinent positives or negatives.
List critical elements that are MISSING from the pre-visit report text that would have been helpful for the PCP to diagnose the de facto diagnosed condition.
This includes pertinent positives or negatives that were missing from the report.

(keep in mind that the condition "{defacto_condition}" was not known at the time)

The evaluation output should be in HTML format.

REPORT TEMPLATE START

<h3 class="helpful">Helpful Facts:</h3>

<h3 class="missing">What wasn't covered but would be helpful:</h3>

REPORT TEMPLATE END
"""

def evaluate_report(report, condition):
    """Evaluate the pre-visit report based on the condition using MedGemma LLM."""
    evaluation_text = medgemma_get_text_response([
        {
            "role": "system",
            "content": [
                {
                    "type": "text",
                    "text": f"{evaluation_prompt(condition)}"
                }
            ]
        },
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": f"Here is the report text:\n{report}"
                }
            ]
        },
    ])

    # Remove any LLM "thinking" blocks (special tokens sometimes present in output)
    evaluation_text = re.sub(r'<unused94>.*?<unused95>', '', evaluation_text, flags=re.DOTALL)

    return evaluation_text
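The cleanup step in `evaluate_report` depends on two regex details. The `.*?` is non-greedy, so each `<unused94>…<unused95>` pair is removed on its own and text between two blocks survives. `re.DOTALL` lets `.` match newlines, so a block can span several lines. The sketch below isolates that step so its behaviour can be checked without calling the model. `strip_thinking` is a hypothetical name; the pattern is the one used above.

```python
import re

# Same pattern as evaluate_report: non-greedy so each <unused94>...<unused95>
# pair is removed separately, DOTALL so a block may span several lines.
THINKING_BLOCK = re.compile(r"<unused94>.*?<unused95>", flags=re.DOTALL)


def strip_thinking(text: str) -> str:
    """Remove every complete thinking block from model output."""
    return THINKING_BLOCK.sub("", text)
```

For example, `strip_thinking("<unused94>a\nb<unused95>KEEP<unused94>c<unused95>!")` returns `"KEEP!"`. A greedy `.*` would instead delete everything from the first `<unused94>` to the last `<unused95>` and leave only `"!"`. An unterminated `<unused94>` with no closing token is left in place, because the pattern only matches complete pairs.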

frontend/package-lock.json

ADDED


The diff for this file is too large to render.


frontend/package.json

ADDED

@@ -0,0 +1,31 @@

{
  "name": "frontend",
  "version": "0.1.0",
  "private": true,
  "dependencies": {
    "diff": "^8.0.2",
    "html-react-parser": "^5.2.5",
    "marked": "^15.0.12",
    "react": "^18.2.0",
    "react-dom": "^18.2.0",
    "react-scripts": "^5.0.1",
    "@textea/json-viewer": "^3.2.1",
    "@mui/material": "^5.15.20"
  },
  "scripts": {
    "start": "cross-env NODE_OPTIONS=--openssl-legacy-provider react-scripts start",
    "build": "react-scripts build"
  },
  "browserslist": {
    "production": [
      ">0.2%",
      "not dead",
      "not op_mini all"
    ],
    "development": [
      "last 1 chrome version",
      "last 1 firefox version",
      "last 1 safari version"
    ]
  }
}

frontend/public/assets/ai_headshot.svg

ADDED


frontend/public/assets/alex.avif

ADDED


frontend/public/assets/alex.mp4

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:ae46c52980e7d7a176245a8f42e90c7386af502e2efed87721937a0e3c9e53ae
size 1122871

frontend/public/assets/alex_300.avif

ADDED


frontend/public/assets/alex_fhir.json

ADDED

@@ -0,0 +1,246 @@

[
  {
    "resourceType": "Patient",
    "id": "alex-sharma-63-female",
    "meta": {
      "profile": [
        "http://hl7.org/fhir/R4/StructureDefinition/Patient"
      ]
    },
    "text": {
      "status": "generated",
      "div": "<div xmlns=\"http://www.w3.org/1999/xhtml\"><p><b>Alex Sharma</b> (Female, 63)</p><p>Known Conditions: Diabetes</p></div>"
    },
    "identifier": [
      {
        "use": "usual",
        "type": {
          "coding": [
            {
              "system": "http://terminology.hl7.org/CodeSystem/v2-0203",
              "code": "MR",
              "display": "Medical record number"
            }
          ]
        },
        "system": "http://example.org/patients",
        "value": "PAT-2023-001"
      }
    ],
    "name": [
      {
        "use": "official",
        "family": "Sharma",
        "given": [
          "Alex"
        ]
      }
    ],
    "gender": "female",
    "birthDate": "1962-01-15",
    "deceasedBoolean": false
  },
  {
    "resourceType": "Encounter",
    "id": "encounter-diabetes-followup",
    "meta": {
      "profile": [
        "http://hl7.org/fhir/R4/StructureDefinition/Encounter"
      ]
    },
    "text": {
      "status": "generated",
      "div": "<div xmlns=\"http://www.w3.org/1999/xhtml\"><p><b>Diabetes Follow-up Visit</b> for Alex Sharma on 2024-03-10</p></div>"
    },
    "status": "finished",
    "class": {
      "system": "http://terminology.hl7.org/CodeSystem/v3-ActCode",
      "code": "AMB",
      "display": "Ambulatory"
    },
    "type": [
      {
        "coding": [
          {
            "system": "http://terminology.hl7.org/CodeSystem/v3-ActCode",
            "code": "FLD",
            "display": "Field"
          }
        ],
        "text": "Follow-up visit for Diabetes"
      }
    ],
    "subject": {
      "reference": "Patient/alex-sharma-63-female",
      "display": "Alex Sharma"
    },
    "period": {
      "start": "2024-03-10T10:00:00Z",
      "end": "2024-03-10T10:45:00Z"
    },
    "serviceProvider": {
      "reference": "Organization/example-org",
      "display": "Example Medical Center"
    }
  },
  {
    "resourceType": "Condition",
    "id": "condition-diabetes-mellitus",
    "meta": {
      "profile": [
        "http://hl7.org/fhir/R4/StructureDefinition/Condition"
      ]
    },
    "text": {
      "status": "generated",
      "div": "<div xmlns=\"http://www.w3.org/1999/xhtml\"><p><b>Diabetes Mellitus, Type 2</b> for Alex Sharma, diagnosed 2020-05-20</p></div>"
    },
    "clinicalStatus": {
      "coding": [
        {
          "system": "http://terminology.hl7.org/CodeSystem/condition-clinical",
          "code": "active",
          "display": "Active"
        }
      ]
    },
    "verificationStatus": {
      "coding": [
        {
          "system": "http://terminology.hl7.org/CodeSystem/condition-ver-status",
          "code": "confirmed",
          "display": "Confirmed"
        }
      ]
    },
    "category": [
      {
        "coding": [
          {