Commit 7d90e8e (0 parent(s))

Duplicate from VIPLab/Track-Anything

Co-authored-by: zhe li <watchtowerss@users.noreply.huggingface.co>

This view is limited to 50 files because it contains too many changes.

- .gitattributes +46 -0

- LICENSE +21 -0

- README.md +94 -0

- XMem-s012.pth +3 -0

- app.py +665 -0

- app_save.py +381 -0

- app_test.py +46 -0

- assets/avengers.gif +3 -0

- assets/demo_version_1.MP4 +3 -0

- assets/inpainting.gif +3 -0

- assets/poster_demo_version_1.png +0 -0

- assets/qingming.mp4 +3 -0

- assets/track-anything-logo.jpg +0 -0

- checkpoints/E2FGVI-HQ-CVPR22.pth +3 -0

- demo.py +87 -0

- images/groceries.jpg +0 -0

- images/mask_painter.png +0 -0

- images/painter_input_image.jpg +0 -0

- images/painter_input_mask.jpg +0 -0

- images/painter_output_image.png +0 -0

- images/painter_output_image__.png +0 -0

- images/point_painter.png +0 -0

- images/point_painter_1.png +0 -0

- images/point_painter_2.png +0 -0

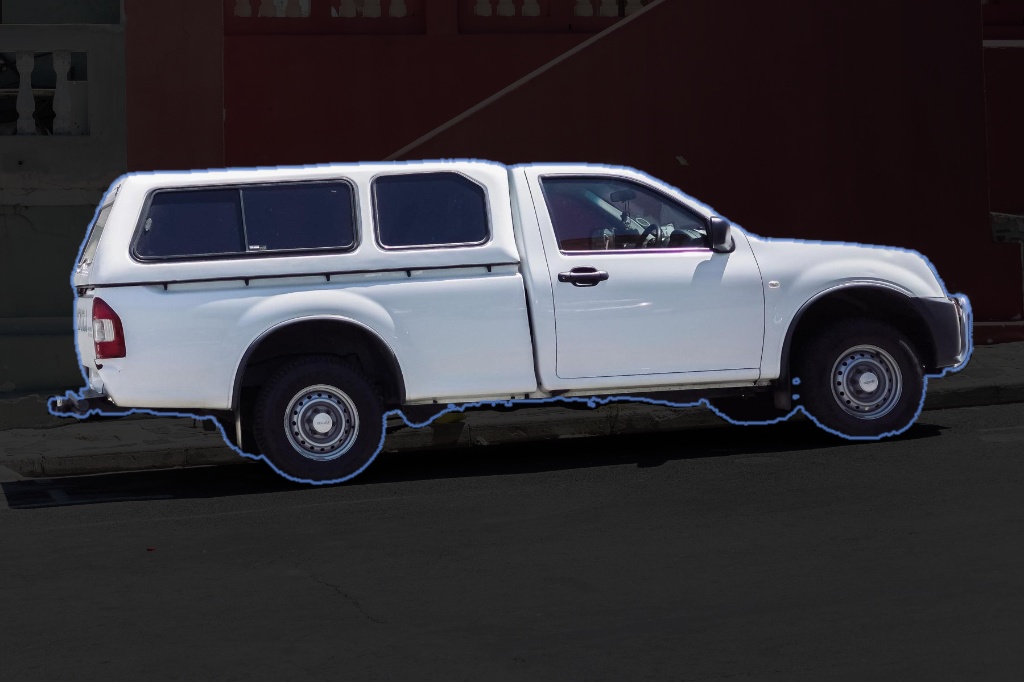

- images/truck.jpg +0 -0

- images/truck_both.jpg +0 -0

- images/truck_mask.jpg +0 -0

- images/truck_point.jpg +0 -0

- inpainter/.DS_Store +0 -0

- inpainter/base_inpainter.py +287 -0

- inpainter/config/config.yaml +4 -0

- inpainter/model/e2fgvi.py +350 -0

- inpainter/model/e2fgvi_hq.py +350 -0

- inpainter/model/modules/feat_prop.py +149 -0

- inpainter/model/modules/flow_comp.py +450 -0

- inpainter/model/modules/spectral_norm.py +288 -0

- inpainter/model/modules/tfocal_transformer.py +536 -0

- inpainter/model/modules/tfocal_transformer_hq.py +567 -0

- inpainter/util/__init__.py +0 -0

- inpainter/util/tensor_util.py +24 -0

- overleaf/.DS_Store +0 -0

- overleaf/Track Anything.zip +3 -0

- overleaf/Track Anything/figs/avengers_1.pdf +3 -0

- overleaf/Track Anything/figs/davisresults.pdf +3 -0

- overleaf/Track Anything/figs/failedcases.pdf +3 -0

- overleaf/Track Anything/figs/overview_4.pdf +0 -0

- overleaf/Track Anything/neurips_2022.bbl +105 -0

- overleaf/Track Anything/neurips_2022.bib +187 -0

- overleaf/Track Anything/neurips_2022.sty +381 -0

- overleaf/Track Anything/neurips_2022.tex +378 -0

.gitattributes

ADDED

@@ -0,0 +1,46 @@
+*.7z filter=lfs diff=lfs merge=lfs -text
+*.arrow filter=lfs diff=lfs merge=lfs -text
+*.bin filter=lfs diff=lfs merge=lfs -text
+*.bz2 filter=lfs diff=lfs merge=lfs -text
+*.ckpt filter=lfs diff=lfs merge=lfs -text
+*.ftz filter=lfs diff=lfs merge=lfs -text
+*.gz filter=lfs diff=lfs merge=lfs -text
+*.h5 filter=lfs diff=lfs merge=lfs -text
+*.joblib filter=lfs diff=lfs merge=lfs -text
+*.lfs.* filter=lfs diff=lfs merge=lfs -text
+*.mlmodel filter=lfs diff=lfs merge=lfs -text
+*.model filter=lfs diff=lfs merge=lfs -text
+*.msgpack filter=lfs diff=lfs merge=lfs -text
+*.npy filter=lfs diff=lfs merge=lfs -text
+*.npz filter=lfs diff=lfs merge=lfs -text
+*.onnx filter=lfs diff=lfs merge=lfs -text
+*.ot filter=lfs diff=lfs merge=lfs -text
+*.parquet filter=lfs diff=lfs merge=lfs -text
+*.pb filter=lfs diff=lfs merge=lfs -text
+*.pickle filter=lfs diff=lfs merge=lfs -text
+*.pkl filter=lfs diff=lfs merge=lfs -text
+*.pt filter=lfs diff=lfs merge=lfs -text
+*.pth filter=lfs diff=lfs merge=lfs -text
+*.rar filter=lfs diff=lfs merge=lfs -text
+*.safetensors filter=lfs diff=lfs merge=lfs -text
+saved_model/**/* filter=lfs diff=lfs merge=lfs -text
+*.tar.* filter=lfs diff=lfs merge=lfs -text
+*.tflite filter=lfs diff=lfs merge=lfs -text
+*.tgz filter=lfs diff=lfs merge=lfs -text
+*.wasm filter=lfs diff=lfs merge=lfs -text
+*.xz filter=lfs diff=lfs merge=lfs -text
+*.zip filter=lfs diff=lfs merge=lfs -text
+*.zst filter=lfs diff=lfs merge=lfs -text
+*tfevents* filter=lfs diff=lfs merge=lfs -text
+assets/demo_version_1.MP4 filter=lfs diff=lfs merge=lfs -text
+assets/inpainting.gif filter=lfs diff=lfs merge=lfs -text
+assets/qingming.mp4 filter=lfs diff=lfs merge=lfs -text
+test_sample/test-sample1.mp4 filter=lfs diff=lfs merge=lfs -text
+assets/avengers.gif filter=lfs diff=lfs merge=lfs -text
+overleaf/Track[[:space:]]Anything/figs/avengers_1.pdf filter=lfs diff=lfs merge=lfs -text
+overleaf/Track[[:space:]]Anything/figs/davisresults.pdf filter=lfs diff=lfs merge=lfs -text
+overleaf/Track[[:space:]]Anything/figs/failedcases.pdf filter=lfs diff=lfs merge=lfs -text
+test_sample/test-sample13.mp4 filter=lfs diff=lfs merge=lfs -text
+test_sample/test-sample4.mp4 filter=lfs diff=lfs merge=lfs -text
+test_sample/test-sample8.mp4 filter=lfs diff=lfs merge=lfs -text
+test_sample/huggingface_demo_operation.mp4 filter=lfs diff=lfs merge=lfs -text
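Reviewer note: the `.gitattributes` entries above route matching paths through Git LFS. The sketch below is one rough way to check which repository paths those rules would cover. It is an approximation only: `lfs_patterns` and `is_lfs_tracked` are hypothetical helper names, Python's `fnmatch` does not implement the full gitattributes pattern syntax (for example `**`), and `[[:space:]]` is simply treated as a literal space.

```python
from fnmatch import fnmatch

# A few rules copied from the .gitattributes hunk above.
SAMPLE = """\
*.pth filter=lfs diff=lfs merge=lfs -text
*.zip filter=lfs diff=lfs merge=lfs -text
assets/qingming.mp4 filter=lfs diff=lfs merge=lfs -text
overleaf/Track[[:space:]]Anything/figs/avengers_1.pdf filter=lfs diff=lfs merge=lfs -text
"""


def lfs_patterns(text):
    """Return the patterns whose attribute list contains filter=lfs."""
    patterns = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        pattern, *attrs = line.split()
        if "filter=lfs" in attrs:
            # Assumption: treat the escaped whitespace class as a plain space.
            patterns.append(pattern.replace("[[:space:]]", " "))
    return patterns


def is_lfs_tracked(path, patterns):
    """Approximate match: slash-free patterns test the basename, others the full path."""
    name = path.rsplit("/", 1)[-1]
    for pattern in patterns:
        target = path if "/" in pattern else name
        if fnmatch(target, pattern):
            return True
    return False


patterns = lfs_patterns(SAMPLE)
print(is_lfs_tracked("checkpoints/E2FGVI-HQ-CVPR22.pth", patterns))  # True
print(is_lfs_tracked("app.py", patterns))  # False
```

For an authoritative answer on a real checkout, `git check-attr filter -- <path>` reports the attribute Git itself resolves.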

LICENSE

ADDED

@@ -0,0 +1,21 @@
+MIT License
+
+Copyright (c) 2023 Mingqi Gao
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE.

README.md

ADDED

@@ -0,0 +1,94 @@
+---
+title: Track Anything
+emoji: 🐠
+colorFrom: purple
+colorTo: indigo
+sdk: gradio
+sdk_version: 3.27.0
+app_file: app.py
+pinned: false
+license: mit
+duplicated_from: VIPLab/Track-Anything
+---
+
+Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
+<!--  -->
+
+<div align=center>
+<img src="./assets/track-anything-logo.jpg"/>
+</div>
+<br/>
+<div align=center>
+<a src="https://img.shields.io/badge/%F0%9F%93%96-Open_in_Spaces-informational.svg?style=flat-square" href="https://arxiv.org/abs/2304.11968">
+<img src="https://img.shields.io/badge/%F0%9F%93%96-Arxiv_2304.11968-red.svg?style=flat-square">
+</a>
+<a src="https://img.shields.io/badge/%F0%9F%A4%97-Open_in_Spaces-informational.svg?style=flat-square" href="https://huggingface.co/spaces/watchtowerss/Track-Anything">
+<img src="https://img.shields.io/badge/%F0%9F%A4%97-Open_in_Spaces-informational.svg?style=flat-square">
+</a>
+<a src="https://img.shields.io/badge/%F0%9F%9A%80-SUSTech_VIP_Lab-important.svg?style=flat-square" href="https://zhengfenglab.com/">
+<img src="https://img.shields.io/badge/%F0%9F%9A%80-SUSTech_VIP_Lab-important.svg?style=flat-square">
+</a>
+</div>
+
+***Track-Anything*** is a flexible and interactive tool for video object tracking and segmentation. It is built on [Segment Anything](https://github.com/facebookresearch/segment-anything) and lets users specify anything to track and segment using clicks only. During tracking, users can change the objects they want to track, or correct the region of interest if there is any ambiguity. These characteristics make ***Track-Anything*** suitable for:
+- Video object tracking and segmentation with shot changes.
+- Visualized development and data annotation for video object tracking and segmentation.
+- Object-centric downstream video tasks, such as video inpainting and editing.
+
+<div align=center>
+<img src="./assets/avengers.gif"/>
+</div>
+
+<!-- ![avengers]() -->
+
+## :rocket: Updates
+- 2023/04/25: We are delighted to introduce [Caption-Anything](https://github.com/ttengwang/Caption-Anything) :writing_hand:, an inventive project from our lab that combines the capabilities of Segment Anything, Visual Captioning, and ChatGPT.
+
+- 2023/04/20: We deployed [[DEMO]](https://huggingface.co/spaces/watchtowerss/Track-Anything) on Hugging Face :hugs:!
+
+## Demo
+
+https://user-images.githubusercontent.com/28050374/232842703-8395af24-b13e-4b8e-aafb-e94b61e6c449.MP4
+
+### Multiple Object Tracking and Segmentation (with [XMem](https://github.com/hkchengrex/XMem))
+
+https://user-images.githubusercontent.com/39208339/233035206-0a151004-6461-4deb-b782-d1dbfe691493.mp4
+
+### Video Object Tracking and Segmentation with Shot Changes (with [XMem](https://github.com/hkchengrex/XMem))
+
+https://user-images.githubusercontent.com/30309970/232848349-f5e29e71-2ea4-4529-ac9a-94b9ca1e7055.mp4
+
+### Video Inpainting (with [E2FGVI](https://github.com/MCG-NKU/E2FGVI))
+
+https://user-images.githubusercontent.com/28050374/232959816-07f2826f-d267-4dda-8ae5-a5132173b8f4.mp4
+
+## Get Started
+#### Linux
+```bash
+# Clone the repository:
+git clone https://github.com/gaomingqi/Track-Anything.git
+cd Track-Anything
+
+# Install dependencies:
+pip install -r requirements.txt
+
+# Run the Track-Anything gradio demo.
+python app.py --device cuda:0 --sam_model_type vit_h --port 12212
+```
+
+## Citation
+If you find this work useful for your research or applications, please cite using this BibTeX:
+```bibtex
+@misc{yang2023track,
+      title={Track Anything: Segment Anything Meets Videos},
+      author={Jinyu Yang and Mingqi Gao and Zhe Li and Shang Gao and Fangjing Wang and Feng Zheng},
+      year={2023},
+      eprint={2304.11968},
+      archivePrefix={arXiv},
+      primaryClass={cs.CV}
+}
+```
+
+## Acknowledgements
+
+The project is based on [Segment Anything](https://github.com/facebookresearch/segment-anything), [XMem](https://github.com/hkchengrex/XMem), and [E2FGVI](https://github.com/MCG-NKU/E2FGVI). Thanks to the authors for their efforts.
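Reviewer note: the README's launch command passes `--device`, `--sam_model_type` and `--port` to `app.py`. The repository's own parser (`parse_augment`, imported from `track_anything`) is not part of this diff view, so the following is only a hedged sketch of how such flags could be parsed with `argparse`. The function name `build_parser` and the default values are assumptions taken from the README command, not the project's actual code.

```python
import argparse


def build_parser():
    """Hypothetical parser for the three flags shown in the README command."""
    parser = argparse.ArgumentParser(description="Track-Anything demo (illustrative sketch)")
    # Defaults mirror the README example; the real defaults may differ.
    parser.add_argument("--device", type=str, default="cuda:0")
    parser.add_argument("--sam_model_type", type=str, default="vit_h")
    parser.add_argument("--port", type=int, default=12212)
    return parser


# Parse the exact arguments from the README instead of reading sys.argv.
args = build_parser().parse_args(
    ["--device", "cuda:0", "--sam_model_type", "vit_h", "--port", "12212"]
)
print(args.device, args.sam_model_type, args.port)
```

Passing the argument list explicitly keeps the sketch runnable in a notebook or test; a real entry point would call `parse_args()` with no arguments.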

XMem-s012.pth

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:16205ad04bfc55b442bd4d7af894382e09868b35e10721c5afc09a24ea8d72d9
+size 249026057
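Reviewer note: the three lines above are a Git LFS pointer, not the checkpoint itself. The pointer records the spec version, the SHA-256 of the real file and its size in bytes. A minimal sketch of reading those fields follows; `parse_lfs_pointer` is a hypothetical helper written for illustration, and it assumes the simple `key value` layout visible in this hunk.

```python
def parse_lfs_pointer(text):
    """Split a Git LFS pointer into its version, hash algorithm, digest and size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


# Pointer content copied from the XMem-s012.pth hunk above.
POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:16205ad04bfc55b442bd4d7af894382e09868b35e10721c5afc09a24ea8d72d9
size 249026057
"""

info = parse_lfs_pointer(POINTER)
print(info["hash_algo"], f"{info['size'] / 1e6:.1f} MB")
```

After `git lfs pull`, the downloaded file's byte size and SHA-256 digest should match these values, which gives a cheap integrity check before loading the checkpoint.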

app.py

ADDED

@@ -0,0 +1,665 @@

+import gradio as gr
+import argparse
+import gdown
+import cv2
+import numpy as np
+import os
+import sys
+sys.path.append(sys.path[0]+"/tracker")
+sys.path.append(sys.path[0]+"/tracker/model")
+from track_anything import TrackingAnything
+from track_anything import parse_augment, save_image_to_userfolder, read_image_from_userfolder
+import requests
+import json
+import torchvision
+import torch
+from tools.painter import mask_painter
+import psutil
+import time
+# install mmcv on first run if it is missing
+try:
+    from mmcv.cnn import ConvModule
+except ImportError:
+    os.system("mim install mmcv")
+

+# download checkpoints
+def download_checkpoint(url, folder, filename):
+    os.makedirs(folder, exist_ok=True)
+    filepath = os.path.join(folder, filename)
+
+    if not os.path.exists(filepath):
+        print("Downloading checkpoints ...")
+        response = requests.get(url, stream=True)
+        # fail early instead of saving an HTTP error page as a checkpoint
+        response.raise_for_status()
+        with open(filepath, "wb") as f:
+            for chunk in response.iter_content(chunk_size=8192):
+                if chunk:
+                    f.write(chunk)
+
+        print("Downloaded successfully!")
+
+    return filepath
+
+def download_checkpoint_from_google_drive(file_id, folder, filename):
+    os.makedirs(folder, exist_ok=True)
+    filepath = os.path.join(folder, filename)
+
+    if not os.path.exists(filepath):
+        print("Downloading checkpoints from Google Drive... Tip: if you cannot see the progress bar, please download it manually "
+              "and put it in the checkpoints directory. E2FGVI-HQ-CVPR22.pth: https://github.com/MCG-NKU/E2FGVI (E2FGVI-HQ model)")
+        url = f"https://drive.google.com/uc?id={file_id}"
+        gdown.download(url, filepath, quiet=False)
+        print("Downloaded successfully!")
+
+    return filepath
+

+# convert points input to prompt state
+def get_prompt(click_state, click_input):
+    inputs = json.loads(click_input)
+    points = click_state[0]
+    labels = click_state[1]
+    # each click is [x, y, label]; the name avoids shadowing the builtin input()
+    for click in inputs:
+        points.append(click[:2])
+        labels.append(click[2])
+    click_state[0] = points
+    click_state[1] = labels
+    prompt = {
+        "prompt_type":["click"],
+        "input_point":click_state[0],
+        "input_label":click_state[1],
+        "multimask_output":"True",
+    }
+    return prompt
+
+
+

+# extract frames from upload video
+def get_frames_from_video(video_input, video_state):
+    """
+    Args:
+        video_input: str, path of the uploaded video
+        video_state: dict, per-session video state
+    Return
+        updated video_state, video info, first frame, and the gradio component updates
+    """
+    video_path = video_input
+    frames = [] # save image path
+    user_name = time.time()
+    video_state["video_name"] = os.path.split(video_path)[-1]
+    video_state["user_name"] = user_name
+
+    os.makedirs(os.path.join("/tmp/{}/originimages/{}".format(video_state["user_name"], video_state["video_name"])), exist_ok=True)
+    os.makedirs(os.path.join("/tmp/{}/paintedimages/{}".format(video_state["user_name"], video_state["video_name"])), exist_ok=True)
+    operation_log = [("",""),("Video uploaded. Click the image to add targets to track and inpaint.","Normal")]
+    try:
+        cap = cv2.VideoCapture(video_path)
+        fps = cap.get(cv2.CAP_PROP_FPS)
+        if not cap.isOpened():
+            operation_log = [("No frames extracted, please input a video file with a '.mp4' or '.mov' extension.", "Error")]
+            print("No frames extracted, please input a video file with a '.mp4' or '.mov' extension.")
+            return None, None, None, None, \
+                None, None, None, None, \
+                None, None, None, None, \
+                None, None, gr.update(visible=True, value=operation_log)
+        image_index = 0
+        while cap.isOpened():
+            ret, frame = cap.read()
+            if ret:
+                current_memory_usage = psutil.virtual_memory().percent
+
+                # try solve memory usage problem, save image to disk instead of memory
+                frames.append(save_image_to_userfolder(video_state, image_index, frame, True))
+                image_index +=1
+                # frames.append(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
+                if current_memory_usage > 90:
+                    operation_log = [("Memory usage is too high (>90%). Stop the video extraction. Please reduce the video resolution or frame rate.", "Error")]
+                    print("Memory usage is too high (>90%). Please reduce the video resolution or frame rate.")
+                    break
+            else:
+                break
+
+    except (OSError, TypeError, ValueError, KeyError, SyntaxError) as e:
+        # except:
+        operation_log = [("read_frame_source:{} error. {}\n".format(video_path, str(e)), "Error")]
+        print("read_frame_source:{} error. {}\n".format(video_path, str(e)))
+        return None, None, None, None, \
+            None, None, None, None, \
+            None, None, None, None, \
+            None, None, gr.update(visible=True, value=operation_log)
+    first_image = read_image_from_userfolder(frames[0])
+    image_size = (first_image.shape[0], first_image.shape[1])
+    # initialize video_state
+    video_state = {
+        "user_name": user_name,
+        "video_name": os.path.split(video_path)[-1],
+        "origin_images": frames,
+        "painted_images": frames.copy(),
+        "masks": [np.zeros((image_size[0], image_size[1]), np.uint8)]*len(frames),
+        "logits": [None]*len(frames),
+        "select_frame_number": 0,
+        "fps": fps
+        }
+    video_info = "Video Name: {}, FPS: {}, Total Frames: {}, Image Size:{}".format(video_state["video_name"], video_state["fps"], len(frames), image_size)
+    model.samcontroler.sam_controler.reset_image()
+    model.samcontroler.sam_controler.set_image(first_image)
+    return video_state, video_info, first_image, gr.update(visible=True, maximum=len(frames), value=1), \
+        gr.update(visible=True, maximum=len(frames), value=len(frames)), gr.update(visible=True), gr.update(visible=True), gr.update(visible=True), \
+        gr.update(visible=True), gr.update(visible=True), gr.update(visible=True), gr.update(visible=True), \
+        gr.update(visible=True), gr.update(visible=True), gr.update(visible=True, value=operation_log)
+

+def run_example(example):
+    return example
+# get the select frame from gradio slider
+def select_template(image_selection_slider, video_state, interactive_state):
+
+    # images = video_state[1]
+    image_selection_slider -= 1
+    video_state["select_frame_number"] = image_selection_slider
+
+    # once select a new template frame, set the image in sam
+
+    model.samcontroler.sam_controler.reset_image()
+    model.samcontroler.sam_controler.set_image(read_image_from_userfolder(video_state["origin_images"][image_selection_slider]))
+
+    # update the masks when select a new template frame
+    # if video_state["masks"][image_selection_slider] is not None:
+        # video_state["painted_images"][image_selection_slider] = mask_painter(video_state["origin_images"][image_selection_slider], video_state["masks"][image_selection_slider])
+    operation_log = [("",""), ("Select frame {}. Try click image and add mask for tracking.".format(image_selection_slider),"Normal")]
+
+    return read_image_from_userfolder(video_state["painted_images"][image_selection_slider]), video_state, interactive_state, operation_log
+
+# set the tracking end frame
+def get_end_number(track_pause_number_slider, video_state, interactive_state):
+    track_pause_number_slider -= 1
+    interactive_state["track_end_number"] = track_pause_number_slider
+    operation_log = [("",""),("Set the tracking finish at frame {}".format(track_pause_number_slider),"Normal")]
+
+    return read_image_from_userfolder(video_state["painted_images"][track_pause_number_slider]),interactive_state, operation_log
+
+def get_resize_ratio(resize_ratio_slider, interactive_state):
+    interactive_state["resize_ratio"] = resize_ratio_slider
+
+    return interactive_state
+

+# use sam to get the mask
+def sam_refine(video_state, point_prompt, click_state, interactive_state, evt:gr.SelectData):
+    """
+    Args:
+        video_state: dict holding the frame paths, masks and selected frame index
+        point_prompt: flag for positive or negative button click
+        click_state: [[points], [labels]]
+    """
+    if point_prompt == "Positive":
+        coordinate = "[[{},{},1]]".format(evt.index[0], evt.index[1])
+        interactive_state["positive_click_times"] += 1
+    else:
+        coordinate = "[[{},{},0]]".format(evt.index[0], evt.index[1])
+        interactive_state["negative_click_times"] += 1
+
+    # prompt for sam model
+    model.samcontroler.sam_controler.reset_image()
+    model.samcontroler.sam_controler.set_image(read_image_from_userfolder(video_state["origin_images"][video_state["select_frame_number"]]))
+    prompt = get_prompt(click_state=click_state, click_input=coordinate)
+
+    mask, logit, painted_image = model.first_frame_click(
+        image=read_image_from_userfolder(video_state["origin_images"][video_state["select_frame_number"]]),
+        points=np.array(prompt["input_point"]),
+        labels=np.array(prompt["input_label"]),
+        multimask=prompt["multimask_output"],
+    )
+    video_state["masks"][video_state["select_frame_number"]] = mask
+    video_state["logits"][video_state["select_frame_number"]] = logit
+    video_state["painted_images"][video_state["select_frame_number"]] = save_image_to_userfolder(video_state, index=video_state["select_frame_number"], image=cv2.cvtColor(np.asarray(painted_image),cv2.COLOR_BGR2RGB),type=False)
+
+    operation_log = [("",""), ("Segmented with SAM. Add positive and negative points by clicking, or press the Clear clicks button to refresh the image. Press the Add mask button when you are satisfied with the segmentation.","Normal")]
+    return painted_image, video_state, interactive_state, operation_log
+

| 215 |

+

def add_multi_mask(video_state, interactive_state, mask_dropdown):

|

| 216 |

+

try:

|

| 217 |

+

mask = video_state["masks"][video_state["select_frame_number"]]

|

| 218 |

+

interactive_state["multi_mask"]["masks"].append(mask)

|

| 219 |

+

interactive_state["multi_mask"]["mask_names"].append("mask_{:03d}".format(len(interactive_state["multi_mask"]["masks"])))

|

| 220 |

+

mask_dropdown.append("mask_{:03d}".format(len(interactive_state["multi_mask"]["masks"])))

|

| 221 |

+

select_frame, run_status = show_mask(video_state, interactive_state, mask_dropdown)

|

| 222 |

+

|

| 223 |

+

operation_log = [("",""),("Added a mask. Use the mask selection for target tracking or inpainting.","Normal")]

|

| 224 |

+

except Exception:

|

| 225 |

+

select_frame, operation_log = gr.update(), [("Please click the left image to generate a mask.", "Error"), ("","")]

|

| 226 |

+

return interactive_state, gr.update(choices=interactive_state["multi_mask"]["mask_names"], value=mask_dropdown), select_frame, [[],[]], operation_log

|

| 227 |

+

|

| 228 |

+

def clear_click(video_state, click_state):

|

| 229 |

+

click_state = [[],[]]

|

| 230 |

+

template_frame = read_image_from_userfolder(video_state["origin_images"][video_state["select_frame_number"]])

|

| 231 |

+

operation_log = [("",""), ("Clear points history and refresh the image.","Normal")]

|

| 232 |

+

return template_frame, click_state, operation_log

|

| 233 |

+

|

| 234 |

+

def remove_multi_mask(interactive_state, mask_dropdown):

|

| 235 |

+

interactive_state["multi_mask"]["mask_names"]= []

|

| 236 |

+

interactive_state["multi_mask"]["masks"] = []

|

| 237 |

+

|

| 238 |

+

operation_log = [("",""), ("Removed all masks. Please add new masks.","Normal")]

|

| 239 |

+

return interactive_state, gr.update(choices=[],value=[]), operation_log

|

| 240 |

+

|

| 241 |

+

def show_mask(video_state, interactive_state, mask_dropdown):

|

| 242 |

+

mask_dropdown.sort()

|

| 243 |

+

select_frame = read_image_from_userfolder(video_state["origin_images"][video_state["select_frame_number"]])

|

| 244 |

+

|

| 245 |

+

for i in range(len(mask_dropdown)):

|

| 246 |

+

mask_number = int(mask_dropdown[i].split("_")[1]) - 1

|

| 247 |

+

mask = interactive_state["multi_mask"]["masks"][mask_number]

|

| 248 |

+

select_frame = mask_painter(select_frame, mask.astype('uint8'), mask_color=mask_number+2)

|

| 249 |

+

|

| 250 |

+

operation_log = [("",""), ("Select {} for tracking or inpainting".format(mask_dropdown),"Normal")]

|

| 251 |

+

return select_frame, operation_log

|
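The dropdown entries handled by `show_mask` follow a `mask_NNN` naming scheme, and the function recovers a zero-based list index by splitting on the underscore. A minimal sketch of that mapping follows; the helper names `mask_name` and `mask_index` are hypothetical and do not appear in `app.py`.

```python
def mask_name(count: int) -> str:
    """Build the 1-based dropdown label for the count-th mask (hypothetical helper)."""
    return "mask_{:03d}".format(count)


def mask_index(name: str) -> int:
    """Recover the 0-based list index from a label such as 'mask_003'."""
    return int(name.split("_")[1]) - 1


names = [mask_name(n) for n in (3, 1, 2)]
names.sort()  # zero-padded labels sort in numeric order
indices = [mask_index(n) for n in names]
```

Because the labels are zero-padded to three digits, a plain string sort matches numeric order only while there are fewer than 1000 masks.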

| 252 |

+

|

| 253 |

+

# tracking vos

|

| 254 |

+

def vos_tracking_video(video_state, interactive_state, mask_dropdown):

|

| 255 |

+

operation_log = [("",""), ("Track the selected masks, and then you can select the masks for inpainting.","Normal")]

|

| 256 |

+

model.xmem.clear_memory()

|

| 257 |

+

if interactive_state["track_end_number"]:

|

| 258 |

+

following_frames = video_state["origin_images"][video_state["select_frame_number"]:interactive_state["track_end_number"]]

|

| 259 |

+

else:

|

| 260 |

+

following_frames = video_state["origin_images"][video_state["select_frame_number"]:]

|

| 261 |

+

|

| 262 |

+

if interactive_state["multi_mask"]["masks"]:

|

| 263 |

+

if len(mask_dropdown) == 0:

|

| 264 |

+

mask_dropdown = ["mask_001"]

|

| 265 |

+

mask_dropdown.sort()

|

| 266 |

+

template_mask = interactive_state["multi_mask"]["masks"][int(mask_dropdown[0].split("_")[1]) - 1] * (int(mask_dropdown[0].split("_")[1]))

|

| 267 |

+

for i in range(1,len(mask_dropdown)):

|

| 268 |

+

mask_number = int(mask_dropdown[i].split("_")[1]) - 1

|

| 269 |

+

template_mask = np.clip(template_mask+interactive_state["multi_mask"]["masks"][mask_number]*(mask_number+1), 0, mask_number+1)

|

| 270 |

+

video_state["masks"][video_state["select_frame_number"]]= template_mask

|

| 271 |

+

else:

|

| 272 |

+

template_mask = video_state["masks"][video_state["select_frame_number"]]

|

| 273 |

+

fps = video_state["fps"]

|

| 274 |

+

|

| 275 |

+

# operation error

|

| 276 |

+

if len(np.unique(template_mask))==1:

|

| 277 |

+

template_mask[0][0]=1

|

| 278 |

+

operation_log = [("Error! Please add at least one mask to track by clicking the left image.","Error"), ("","")]

|

| 279 |

+

# return video_output, video_state, interactive_state, operation_error

|

| 280 |

+

masks, logits, painted_images_path = model.generator(images=following_frames, template_mask=template_mask, video_state=video_state)

|

| 281 |

+

# clear GPU memory

|

| 282 |

+

model.xmem.clear_memory()

|

| 283 |

+

|

| 284 |

+

if interactive_state["track_end_number"]:

|

| 285 |

+

video_state["masks"][video_state["select_frame_number"]:interactive_state["track_end_number"]] = masks

|

| 286 |

+

video_state["logits"][video_state["select_frame_number"]:interactive_state["track_end_number"]] = logits

|

| 287 |

+

video_state["painted_images"][video_state["select_frame_number"]:interactive_state["track_end_number"]] = painted_images_path

|

| 288 |

+

else:

|

| 289 |

+

video_state["masks"][video_state["select_frame_number"]:] = masks

|

| 290 |

+

video_state["logits"][video_state["select_frame_number"]:] = logits

|

| 291 |

+

video_state["painted_images"][video_state["select_frame_number"]:] = painted_images_path

|

| 292 |

+

|

| 293 |

+

video_output = generate_video_from_frames(video_state["painted_images"], output_path="./result/track/{}".format(video_state["video_name"]), fps=fps) # import video_input to name the output video

|

| 294 |

+

interactive_state["inference_times"] += 1

|

| 295 |

+

|

| 296 |

+

print("For generating this tracking result, inference times: {}, click times: {}, positive: {}, negative: {}".format(interactive_state["inference_times"],

|

| 297 |

+

interactive_state["positive_click_times"]+interactive_state["negative_click_times"],

|

| 298 |

+

interactive_state["positive_click_times"],

|

| 299 |

+

interactive_state["negative_click_times"]))

|

| 300 |

+

|

| 301 |

+

#### shanggao code for mask save

|

| 302 |

+

if interactive_state["mask_save"]:

|

| 303 |

+

if not os.path.exists('./result/mask/{}'.format(video_state["video_name"].split('.')[0])):

|

| 304 |

+

os.makedirs('./result/mask/{}'.format(video_state["video_name"].split('.')[0]))

|

| 305 |

+

i = 0

|

| 306 |

+

print("save mask")

|

| 307 |

+

for mask in video_state["masks"]:

|

| 308 |

+

np.save(os.path.join('./result/mask/{}'.format(video_state["video_name"].split('.')[0]), '{:05d}.npy'.format(i)), mask)

|

| 309 |

+

i+=1

|

| 310 |

+

#### shanggao code for mask save

|

| 311 |

+

return video_output, video_state, interactive_state, operation_log

|
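The mask-merging step in `vos_tracking_video` combines the selected binary masks into a single label map, where each pixel holds the number of the mask it belongs to. The sketch below isolates that step under stated assumptions: the function name `merge_masks` is hypothetical, and the explicit `astype(np.uint8)` cast is added here for clarity rather than taken from the original code.

```python
import numpy as np


def merge_masks(masks, selected_numbers):
    """Merge binary masks into one label map; selected_numbers are 1-based and sorted."""
    first = selected_numbers[0]
    template = masks[first - 1].astype(np.uint8) * first
    for number in selected_numbers[1:]:
        # Adding and then clipping to `number` makes the higher label win on overlap.
        layer = masks[number - 1].astype(np.uint8) * number
        template = np.clip(template + layer, 0, number)
    return template


masks = [np.array([[1, 1, 0]], dtype=bool), np.array([[0, 1, 1]], dtype=bool)]
label_map = merge_masks(masks, [1, 2])
```

In this example the middle pixel is covered by both masks and ends up with label 2, which shows that overlapping regions are assigned to the mask with the larger number.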

| 312 |

+

|

| 313 |

+

|

| 314 |

+

|

| 315 |

+

# inpaint

|

| 316 |

+

def inpaint_video(video_state, interactive_state, mask_dropdown):

|

| 317 |

+

operation_log = [("",""), ("Removed the selected masks.","Normal")]

|

| 318 |

+

|

| 319 |

+

# solve memory

|

| 320 |

+

frames = np.asarray(video_state["origin_images"])

|

| 321 |

+

fps = video_state["fps"]

|

| 322 |

+

inpaint_masks = np.asarray(video_state["masks"])

|

| 323 |

+

if len(mask_dropdown) == 0:

|

| 324 |

+

mask_dropdown = ["mask_001"]

|

| 325 |

+

mask_dropdown.sort()

|

| 326 |

+

# convert mask_dropdown to mask numbers

|

| 327 |

+

inpaint_mask_numbers = [int(mask_dropdown[i].split("_")[1]) for i in range(len(mask_dropdown))]

|

| 328 |

+

# iterate over all mask labels and zero out those that are not in mask_dropdown

|

| 329 |

+

unique_masks = np.unique(inpaint_masks)

|

| 330 |

+

num_masks = len(unique_masks) - 1

|

| 331 |

+

for i in unique_masks[unique_masks != 0]:

|

| 332 |

+

if i in inpaint_mask_numbers:

|

| 333 |

+

continue

|

| 334 |

+

inpaint_masks[inpaint_masks==i] = 0

|

| 335 |

+

# inpaint for videos

|

| 336 |

+

|

| 337 |

+

try:

|

| 338 |

+

inpainted_frames = model.baseinpainter.inpaint(frames, inpaint_masks, ratio=interactive_state["resize_ratio"]) # numpy array, T, H, W, 3

|

| 339 |

+

video_output = generate_video_from_paintedframes(inpainted_frames, output_path="./result/inpaint/{}".format(video_state["video_name"]), fps=fps)

|

| 340 |

+

except Exception:

|

| 341 |

+

operation_log = [("Error! You are trying to inpaint without mask input. Please track the selected mask first, and then press inpaint. If VRAM is exceeded, please use the resize ratio to scale down the image size.","Error"), ("","")]

|

| 342 |

+

inpainted_frames = video_state["origin_images"]

|

| 343 |

+

video_output = generate_video_from_frames(inpainted_frames, output_path="./result/inpaint/{}".format(video_state["video_name"]), fps=fps) # import video_input to name the output video

|

| 344 |

+

return video_output, operation_log

|
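One way to express the label filtering in `inpaint_video` without depending on how the labels are numbered is `np.isin`. This is a sketch of an alternative, not the code in `app.py`; the function name `keep_selected_labels` is hypothetical.

```python
import numpy as np


def keep_selected_labels(label_masks, selected_numbers):
    """Return a copy where every label not in selected_numbers is set to 0."""
    filtered = label_masks.copy()
    filtered[~np.isin(filtered, selected_numbers)] = 0
    return filtered


# Labels 1 and 3 are present, label 2 is not: the labels are not consecutive.
label_masks = np.array([[0, 1, 3], [3, 3, 1]])
filtered = keep_selected_labels(label_masks, [1])
```

Working on a copy leaves the tracked masks untouched, so a later inpainting run with a different selection still sees all labels.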

| 345 |

+

|

| 346 |

+

|

| 347 |

+

# generate video after vos inference

|

| 348 |

+

def generate_video_from_frames(frames_path, output_path, fps=30):

|

| 349 |

+

"""

|

| 350 |

+

Generates a video from a list of frame image paths.

|

| 351 |

+

|

| 352 |

+

Args:

|

| 353 |

+

frames_path (list of str): Paths of the frame images to include in the video.

|

| 354 |

+

output_path (str): The path to save the generated video.

|

| 355 |

+

fps (int, optional): The frame rate of the output video. Defaults to 30.

|

| 356 |

+

"""

|

| 357 |

+

# height, width, layers = frames[0].shape

|

| 358 |

+

# fourcc = cv2.VideoWriter_fourcc(*"mp4v")

|

| 359 |

+

# video = cv2.VideoWriter(output_path, fourcc, fps, (width, height))

|

| 360 |

+

# print(output_path)

|

| 361 |

+

# for frame in frames:

|

| 362 |

+

# video.write(frame)

|

| 363 |

+

|

| 364 |

+

# video.release()

|

| 365 |

+

frames = []

|

| 366 |

+

for file in frames_path:

|

| 367 |

+

frames.append(read_image_from_userfolder(file))

|

| 368 |

+

frames = torch.from_numpy(np.asarray(frames))

|

| 369 |

+

if not os.path.exists(os.path.dirname(output_path)):

|

| 370 |

+

os.makedirs(os.path.dirname(output_path))

|

| 371 |

+

torchvision.io.write_video(output_path, frames, fps=fps, video_codec="libx264")

|

| 372 |

+

return output_path

|

| 373 |

+

|

| 374 |

+

def generate_video_from_paintedframes(frames, output_path, fps=30):

|

| 375 |

+

"""

|

| 376 |

+

Generates a video from a list of frames.

|

| 377 |

+

|

| 378 |

+

Args:

|

| 379 |

+

frames (list of numpy arrays): The frames to include in the video.

|

| 380 |

+

output_path (str): The path to save the generated video.

|

| 381 |

+

fps (int, optional): The frame rate of the output video. Defaults to 30.

|

| 382 |

+

"""

|

| 383 |

+

# height, width, layers = frames[0].shape

|

| 384 |

+

# fourcc = cv2.VideoWriter_fourcc(*"mp4v")

|

| 385 |

+

# video = cv2.VideoWriter(output_path, fourcc, fps, (width, height))

|

| 386 |

+

# print(output_path)

|

| 387 |

+

# for frame in frames:

|

| 388 |

+

# video.write(frame)

|

| 389 |

+

|

| 390 |

+

# video.release()

|

| 391 |

+

frames = torch.from_numpy(np.asarray(frames))

|

| 392 |

+

if not os.path.exists(os.path.dirname(output_path)):

|

| 393 |

+

os.makedirs(os.path.dirname(output_path))

|

| 394 |

+

torchvision.io.write_video(output_path, frames, fps=fps, video_codec="libx264")

|

| 395 |

+

return output_path

|
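Both video writers create the output directory before calling `torchvision.io.write_video`. The same step can be sketched with `os.makedirs(..., exist_ok=True)`, which removes the separate existence check; the helper name `ensure_parent_dir` is hypothetical and not part of `app.py`.

```python
import os
import tempfile


def ensure_parent_dir(output_path: str) -> str:
    """Create the parent directory of output_path if needed and return the path."""
    parent = os.path.dirname(output_path)
    if parent:  # a bare filename has no parent directory to create
        os.makedirs(parent, exist_ok=True)
    return output_path


with tempfile.TemporaryDirectory() as tmp:
    target = ensure_parent_dir(os.path.join(tmp, "result", "track", "demo.mp4"))
    created = os.path.isdir(os.path.dirname(target))
```

Only the directory is created here; writing the video file itself is left to the caller.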

| 396 |

+

|

| 397 |

+

|

| 398 |

+

# args, defined in track_anything.py

|

| 399 |

+

args = parse_augment()

|

| 400 |

+

|

| 401 |

+

# check and download checkpoints if needed

|

| 402 |

+

SAM_checkpoint_dict = {

|

| 403 |

+

'vit_h': "sam_vit_h_4b8939.pth",

|

| 404 |

+

'vit_l': "sam_vit_l_0b3195.pth",

|

| 405 |

+

"vit_b": "sam_vit_b_01ec64.pth"

|

| 406 |

+

}

|

| 407 |

+

SAM_checkpoint_url_dict = {

|

| 408 |

+

'vit_h': "https://dl.fbaipublicfiles.com/segment_anything/sam_vit_h_4b8939.pth",

|

| 409 |

+

'vit_l': "https://dl.fbaipublicfiles.com/segment_anything/sam_vit_l_0b3195.pth",

|

| 410 |

+

'vit_b': "https://dl.fbaipublicfiles.com/segment_anything/sam_vit_b_01ec64.pth"

|

| 411 |

+

}

|

| 412 |

+

sam_checkpoint = SAM_checkpoint_dict[args.sam_model_type]

|

| 413 |

+

sam_checkpoint_url = SAM_checkpoint_url_dict[args.sam_model_type]

|

| 414 |

+

xmem_checkpoint = "XMem-s012.pth"

|

| 415 |

+

xmem_checkpoint_url = "https://github.com/hkchengrex/XMem/releases/download/v1.0/XMem-s012.pth"

|

| 416 |

+

e2fgvi_checkpoint = "E2FGVI-HQ-CVPR22.pth"

|

| 417 |

+

e2fgvi_checkpoint_id = "10wGdKSUOie0XmCr8SQ2A2FeDe-mfn5w3"

|

| 418 |

+

|

| 419 |

+

|

| 420 |

+

folder = "./checkpoints"

|

| 421 |

+

SAM_checkpoint = download_checkpoint(sam_checkpoint_url, folder, sam_checkpoint)

|

| 422 |

+

xmem_checkpoint = download_checkpoint(xmem_checkpoint_url, folder, xmem_checkpoint)

|

| 423 |

+

e2fgvi_checkpoint = download_checkpoint_from_google_drive(e2fgvi_checkpoint_id, folder, e2fgvi_checkpoint)

|

| 424 |

+

# args.port = 12213

|

| 425 |

+

# args.device = "cuda:8"

|

| 426 |

+

# args.mask_save = True

|

| 427 |

+

|

| 428 |

+

# initialize sam, xmem, e2fgvi models

|

| 429 |

+

model = TrackingAnything(SAM_checkpoint, xmem_checkpoint, e2fgvi_checkpoint,args)

|

| 430 |

+

|

| 431 |

+

|

| 432 |

+

title = """<p><h1 align="center">Track-Anything</h1></p>

|

| 433 |

+

"""

|

| 434 |

+

description = """<p>Gradio demo for Track Anything, a flexible and interactive tool for video object tracking, segmentation, and inpainting. To use it, simply upload your video, or click one of the examples to load it. Code: <a href="https://github.com/gaomingqi/Track-Anything">Track-Anything</a> <a href="https://huggingface.co/spaces/VIPLab/Track-Anything?duplicate=true"><img style="display: inline; margin-top: 0em; margin-bottom: 0em" src="https://bit.ly/3gLdBN6" alt="Duplicate Space" /></a> If you get stuck on unknown errors, please feel free to watch the tutorial video.</p>"""

|

| 435 |

+

|

| 436 |

+

|

| 437 |

+

with gr.Blocks() as iface:

|

| 438 |

+

"""

|

| 439 |

+

state for

|

| 440 |

+

"""

|

| 441 |

+

click_state = gr.State([[],[]])

|

| 442 |

+

interactive_state = gr.State({

|

| 443 |

+

"inference_times": 0,

|

| 444 |

+

"negative_click_times" : 0,

|

| 445 |

+

"positive_click_times": 0,

|

| 446 |

+

"mask_save": args.mask_save,

|

| 447 |

+

"multi_mask": {

|

| 448 |

+

"mask_names": [],

|

| 449 |

+

"masks": []

|

| 450 |

+

},

|

| 451 |

+

"track_end_number": None,

|

| 452 |

+

"resize_ratio": 0.6

|

| 453 |

+

}

|

| 454 |

+

)

|

| 455 |

+

|

| 456 |

+

video_state = gr.State(

|

| 457 |

+

{

|

| 458 |

+

"user_name": "",

|

| 459 |

+

"video_name": "",

|

| 460 |

+

"origin_images": None,

|

| 461 |

+

"painted_images": None,

|

| 462 |

+

"masks": None,

|

| 463 |

+

"inpaint_masks": None,

|

| 464 |

+

"logits": None,

|

| 465 |

+

"select_frame_number": 0,

|

| 466 |

+

"fps": 30

|

| 467 |

+

}

|

| 468 |

+

)

|

| 469 |

+

gr.Markdown(title)

|

| 470 |

+

gr.Markdown(description)

|

| 471 |

+

with gr.Row():

|

| 472 |

+

with gr.Column():

|

| 473 |

+

with gr.Tab("Test"):

|

| 474 |

+

# for user video input

|

| 475 |

+

with gr.Column():

|

| 476 |

+

with gr.Row(scale=0.4):

|

| 477 |

+

video_input = gr.Video(autosize=True)

|

| 478 |

+

with gr.Column():

|

| 479 |

+

video_info = gr.Textbox(label="Video Info")

|

| 480 |

+

resize_info = gr.Textbox(value="If you want to use the inpaint function, it is best to git clone the repo and use a machine with more VRAM locally. \

|

| 481 |

+

Alternatively, you can use the resize ratio slider to scale down the original image to around 360P resolution for faster processing.", label="Tips for running this demo.")

|

| 482 |

+

resize_ratio_slider = gr.Slider(minimum=0.02, maximum=1, step=0.02, value=0.6, label="Resize ratio", visible=True)

|

| 483 |

+

|

| 484 |

+

|

| 485 |

+

with gr.Row():

|

| 486 |

+

# put the template frame under the radio button

|

| 487 |

+

with gr.Column():

|

| 488 |

+

# extract frames

|

| 489 |

+

with gr.Column():

|

| 490 |

+

extract_frames_button = gr.Button(value="Get video info", interactive=True, variant="primary")

|

| 491 |

+

|

| 492 |

+

# click point settings: negative or positive, continuous or single mode

|

| 493 |

+

with gr.Row():

|

| 494 |

+

with gr.Row():

|

| 495 |

+

point_prompt = gr.Radio(

|

| 496 |

+

choices=["Positive", "Negative"],

|

| 497 |

+

value="Positive",

|

| 498 |

+

label="Point prompt",

|

| 499 |

+

interactive=True,

|

| 500 |

+

visible=False)

|

| 501 |

+

remove_mask_button = gr.Button(value="Remove mask", interactive=True, visible=False)

|

| 502 |

+

clear_button_click = gr.Button(value="Clear clicks", interactive=True, visible=False).style(height=160)

|

| 503 |

+

Add_mask_button = gr.Button(value="Add mask", interactive=True, visible=False)

|

| 504 |

+

template_frame = gr.Image(type="pil",interactive=True, elem_id="template_frame", visible=False).style(height=360)

|

| 505 |

+

image_selection_slider = gr.Slider(minimum=1, maximum=100, step=1, value=1, label="Track start frame", visible=False)

|

| 506 |

+

track_pause_number_slider = gr.Slider(minimum=1, maximum=100, step=1, value=1, label="Track end frame", visible=False)

|

| 507 |

+

|

| 508 |

+

with gr.Column():

|

| 509 |

+

run_status = gr.HighlightedText(value=[("Run","Error"),("Status","Normal")], visible=True)

|

| 510 |

+

mask_dropdown = gr.Dropdown(multiselect=True, value=[], label="Mask selection", info=".", visible=False)

|

| 511 |

+

video_output = gr.Video(autosize=True, visible=False).style(height=360)

|

| 512 |

+

with gr.Row():

|

| 513 |

+

tracking_video_predict_button = gr.Button(value="Tracking", visible=False)

|

| 514 |

+

inpaint_video_predict_button = gr.Button(value="Inpaint", visible=False)

|

| 515 |

+

# set example

|

| 516 |

+

gr.Markdown("## Examples")

|

| 517 |

+

gr.Examples(

|

| 518 |

+