Update README.md

README.md


@@ -45,7 +45,7 @@ widget:

|

|

| 45 |

|

| 46 |

|

| 47 |

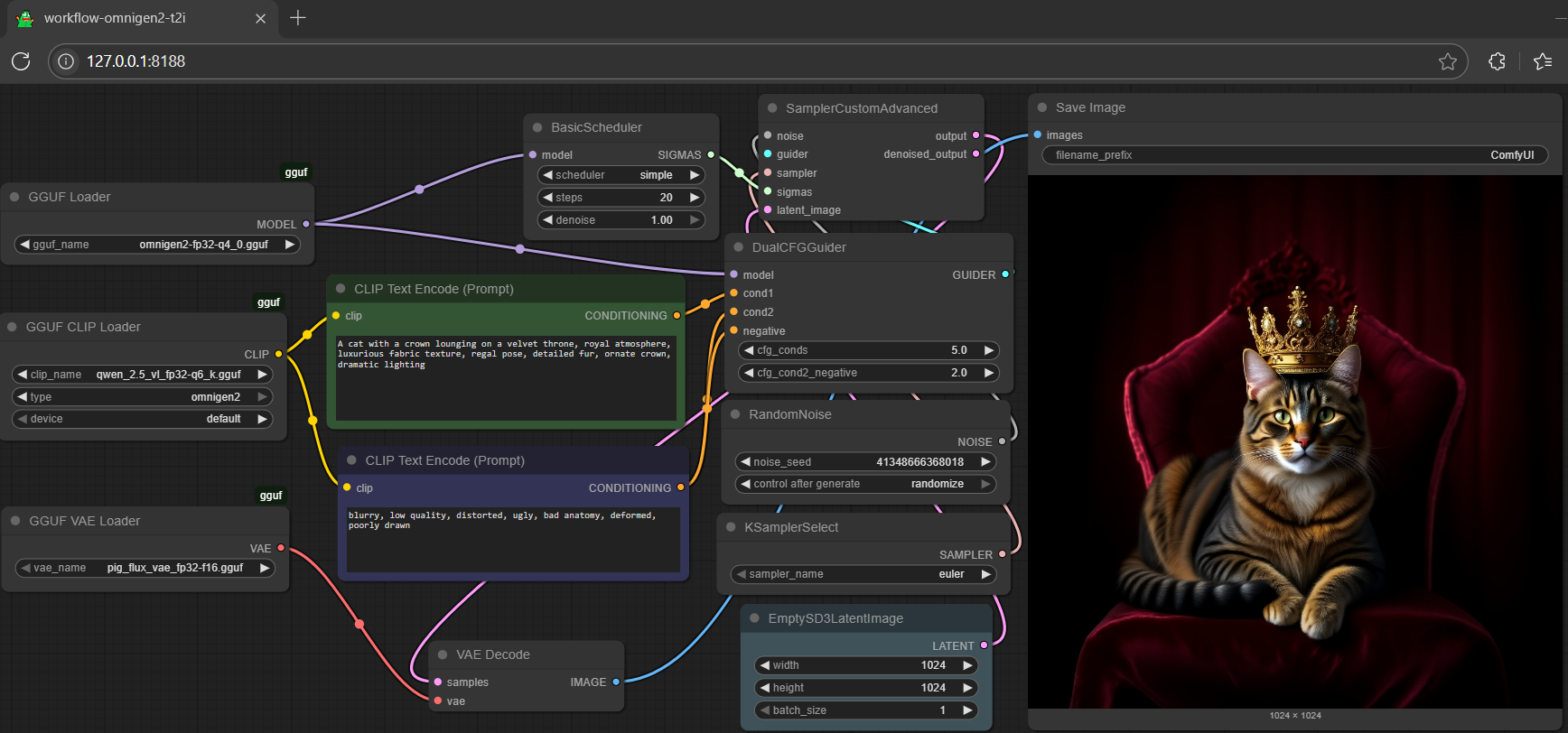

- t2i is roughly 3x to 5x faster than i2i or image editing

|

| 48 |

-

- get more **qwen2.5-vl-3b** gguf encoder either [here](https://huggingface.co/calcuis/pig-encoder/tree/main) (

|

| 49 |

- alternatively, you could get fp8-e4m3fn safetensors encoder [here](https://huggingface.co/chatpig/encoder/blob/main/qwen_2.5_vl_3b_fp8_e4m3fn.safetensors), or make it with `TENSOR Cutter (Beta)`; works pretty good as well; and don't even need to switch loader (gguf clip loader supports scaled fp8 safetensors)

|

| 50 |

|

| 51 |

### **reference**

|

|

|

|

| 45 |

|

| 46 |

|

| 47 |

- t2i is roughly 3x to 5x faster than i2i or image editing

|

| 48 |

+

- get more **qwen2.5-vl-3b** gguf encoder either [here](https://huggingface.co/calcuis/pig-encoder/tree/main) (pig quant) or [here](https://huggingface.co/chatpig/qwen2.5-vl-3b-it-gguf/tree/main) (llama.cpp quant)

|

| 49 |

- alternatively, you could get fp8-e4m3fn safetensors encoder [here](https://huggingface.co/chatpig/encoder/blob/main/qwen_2.5_vl_3b_fp8_e4m3fn.safetensors), or make it with `TENSOR Cutter (Beta)`; works pretty good as well; and don't even need to switch loader (gguf clip loader supports scaled fp8 safetensors)

|

| 50 |

|

| 51 |

### **reference**

|
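The fp8 encoder link in the diff above could also be fetched from a script instead of the browser. The following is a minimal sketch, assuming the `huggingface_hub` package is installed. The helper names `parse_hf_blob_url` and `download_encoder` and the `models/text_encoders` target folder are hypothetical choices for illustration and do not come from the README.

```python
from urllib.parse import urlparse

# URL copied from the README line about the fp8-e4m3fn safetensors encoder.
FP8_ENCODER_URL = (
    "https://huggingface.co/chatpig/encoder/blob/main/"
    "qwen_2.5_vl_3b_fp8_e4m3fn.safetensors"
)


def parse_hf_blob_url(url: str) -> tuple[str, str, str]:
    """Split a huggingface.co blob URL into (repo_id, revision, filename)."""
    parts = urlparse(url).path.strip("/").split("/")
    # Expected layout: <owner>/<repo>/blob/<revision>/<path inside repo>
    if len(parts) < 5 or parts[2] != "blob":
        raise ValueError(f"not a Hugging Face blob URL: {url}")
    return "/".join(parts[:2]), parts[3], "/".join(parts[4:])


def download_encoder(url: str, local_dir: str = "models/text_encoders") -> str:
    """Download the file behind a blob URL and return its local path.

    local_dir is an assumed location; point it at wherever your loader
    looks for text encoders.
    """
    # Imported lazily so the URL helper works without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    repo_id, revision, filename = parse_hf_blob_url(url)
    return hf_hub_download(
        repo_id=repo_id,
        filename=filename,
        revision=revision,
        local_dir=local_dir,
    )
```

Calling `download_encoder(FP8_ENCODER_URL)` would place the file under the chosen folder. The gguf links in the diff point at repository trees rather than single files, so they would need a specific filename picked from the repo before the same approach applies.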